Research:Onboarding new Wikipedians/OB6

The purpose of this experiment is to compare the current onboarding experience (very similar to R:OB5) to a new test version, which presents calls to action after redirecting the user back to the page they were on prior to registration. Learn more about the test user experience.

Research questions

RQ: How does OB6 affect newcomers' editing activities?

OB6 represents a substantial improvement to the user experience. Rather than directing users to a Special page after they register an account, this change to the onboarding experience lets a newcomer choose whether to learn to edit the article they were visiting, or proceed directly to a copy-editing task. We suspect that by minimizing the interruption to newcomers' browsing, reducing the number of options provided, and providing immediate access to work that newcomers can contribute productively to, we'll increase both newcomer contributions and their rate of success.

Since newcomers with OB6 will be brought back to the page they were looking at, we suspect that they will be more likely to make an edit right after registering and will also edit more overall than newcomers in the control condition.

Hypothesis 1: Newcomers with OB6 will contribute more.

Since newcomers with OB6 will be directed toward copy-editing work -- a type of work that newcomers should be able to contribute productively to without learning much about Wikipedia -- we expect that this will increase the proportion of newcomers who manage to make productive contributions.

Hypothesis 2: Newcomers with OB6 will be more likely to make productive contributions.

Although we hypothesize that newcomers will contribute more with OB6, our means of encouraging them to contribute focus on a low-difficulty type of work: copy editing. Due to the low difficulty of this task, we expect two effects that minimize the possibility that newcomers will make contributions that lead to them being reverted or blocked.

Methods

For the week of October 8-15, 2013, we will randomly split newly registered users between a control condition (current, OB5-like) and a test condition (OB6) based on their user IDs.

- OB6 cohort: users who were presented with the new OB6 onboarding experience, and who elected to either edit the page they were redirected to, or accept a Getting Started suggested page to copyedit

- Control cohort: users who were presented with the current default OB5-like onboarding experience, and who accepted one of the three types of suggested tasks (copyediting, adding wiki links, clarifying content)
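The split by user ID described above can be sketched as follows. This is only an illustration: the source says the assignment is based on user IDs but does not give the rule, so the even/odd rule and the function names `assign_bucket` and `bucket_counts` are assumptions.

```python
def assign_bucket(user_id: int) -> str:
    """Deterministically assign a newly registered user to a condition.

    Assumption: odd user IDs go to the test condition (OB6) and even IDs
    go to the control condition. The actual rule is not stated in the source.
    """
    return "test" if user_id % 2 == 1 else "control"


def bucket_counts(user_ids):
    """Count how many of the given user IDs fall into each condition."""
    counts = {"control": 0, "test": 0}
    for user_id in user_ids:
        counts[assign_bucket(user_id)] += 1
    return counts
```

Because the assignment depends only on the ID, the same user always lands in the same condition, and the bucket can be recomputed at analysis time without storing it.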

Metrics (quantitative)

Based on the activities of these newcomers, we'll capture a set of behavioral measures from the MediaWiki database to check our hypotheses and generate some usage statistics from logging. See Schema:GettingStartedOnRedirect.

Productivity

As in previous experiments, we'll observe the number of edits that newcomers save with a focus on two key thresholds:

- 1+ NS0 edits

- 5+ NS0 edits
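As a rough sketch of how the two thresholds above could be computed, the function below takes one `(user_id, namespace)` tuple per saved edit and returns the proportion of a cohort reaching each threshold. The input shape and the name `threshold_proportions` are assumptions for illustration, not the queries used in the study.

```python
from collections import Counter


def threshold_proportions(edits, cohort_users, thresholds=(1, 5)):
    """Proportion of a cohort with at least N article (NS0) edits.

    edits: iterable of (user_id, namespace) tuples, one per saved edit.
    cohort_users: every user ID in the cohort, including non-editors.
    """
    cohort = set(cohort_users)
    if not cohort:
        raise ValueError("cohort must contain at least one user")
    # Count only edits in the article namespace (namespace 0).
    ns0_counts = Counter(
        user_id for user_id, namespace in edits
        if namespace == 0 and user_id in cohort
    )
    return {
        t: sum(1 for user_id in cohort if ns0_counts[user_id] >= t) / len(cohort)
        for t in thresholds
    }
```

Users with no edits stay in the denominator, so the result is a proportion of all registered users in the cohort rather than of editors only.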

Unlike previous experiments, we'll include some more intuitive measures of newcomers' investment based on their edit sessions. Specifically, we'll measure the number of sessions newcomers complete in their first week and the amount of time they spend editing within those sessions.
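One common way to derive edit sessions is to group a user's edits whenever the gap between consecutive edits stays under an inactivity cutoff. The sketch below assumes a one-hour cutoff and measures session time as the span between the first and last edit of a session; both choices, and the function names, are assumptions, since the source does not define how sessions are delimited.

```python
from datetime import datetime, timedelta

# Assumption: a gap of more than one hour between edits starts a new session.
SESSION_CUTOFF = timedelta(hours=1)


def edit_sessions(timestamps, cutoff=SESSION_CUTOFF):
    """Group edit timestamps into sessions separated by gaps longer than cutoff."""
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][-1] <= cutoff:
            sessions[-1].append(ts)
        else:
            sessions.append([ts])
    return sessions


def session_stats(timestamps, cutoff=SESSION_CUTOFF):
    """Return (number of sessions, seconds between first and last edit per session)."""
    sessions = edit_sessions(timestamps, cutoff)
    seconds = sum((s[-1] - s[0]).total_seconds() for s in sessions)
    return len(sessions), seconds
```

Under this definition a session containing a single edit contributes zero seconds, so the time measure is a lower bound on actual time spent editing.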

In order to get a sense for the proportion of newcomers who make productive contributions in their session of editing, we'll look for NS0 edits that are not quickly reverted, under the assumption (based on previous research[1]) that automated systems like ClueBot NG and semi-automated tools like Huggle will remove most damage within minutes. We'll consider a newcomer's NS0 edit to be "productive" if it is not reverted within 48 hours.
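The "productive" definition above can be written as a small predicate. This is a minimal sketch: the argument names are hypothetical, and it assumes the revert time for each edit (if any) has already been determined by some other means.

```python
from datetime import datetime, timedelta

REVERT_WINDOW = timedelta(hours=48)  # threshold stated in the text above


def is_productive(namespace, saved_at, reverted_at=None, window=REVERT_WINDOW):
    """True if an article (NS0) edit was not reverted within the window."""
    if namespace != 0:
        return False
    if reverted_at is None:
        return True
    # A revert exactly at the 48-hour mark still counts as "within 48 hours".
    return reverted_at - saved_at > window


def has_productive_edit(edits):
    """edits: iterable of (namespace, saved_at, reverted_at or None) tuples."""
    return any(is_productive(ns, saved, reverted) for ns, saved, reverted in edits)
```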

Quality

In order to explore the quality of the contributions by newcomers, we'll measure whether users made at least one productive edit (i.e. an edit that was not reverted within 48 hours), as well as the long-term proportion of reverts for users who continued editing.

We'll also examine the type of edits and the type of pages edited by users in the test.

Survival

In addition to the amount of time spent editing, number of edits, and related quality metrics, we'll compare whether new editors from each cohort stick around on Wikipedia. We'll measure survival based on whether newcomers continue editing past a week and throughout the following 30 days.
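One possible reading of this survival measure is sketched below: a newcomer counts as surviving if they save at least one edit in the 30 days that follow their first week. The source does not spell out the exact window boundaries, so this interpretation and the name `survived` are assumptions.

```python
from datetime import datetime, timedelta


def survived(registered_at, edit_timestamps,
             trial=timedelta(days=7), survival=timedelta(days=30)):
    """True if any edit falls in the survival window after the first week.

    Assumption: the window starts 7 days after registration and lasts 30 days.
    """
    start = registered_at + trial
    end = start + survival
    return any(start <= ts < end for ts in edit_timestamps)
```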

Funnel analysis

Funnel analysis of these cohorts will be limited, as we are not tracking the full edit-preview-save funnel in this experiment. However, we can examine the click-through rate (CTR) for users presented with each test or control experience, with the funnel limited to the point at which they accept a call to action.
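The click-through rate described above is the share of users shown a call to action who went on to accept one. A minimal sketch, assuming the logged events have already been reduced to two collections of user IDs (the function and parameter names are hypothetical):

```python
def click_through_rate(shown_user_ids, accepted_user_ids):
    """Fraction of users shown a call to action who accepted one."""
    shown = set(shown_user_ids)
    if not shown:
        return 0.0
    # Only count acceptances from users who were actually shown the CTA.
    accepted = shown & set(accepted_user_ids)
    return len(accepted) / len(shown)
```

Working with sets of user IDs rather than raw event counts keeps a user who clicks several times from being counted more than once.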

We may also examine how many users elected to take the full guided tour of how to edit, in either the test or control group. Each tour step, including whether the user closed a tour, is logged as part of Schema:GuidedTour.

Results

Funnel analysis

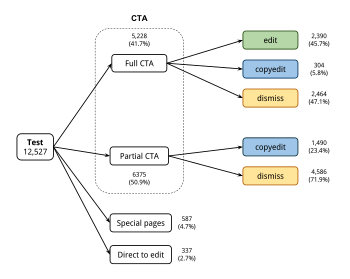

In order to get a sense for how newcomers were making use of the new version of onboarding, we generated the following funnel graphs.

The control funnel behaves similarly to past experiments, such as the preceding A/B test. Overall, the Special page had a 34% click-through rate. The copyedit task ("Fix spelling and grammar") was the most popular, with adding wikilinks the second most-clicked task type.

The test funnel demonstrates several interesting patterns.

- Partial CTA

  - Roughly half of users were redirected to non-editable pages or non-article pages, resulting in seeing a CTA that only invited them to "Find pages that need easy fixes" (i.e. the copyediting task).

- Direct to edit

  - Of the users who were redirected back to editable articles, a small but important minority (337 users, 3%) were already editing, and skipped all CTAs.

- Special pages

  - Another minority were viewing Special pages (587, 5%), such as search, and also skipped all CTAs.

- Full CTA

  - The rest of users (41.7%) were reading an editable article, and for these users, choosing to "Edit this page" with a guided tour was by far the most popular option (46%).

Users who saw the Full CTA had an astoundingly high rate of edits, with 51% of them completing an edit to that page. This is one of the highest conversion rates we have observed in desktop or mobile experiments; compare to new mobile editors, another successful group.

However, we also saw that users who were redirected back to non-articles and presented with the Partial CTA were far less likely to accept a task suggestion (70-80% dismissed the modal, versus around 60% for the test group overall) and to complete any edits (17% completed 1+ article edits, compared to 22% in the test group overall).

Newcomer productivity

In order to compare the productivity between the experimental conditions, we gathered statistics for users who were bucketed into each condition. We filtered out users who had JavaScript turned off (and therefore could not have seen the test condition) by limiting our analysis to users who had at least one log event stored. Note that log events for OB6 are stored via a JavaScript request.

Users in the test condition received a different experience depending on the page they were on when they signed up (see the funnel analysis above). To make a fair comparison, we split users in both conditions by this pre-registration page type (the "return type"). Because this split explains the results in more detail, we present graphs broken down by return type.

First, we look at two metrics that have become a staple for the Growth team: NS0 24h 1+ and NS0 24h 5+. Here, we see no significant differences between the cohorts for either the one article edit or five article edit thresholds. This suggests that, if OB6 changes newcomer behavior, the change isn't visible in the first 24 hours of activity.

Next, we looked at the number of sessions and amount of time that newcomers spent editing in their first week since registration. The figures below show that neither the number of sessions nor the time spent editing differs between cohorts in a significant way for their first week. Combined with the results above, it seems clear that OB6 does not substantially affect the short- or mid-term activity level of newcomers.

Next, we looked at the quality of work performed by newcomers by measuring the proportion of newcomers who managed to make an edit to an article that was not immediately reverted in their first week. Newcomers in the test condition were significantly less likely to make at least one productive contribution overall (p = 0.041). When we broke down the productive proportion by the user's return type, it seems clear that this drop in productivity occurred primarily in the case where users were redirected back to a non-Article page view (25.7% of newcomers in the test condition).

This result could suggest that a part of the process of making one's first contributions breaks down in the case that a newcomer is shown a Partial CTA (as is done on non-main pages). A logical conclusion from the analysis is that these newcomers would perform better (as measured by productive edits) if they were shown Special:GettingStarted rather than the Partial CTA.
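A difference in productive proportions between two conditions, like the one reported in this section, can be checked with a two-proportion z-test. The source does not say which test produced its p-value, so the choice of test here is an assumption, and the function below takes counts as arguments rather than reproducing the study's data.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Returns (z, p_value). Uses the pooled standard error and the normal
    approximation, so it is only appropriate for reasonably large samples.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; proportions are all 0 or all 1")
    z = (p_a - p_b) / se
    # Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

Here `successes_a` and `n_a` would be the number of newcomers with at least one productive edit and the cohort size for one condition, and likewise for the other.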

Conclusions

In this test, we set out to compare two very different workflows for onboarding new Wikipedians. In the control, we redirected all newly registered users through a page with three suggestions for editing tasks (Special:GettingStarted). We compared this to a test workflow, where users were redirected back to the page they were on prior to account creation, and then invited either to edit the current page (if possible) or to edit a suggested page that needed grammar and spelling fixes.

We made the following hypotheses:

- Hypothesis 1: Newcomers with OB6 will contribute more.

  - unsupported: Statistically speaking, newcomers in the test group made the same number of content edits as the control group, when looking at the rate at which they reached 1+ and 5+ edits to articles.

- Hypothesis 2: Newcomers with OB6 will be more likely to make productive contributions.

  - rejected: When looking at the test group as a whole, they were slightly less likely to be productive editors than the control group. This result demonstrates one of the inherent risks in a more nuanced workflow. In order to understand why, we examined more detailed sub-groups (return type) in the test and control conditions, separating them out based on what kind of page they were redirected back to.

  - We observed that the overall lower productivity seems to have been caused by one particular minority of editors: those who we redirected back to non-articles, such as Help pages and policy (25.7% of test condition users). For other users, however, there are substantial benefits to the test version. For those already editing a page when they signed up, we sent them straight back to editing. Also, 51% of those who were reading an editable article and who accepted a guided tour contributed to that page successfully, which is one of the highest conversion rates we've ever observed.

Next steps

Overall, we believe the improved flexibility of the test version outweighs the minor reduction in productivity for some sub-groups of users. Rather than forcing all users through the same experience, tailoring the UX of onboarding to the user can produce substantial benefits for the users most motivated to edit. In the future, we'll work on specific improvements to the less productive workflows.

References

- [1] R. Stuart Geiger & Aaron Halfaker (2013). When the Levee Breaks: Without Bots, What Happens to Wikipedia's Quality Control Processes? WikiSym.