Research talk:AfC processes and productivity/Work log/2014-04-14

Monday, April 14th[edit]

Today, I want to examine collaboration trends. To do this, I need to solve two problems:

- How do fairly compare an AfC world to a non-AFC world?

- What signals will collaboration (productivity) leave in the data?

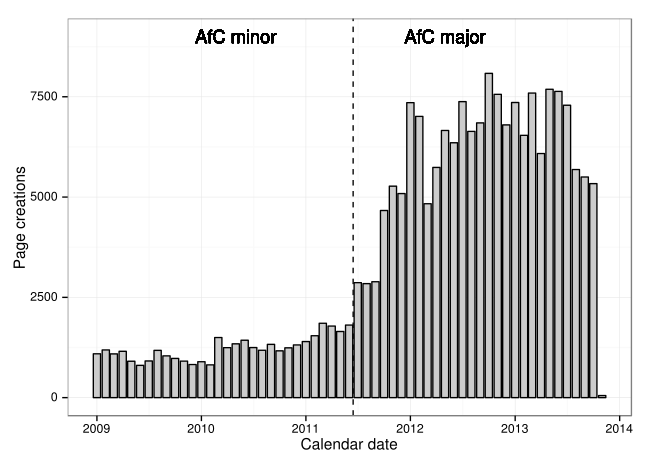

For 1, I plan to take the timespan of AFC pages and split it into two Epochs based on when newcomers began to be routed to AFC. --EpochFail (talk) 01:05, 15 April 2014 (UTC)

OK, so here's how I'd like to split the world up temporally.

Cool. So now I need to write a script to gather stats on the first month of newly created articles.

OK. I have a query for getting all relevant article pages and flagging those that were -- at some point -- included in AfC.

SELECT

page.*,

afc.page_id IS NOT NULL AS is_afc

FROM nov13_page page

INNER JOIN nov13_page_origin origin USING (page_id)

LEFT JOIN afc_page_20140331 afc USING (page_id)

WHERE

page.first_revision BETWEEN "200901" and "201311" AND

origin.original_namespace = 0 OR afc.page_id IS NOT NULL;

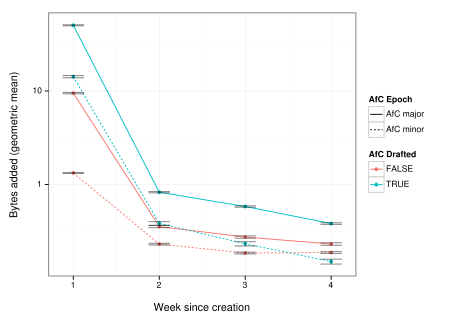

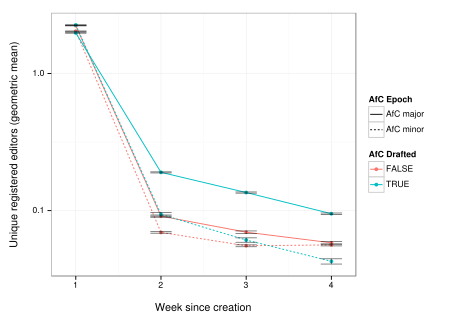

Once this is read, I can join it against revision and archive in order to gather revisions from the first N weeks and build some stats on those. Here are the stats that I think will be interesting:

- Raw number of edits

- Number of bytes added

- Number of unique registered editors

- Number of unique anon editors

Now to write the query that will get that. --EpochFail (talk) 01:55, 15 April 2014 (UTC)

I just wanted to jump in quick to share this proof of concept. It's weird, but it works. Conditional unique counting!

> SELECT

-> COUNT(DISTINCT IF(user_id > 0, user_text, NULL)) AS unique_users,

-> COUNT(DISTINCT IF(user_id = 0, user_text, NULL)) AS unique_anons

-> FROM (

-> SELECT 10 AS user_id, "foo" AS user_text

-> UNION

-> SELECT 10 AS user_id, "foo" AS user_text

-> UNION

-> SELECT 10 AS user_id, "foo" AS user_text

-> UNION

-> SELECT 11 AS user_id, "bar" AS user_text

-> UNION

-> SELECT 0 AS user_id, "192.168.1.1" AS user_text

-> UNION

-> SELECT 0 AS user_id, "192.168.1.2" AS user_text

-> UNION

-> SELECT 0 AS user_id, "192.168.1.3" AS user_text

-> ) AS fake_stuff;

+--------------+--------------+

| unique_users | unique_anons |

+--------------+--------------+

| 2 | 3 |

+--------------+--------------+

1 row in set (0.00 sec)

I had to come up with some new techniques for this one. It's gonna be pretty big, so I'm dumping it in a hidden table.

Giant SQL of Doom!

|

|---|

SELECT

page_id,

page_namespace,

page_title,

SUM(week_1_revisions) AS week_1_revisions,

SUM(week_2_revisions) AS week_2_revisions,

SUM(week_3_revisions) AS week_3_revisions,

SUM(week_4_revisions) AS week_4_revisions,

SUM(week_1_bytes) AS week_1_bytes,

SUM(week_2_bytes) AS week_2_bytes,

SUM(week_3_bytes) AS week_3_bytes,

SUM(week_4_bytes) AS week_4_bytes,

SUM(week_1_unique_anons) AS week_1_unique_anons,

SUM(week_2_unique_anons) AS week_2_unique_anons,

SUM(week_3_unique_anons) AS week_3_unique_anons,

SUM(week_4_unique_anons) AS week_4_unique_anons,

SUM(week_1_unique_users) AS week_1_unique_users,

SUM(week_2_unique_users) AS week_2_unique_users,

SUM(week_3_unique_users) AS week_3_unique_users,

SUM(week_4_unique_users) AS week_4_unique_users

FROM (

(SELECT

page_id,

page_namespace,

page_title,

SUM(DATEDIFF(rcurr.rev_timestamp, first_revision) < 7) AS week_1_revisions,

SUM(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 8 AND 13) AS week_2_revisions,

SUM(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 14 AND 20) AS week_3_revisions,

SUM(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 21 AND 27) AS week_4_revisions,

SUM(

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) < 7,

CAST(rcurr.rev_len AS INT) - CAST(rprev.rev_len AS INT),

0)

) AS week_1_bytes,

SUM(

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 8 AND 13,

CAST(rcurr.rev_len AS INT) - CAST(rprev.rev_len AS INT),

0)

) AS week_2_bytes,

SUM(

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 14 AND 20,

CAST(rcurr.rev_len AS INT) - CAST(rprev.rev_len AS INT),

0)

) AS week_3_bytes,

SUM(

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 21 AND 27,

CAST(rcurr.rev_len AS INT) - CAST(rprev.rev_len AS INT),

0)

) AS week_4_bytes,

COUNT(DISTINCT

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) < 7 AND rcurr.rev_user > 0,

rcurr.rev_user_text,

NULL)

) AS week_1_unique_users,

COUNT(DISTINCT

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) < 7 AND rcurr.rev_user = 0,

rcurr.rev_user_text,

NULL)

) AS week_1_unique_anons,

COUNT(DISTINCT

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 8 AND 13 AND rcurr.rev_user > 0,

rcurr.rev_user_text,

NULL)

) AS week_2_unique_users,

COUNT(DISTINCT

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 8 AND 13 AND rcurr.rev_user = 0,

rcurr.rev_user_text,

NULL)

) AS week_2_unique_anons,

COUNT(DISTINCT

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 14 AND 20 AND rcurr.rev_user > 0,

rcurr.rev_user_text,

NULL)

) AS week_3_unique_users,

COUNT(DISTINCT

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 14 AND 20 AND rcurr.rev_user = 0,

rcurr.rev_user_text,

NULL)

) AS week_3_unique_anons,

COUNT(DISTINCT

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 21 AND 27 AND rcurr.rev_user > 0,

rcurr.rev_user_text,

NULL)

) AS week_4_unique_users,

COUNT(DISTINCT

IF(DATEDIFF(rcurr.rev_timestamp, first_revision) BETWEEN 21 AND 27 AND rcurr.rev_user = 0,

rcurr.rev_user_text,

NULL)

) AS week_4_unique_anons

FROM halfak.nov13_limited_page

INNER JOIN revision rcurr USE INDEX (page_timestamp) ON

rcurr.rev_page = page_id AND

rcurr.rev_timestamp <= DATE_FORMAT(DATE_ADD(first_revision, INTERVAL 28 DAY), "%Y%m%d%H%i%S")

LEFT JOIN revision rprev ON

rcurr.rev_parent_id = rprev.rev_id

GROUP BY 1,2,3)

UNION

(SELECT

page_id,

page_namespace,

page_title,

SUM(DATEDIFF(acurr.ar_timestamp, first_revision) < 7) AS week_1_revisions,

SUM(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 8 AND 13) AS week_2_revisions,

SUM(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 14 AND 20) AS week_3_revisions,

SUM(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 21 AND 27) AS week_4_revisions,

SUM(

IF(DATEDIFF(acurr.ar_timestamp, first_revision) < 7,

CAST(acurr.ar_len AS INT) - CAST(aprev.ar_len AS INT),

0)

) AS week_1_bytes,

SUM(

IF(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 8 AND 13,

CAST(acurr.ar_len AS INT) - CAST(aprev.ar_len AS INT),

0)

) AS week_2_bytes,

SUM(

IF(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 14 AND 20,

CAST(acurr.ar_len AS INT) - CAST(aprev.ar_len AS INT),

0)

) AS week_3_bytes,

SUM(

IF(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 21 AND 27,

CAST(acurr.ar_len AS INT) - CAST(aprev.ar_len AS INT),

0)

) AS week_4_bytes,

COUNT(DISTINCT

IF(DATEDIFF(acurr.ar_timestamp, first_revision) < 7 AND acurr.ar_user > 0,

acurr.ar_user_text,

NULL)

) AS week_1_unique_users,

COUNT(DISTINCT

IF(DATEDIFF(acurr.ar_timestamp, first_revision) < 7 AND acurr.ar_user = 0,

acurr.ar_user_text,

NULL)

) AS week_1_unique_anons,

COUNT(DISTINCT

IF(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 8 AND 13 AND acurr.ar_user > 0,

acurr.ar_user_text,

NULL)

) AS week_2_unique_users,

COUNT(DISTINCT

IF(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 8 AND 13 AND acurr.ar_user = 0,

acurr.ar_user_text,

NULL)

) AS week_2_unique_anons,

COUNT(DISTINCT

IF(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 14 AND 20 AND acurr.ar_user > 0,

acurr.ar_user_text,

NULL)

) AS week_3_unique_users,

COUNT(DISTINCT

IF(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 14 AND 20 AND acurr.ar_user = 0,

acurr.ar_user_text,

NULL)

) AS week_3_unique_anons,

COUNT(DISTINCT

IF(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 21 AND 27 AND acurr.ar_user > 0,

acurr.ar_user_text,

NULL)

) AS week_4_unique_users,

COUNT(DISTINCT

IF(DATEDIFF(acurr.ar_timestamp, first_revision) BETWEEN 21 AND 27 AND acurr.ar_user = 0,

acurr.ar_user_text,

NULL)

) AS week_4_unique_anons

FROM halfak.nov13_limited_page

INNER JOIN archive acurr USE INDEX (page_timestamp) ON

acurr.ar_page_id = page_id AND

acurr.ar_timestamp <= DATE_FORMAT(DATE_ADD(first_revision, INTERVAL 28 DAY), "%Y%m%d%H%i%S")

LEFT JOIN archive aprev ON

acurr.ar_parent_id = aprev.ar_rev_id

GROUP BY 1,2,3)

) AS unioned_page_stats

GROUP BY 1,2,3;

|

Now, as soon as my indexes are ready, I'm gonna kick this off and go to bed. --EpochFail (talk) 02:47, 15 April 2014 (UTC)

So, it's tomorrow, but I'm going to keep working from this entry because I need to start the day job soon anyway. The query finished over night, but now I'm stuck with the problem of having too much data. I have about 6 million rows and I'd like to have about 500k. Right now I'm running a query to join all the relevant tables together and gather a 1/10th sample for generating stats. Regretfully, I don't think I'll have this ready for my meeting with Jodi.a.schneider, so we're going to have to talk framing rather than results. Boo. --EpochFail (talk) 13:30, 15 April 2014 (UTC)

I have data!

$ wc datasets/limited.page_stats.sample.tsv 734519 18362975 74946310 datasets/limited.page_stats.sample.tsv

--EpochFail (talk) 13:43, 15 April 2014 (UTC)

Results[edit]

Results! Woo!