Research talk:Measuring edit productivity/Work log/2014-12-5

Friday, December 5, 2014

It's been a little while. I have been working with Hadoop and trying to build up some robust strategies for working with diff and persistence data. I made substantial progress in working out how I can slice wiki actions along different dimensions. See my blog post on the subject.

TL;DR: I can do Unix-style stream processing in Hadoop, and I can use Hadoop's secondary sort to cut up data by dimensions. Woot.

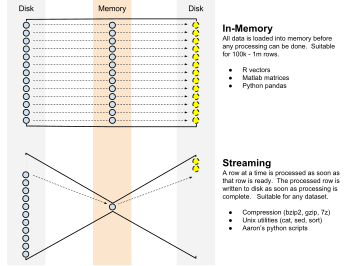
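Hadoop streaming runs the mapper and reducer as ordinary programs that read stdin and write stdout, and sorts the mapper output by key before it reaches the reducer, so a streaming job can be rehearsed locally as `mapper | sort | reducer`. A minimal sketch, assuming made-up tab-separated rows of `page_id<TAB>timestamp<TAB>rev_id` (not real dump data) and a hypothetical helper name: with a single reducer, one sort on page ID and then timestamp has the same effect as a secondary sort, so the `awk` reducer sees each page's revisions together and oldest first, and can emit each page's creating revision.

```shell
#!/bin/sh
# Rehearse a Hadoop-streaming style "map | sort | reduce" job locally.
# Toy input rows (hypothetical): page_id <TAB> timestamp <TAB> rev_id
set -eu

first_revision_per_page() {
  printf '%s\t%s\t%s\n' \
    2 2014-03-01T00:00:00Z 30 \
    1 2014-02-01T00:00:00Z 20 \
    2 2014-01-15T00:00:00Z 15 \
    1 2014-01-01T00:00:00Z 10 |
  # "Shuffle": group by page_id (numeric), then order by timestamp within each page.
  LC_ALL=C sort -t "$(printf '\t')" -k1,1n -k2,2 |
  # "Reduce": a page's rows arrive together, oldest first,
  # so the first row seen for each page is its creating revision.
  awk -F '\t' 'NR == 1 || $1 != last { print $1 "\t" $3; last = $1 }'
}

first_revision_per_page
```

The reducer only has to remember the previous key, which is what makes the sorted-stream approach cheap: no page's history ever needs to be held in memory.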

Now I'm working on building up a library of streaming utilities that will let me slice and dice MediaWiki database dumps. See the repo.

Right now, the pipeline for getting to persistence stats looks like this:

```shell
cat mw_xml_dump.xml | \
  dump2json | \
  json2tsv page.id timestamp id - | \
  sort -k1,1n -k2,2 -k3,3n | \
  cut -f4 | \
  json2diff | \
  <token_persistence> | \
  json2tsv id - | \
  sort -k1,1n | \
  <revision_stats>
```

I've put `<token_persistence>` and `<revision_stats>` in angle brackets because those scripts have yet to be written. Writing this out is helping me think about how they will work.

- `dump2json`: Converts a MediaWiki XML dump to a sequence of JSON blobs, each representing a revision, with this schema

- `json2tsv page.id timestamp id -`: Extracts fields from each JSON blob into TSV columns. The "-" emits the whole JSON blob, so here it becomes the 4th column.

- `sort -k1,1n -k2,2 -k3,3n`: Partitions by page ID and, within each page, sorts revisions by timestamp ASC, then revision ID ASC.

- `cut -f4`: Drops every TSV column except the JSON one, leaving plain ol' JSON.

- `json2diff`: Generates diff information. This is the *very* CPU-intensive part of the work.

- `<token_persistence>`: Generates statistics about how tokens persist in a page. This is a *large* dataset: it will have a record for every token that was ever added to any page.

- `json2tsv id -`: Extracts the revision ID field from each token persistence JSON blob, keeping the blob itself as the 2nd column.

- `sort -k1,1n`: Partitions by revision ID.

- `<revision_stats>`: Generates statistics about how a revision's tokens persisted overall. This will enable quality/productivity measures.
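Since `<revision_stats>` isn't written yet, this is only a minimal sketch of the reduce-by-revision idea. It assumes a hypothetical TSV form of the token persistence records, `rev_id<TAB>token<TAB>revisions_persisted` (how many later revisions the token survived); the real input will be whatever `<token_persistence>` ends up emitting. Once rows are sorted by revision ID, one pass can emit a summary row per revision: the number of tokens it added and the mean number of revisions those tokens persisted.

```shell
#!/bin/sh
# Sketch of a <revision_stats>-style reducer (hypothetical; the real script is unwritten).
# Assumed input rows: rev_id <TAB> token <TAB> revisions_persisted
# Output rows: rev_id <TAB> tokens_added <TAB> mean_revisions_persisted
set -eu

revision_stats() {
  LC_ALL=C sort -t "$(printf '\t')" -k1,1n |
  awk -F '\t' -v OFS='\t' '
    # Print the summary for the revision whose rows we just finished reading.
    function flush() { if (n > 0) print rev, n, sum / n }
    NR == 1 || $1 != rev { flush(); rev = $1; n = 0; sum = 0 }
    { n++; sum += $3 }
    END { flush() }
  '
}

printf '%s\t%s\t%s\n' \
  101 foo 3 \
  100 hello 5 \
  101 bar 0 \
  100 world 1 |
  revision_stats
```

Like the sort/cut steps, this never holds more than one revision's running totals in memory, so it should scale to the full token persistence dataset.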

My plan is to output a full dataset at every step to allow for debugging and reuse. We might not output the `<token_persistence>` dataset because it will be too big. Then again, we have a whole cluster to hold the data, and it would be really cool to be able to re-process it. --Halfak (WMF) (talk) 20:33, 5 December 2014 (UTC)
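Keeping a full dataset at every step doesn't require restructuring the pipeline: `tee` can write each stage's output to a file while still streaming it on to the next stage. A minimal sketch of that pattern, where `printf` stands in for `dump2json | json2tsv page.id timestamp id -` and the output file names are hypothetical:

```shell
#!/bin/sh
# Persist each intermediate stage with tee while streaming it onward.
# printf stands in for: dump2json | json2tsv page.id timestamp id -
set -eu

out_dir="$(mktemp -d)"

printf '%s\t%s\t%s\t%s\n' \
  2 2014-03-01T00:00:00Z 30 '{"id": 30}' \
  1 2014-01-01T00:00:00Z 10 '{"id": 10}' |
  tee "$out_dir/revisions.tsv" |
  LC_ALL=C sort -t "$(printf '\t')" -k1,1n -k2,2 -k3,3n |
  tee "$out_dir/revisions.sorted.tsv" |
  cut -f4 > "$out_dir/revisions.sorted.json"

ls "$out_dir"
```

Each saved file can later be fed back into the remaining stages, which is what makes re-processing possible without re-running the expensive upstream steps.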