Research talk:Task recommendations

Work log

Thursday, October 2nd

Given the low number of observations, I'm using a beta distribution to approximate the error around proportion measurements. This results in some unusual-looking error bars, where the observed proportion can fall outside of them. This is a more honest representation of the underlying uncertainty, though, so I'll risk the confusion.
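To illustrate how an observed proportion can land outside a beta-based error bar, here is a minimal sketch. It assumes a uniform Beta(1, 1) prior and approximates the interval by sampling; the prior, the function name `beta_interval`, and the counts are assumptions for illustration, not necessarily what was used for the plots on this page.

```python
import random


def beta_interval(successes, trials, level=0.95, draws=20000, seed=0):
    """Approximate a central interval for a proportion by sampling.

    Assumes a uniform Beta(1, 1) prior (an assumption of this sketch), so the
    distribution sampled is Beta(successes + 1, trials - successes + 1).
    """
    rng = random.Random(seed)
    a = successes + 1
    b = trials - successes + 1
    samples = sorted(rng.betavariate(a, b) for _ in range(draws))
    tail = (1.0 - level) / 2.0
    lower = samples[int(tail * draws)]
    upper = samples[int((1.0 - tail) * draws) - 1]
    return lower, upper


# Hypothetical small sample: 0 acceptances out of 10 users.
observed = 0 / 10
lower, upper = beta_interval(0, 10)
print(f"observed={observed:.3f}, interval=({lower:.3f}, {upper:.3f})")
```

With zero successes the observed proportion is exactly 0, but the lower bound of the central interval is strictly positive, so the point sits below its own error bar.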

At what rate do users see recommendations?

Here, we can see a clear difference between the flyout-only condition and the conditions that contain post-edit. There's even a significant difference between the rates at which users saw recommendations in the "both" and "post-edit" conditions for large wikis. This is likely due to users clicking on the "Recommendations" link before they have edited an article.

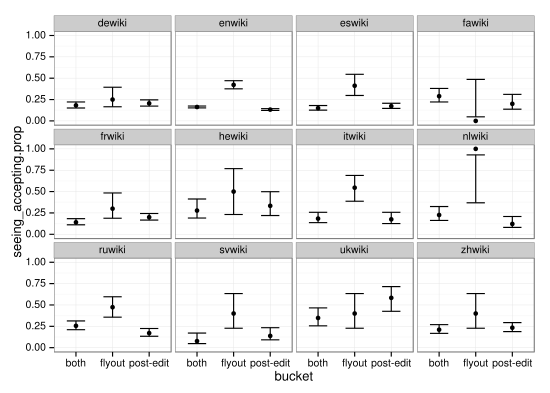

At what rate do users accept recommendations?

Here, we see roughly the same trends that we saw with impressions. This is probably because seeing a recommendation is required for accepting it. Let's look at the conditional proportion next.

At what rate do users who see recommendations accept (at least one) recommendation?

Here, we can see "flyout" shining in the way that we suspected. For large wikis, the flyout condition sees a substantially larger proportion of users who saw a recommendation clicking (accepting) at least one. There are two potential explanations that I can think of:

- (Ready for recommendation) When users ask for recommendations, they are in a better position to accept them.

- (Propensity) Users who notice the "recommendations" link on the personal bar are simply more likely to accept a recommendation.
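The difference between the raw acceptance rate and the conditional one can be sketched as follows. This is a minimal illustration with made-up counts; the `UserOutcome` record and its field names are hypothetical and do not come from the experiment's data.

```python
from dataclasses import dataclass


@dataclass
class UserOutcome:
    # Hypothetical per-user flags, for illustration only.
    saw_recommendation: bool
    accepted_recommendation: bool


def acceptance_rates(users):
    """Return (raw rate, rate conditional on having seen a recommendation)."""
    impressed = [u for u in users if u.saw_recommendation]
    accepted = [u for u in impressed if u.accepted_recommendation]
    raw = len(accepted) / len(users) if users else 0.0
    conditional = len(accepted) / len(impressed) if impressed else 0.0
    return raw, conditional


# Made-up cohorts of 50 users each.
flyout = (
    [UserOutcome(True, True)] * 3
    + [UserOutcome(True, False)] * 2
    + [UserOutcome(False, False)] * 45
)
post_edit = (
    [UserOutcome(True, True)] * 6
    + [UserOutcome(True, False)] * 24
    + [UserOutcome(False, False)] * 20
)

flyout_raw, flyout_cond = acceptance_rates(flyout)
post_raw, post_cond = acceptance_rates(post_edit)
print(f"flyout:    raw={flyout_raw:.2f}, conditional={flyout_cond:.2f}")
print(f"post-edit: raw={post_raw:.2f}, conditional={post_cond:.2f}")
```

In this made-up data, post-edit has the higher raw rate because far more users see a recommendation, while flyout has the higher conditional rate. Both explanations above are consistent with that pattern; the calculation alone cannot tell them apart.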

At what rate do users edit recommended articles?

Like we saw when looking at the raw impression rate and acceptance rate, we see post-edit conditions resulting in a higher rate of edited recommended articles. I don't think this is telling us much.

At what rate do users who accepted recommendations edit at least one recommendation?

Here, we're looking at conditional probabilities again. It looks like editors were not significantly more likely to edit a recommended article that they accepted in the flyout condition. This runs counter to my hypothesis that users would get better recommendations in the flyout condition and would therefore be more likely to follow through with an edit.
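One way to judge whether two conditional proportions differ is to sample from a beta distribution for each condition and count how often one exceeds the other. The sketch below assumes uniform Beta(1, 1) priors and uses hypothetical counts; it is not the experiment's data and not necessarily the test behind the statement above.

```python
import random


def prob_a_exceeds_b(a_successes, a_trials, b_successes, b_trials,
                     draws=20000, seed=0):
    """Estimate P(rate_a > rate_b) by sampling two beta distributions.

    Assumes a uniform Beta(1, 1) prior for each rate (an assumption of this
    sketch, not a documented choice of the analysis).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(a_successes + 1, a_trials - a_successes + 1)
        rate_b = rng.betavariate(b_successes + 1, b_trials - b_successes + 1)
        if rate_a > rate_b:
            wins += 1
    return wins / draws


# Hypothetical counts: editors among users who accepted a recommendation.
p_flyout_higher = prob_a_exceeds_b(4, 10, 9, 30)
print(f"P(flyout rate > post-edit rate) is roughly {p_flyout_higher:.2f}")
```

With samples this small, the estimated probability stays well short of a conventional cutoff such as 0.975, even though the hypothetical flyout rate (4/10) is nominally higher than the post-edit rate (9/30).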

End

That's all for today folks. --Halfak (WMF) (talk) 22:24, 2 October 2014 (UTC)

Discussion

Task similarity vs. topic similarity

I suspect that whether the hypotheses are validated depends heavily on the quality and nature (micro-contribution or not) of what is recommended. For example, for new users, the results can be quite different depending on whether the recommendation is "We noticed you fixed a typo. Here is another article that needs a typo fix." or "We noticed you edited an article on polar bears. We think you may be interested in editing the Global warming article." Given that the target audience is newcomers, it would be good to try different types of recommendations (micro and very specific vs. general article suggestions). LZia (WMF) (talk) 15:39, 19 August 2014 (UTC)

- +1 Good points. Right now, we're looking at personalization based on topic similarity -- not task similarity. This would be a good thing to specify and it would be good to state some hypotheses about task similarity. Thanks! --Halfak (WMF) (talk) 09:50, 26 August 2014 (UTC)

TODO: Add RQ about task similarity (as opposed to just work templates).

A few questions

Thanks for putting together this detailed documentation. A few questions below:

- Target

- Is this proposal meant to design recommender systems targeted at any registered user, newly registered users/new editors only or anyone including anons? The wording (starting from the nutshell) is a bit inconsistent and we now have some standardized terminology on user classes we can try to adopt.

- Good Q. Right now, we're looking at newly registered users, but I think that asking the research questions and stating the hypotheses broadly (e.g. for all editors) is a good idea right now. We can report results based on different sub-populations. --Halfak (WMF) (talk) 09:50, 26 August 2014 (UTC)

- Task design

- It strikes me that the proposed recommendation is about editing a similar article; it is not really formulated as a task. I was wondering if you had plans to test recommendations based on topic and task type or only use similarity-based recommendations (e.g. "Edit a similar article"). If anything, it would be a really interesting research question to figure out if task-specific recs combined with similarity are more or less effective than recs based on similarity alone (more below).

- Indeed. We discussed this and it looks like recommending based on topic similarity is the easiest first step to explore, but we *should* be able to filter recommended articles by task categories with ElasticSearch with a little bit more engineering work according to NEverett. We've delayed doing that for the first set of experiments, but I think that will be a crucial set of additional research questions to explore.

TODO: Add RQ about recommending articles with work templates.

- Personalization

- I couldn't find a definition of what is meant by personalized vs non-personalized. It would be good to expand a little bit on assumptions on how editor interest areas are modeled.

TODO: Clarify type of personalization (topic similarity).

- Edit classification

- Is there any plan in the pipeline to classify the type of edit an editor just made and deliver a matching recommendation on the fly, via one of the proposed delivery mechanisms? For example, if an edit adds a citation or an image or a category, removes vandalism, fixes a typo, or adds structured data via a template, could this be detected alongside the topic area to generate more personalized recommendations?

- Nothing in the pipeline, but worth talking about. See LZia (WMF)'s comment and my todo item above. --Halfak (WMF) (talk) 09:50, 26 August 2014 (UTC)

- Features of an ideal article recommendation

- I'd love it if we could expand here (or as part of a follow-up project) and summarize existing research on which features of an article or an article's editing population (crowding, number of watchers, controversiality etc.) are good predictors of a newbie's subsequent engagement. In other words, how do we find the best possible articles that drive early engagement within a newbie's areas of interest?

--DarTar (talk) 22:35, 19 August 2014 (UTC)

- This seems like a bigger question. I bet that Nettrom has some things to say. Hopefully, he'll see my ping. :) --Halfak (WMF) (talk) 09:50, 26 August 2014 (UTC)

Results?

In what time frame will there be results of Research:Task recommendations/Experiment one? --Atlasowa (talk) 13:49, 21 October 2014 (UTC)

- Same question here. We've been serving a big popup after every edit to all new users since... September? Do you have enough data for this research? If you did, but there isn't enough analysis bandwidth now, it would be wise to disable the recommendations until they can be evaluated. --Nemo 08:01, 6 November 2014 (UTC)

- @Halfak (WMF): @Nemo bis: This is not accurate. Only people in a certain brief two-week date range could even have seen the post-edit (and even they were bucketed, so not all did). See https://git.wikimedia.org/blob/mediawiki%2Fextensions%2FGettingStarted.git/9395b3c0df01adf2c64cde32a342b858e7ff70e1/TaskRecommendationsExperimentV1.php#L26 . New users registering after the end date did not see it. Mattflaschen (WMF) (talk)

Ping @ Halfak (WMF) ;-) --Atlasowa (talk) 16:05, 7 November 2014 (UTC)

- Thanks for the ping Nemo & Atlasowa. This project ended up in a weird spot due to deconstruction of the Growth team and that's why the write-up was not completed. The gist is that while user-testing showed that people appreciated the recommendations, the experiment concluded no effect on newcomer behavior/productivity. I think that the primary reason that recommendations were ineffectual was because they were relatively hidden from the user. To WMF Product, I've advocated keeping recommendations available as a beta feature and performing a follow-up study to make the availability of recommendations more salient.

- Regretfully, I'm booked solid for the next couple of weeks, so a proper summary of the results is still far out. I'll let you know as soon as I have something ready for review. --Halfak (WMF) (talk) 16:52, 7 November 2014 (UTC)

- Ok. Thank you very much for the interesting sneak preview of the results. It seems to be implied that enough data was collected, so I'll proceed to ask for the feature to be disabled. It can be re-enabled when requirements/benefits are defined, but the original purpose of conducting an experiment seems to be over. --Nemo 14:23, 9 November 2014 (UTC)

- Hi Halfak (WMF), thanks a lot for the information! Some comments:

- You can read some feedback on German WP concerning Task recommendations, de:Wikipedia_Diskussion:Kurier/Archiv/2014/09#Empfehlungen, mostly from long-term users that were targeted by mistake. user:Gestumblindi appreciated that the tool was aimed at content contribution ("right direction"), not at UI cosmetics (I agree absolutely). These users mostly didn't think the recommendations were relevant or useful to them and found them annoying. At the time it was also rather unclear what the Task recommendations were: a new WMF feature, a research project, or a beta feature... If this is supposed to come back as a beta feature, the WMF should be very, very clear on what the objective is: Testing a beta that will become default? For whom? Or collecting feedback for improvement... for whom, at WMF? And for how long will this be a beta feature? What is the intended audience? What is the goal?

- "the experiment concluded no effect on newcomer behavior/productivity. I think that the primary reason that recommendations were ineffectual was because they were relatively hidden from the user." Hm, if they had no effect on newcomer behavior/productivity except for being a bit annoying, this does not mean they should be made more annoying!

- I think it is not WMF's job to give tasks to users. It is WMF's job to give tools to users to choose their own tasks. For instance, user:Superbass proposed a different recommendation: "Du interessierst Dich für Schifffahrt in Norwegen? Schau mal in unsere Kategorie dazu, dort findest Du mehr zum Thema" ("You're interested in Norwegian ships? Find more articles on the topic in the corresponding category"). One of the longstanding bugzilla requests is Bug 7148 - Requesting watchlist for changes to category content (since 2006!!). You find this and 2 more related feature requests on the deWikipedia Top20 technical wishlist (de:Wikipedia:Umfragen/Technische Wünsche/Top 20: #3 #10 #20), compiled 1 year ago. In short, we use categories for topical interest and day-to-day maintenance. MediaWiki does not support that, so there are several lifesaving tools like catscan, or Merlbot or erwin85/relatedchanges or CatWatch or OgreBot@commons etc. WMF has failed miserably to support this watching by category. I believe this Task recommendations tool is going in the general direction of an interest graph. But Wikipedia already has an interest graph: it's the watchlist! And, even more important: The user watchlists are the engine of Wikipedia. Watchlists are about the content, not like the colourful me-me-me notifications that currently grub away the new user attention (Research:Notifications/Experiment_1). --Atlasowa (talk) 21:49, 10 November 2014 (UTC)

- @Atlasowa: If I recall correctly, the long-term users were targeted due to a temporary bug (fixed shortly after the experiment started). Mattflaschen (WMF) (talk) 03:31, 14 November 2014 (UTC)

- @Mattflaschen (WMF): you do recall correctly, see bug 70759.

- The thing is: Long-term editors know how to give feedback, and where, and they can even use bugzilla. New editors cannot give feedback; they don't know how, where or even that they are supposed to give feedback (and apparently they aren't supposed to). They can live with (or be distracted by) annoying popups or they can leave the place. Long-term editors are the ones that can tell you if the feature is not helpful and annoying. So, for WMF's solely "experimenting with the newbies", the nice thing is: no negative feedback. The bad thing is, the experiment can fuck things up without anyone noticing. Maybe you will notice, if you have chosen the relevant metrics beforehand, and if you do the analysis, and if you interpret the data correctly, and if you don't dismiss the inopportune results of the analysis. For example, user feedback says they feel patronized by the software - how do you track that?

- Two months ago, Steven (WMF) announced: "It will run for two weeks, then we will be turning it off in order to analyze whether it helped new Wikipedians or not."

- Two months later Mattflaschen writes: "The fact that the experiment is done does not mean removing this code had no effect. It was designed to temporarily continue showing the same experience to these users." --Atlasowa (talk) 11:06, 14 November 2014 (UTC)