Wikimedia monthly activities meetings/Quarterly reviews/Analytics/September 2014

The following are notes from the Quarterly Review meeting with the Wikimedia Foundation's Analytics team, September 25, 2014, 9:30AM - 11:00AM PDT.

- Attending

Present (in the office): Erik Möller, Anasuya Sengupta, Rachel diCerbo, Ellery Wulczyn, Leila Zia, Toby Negrin, Dario Taraborelli, Lila Tretikov, Kevin Leduc, Tilman Bayer (taking minutes), Carolynne Schloeder, Howie Fung, Jeff Gage, Abbey Ripstra, Daisy Chen; participating remotely: Aaron Halfaker, Jonathan Morgan, Oliver Keyes, Dan Andreescu, Andrew Otto, Christian Aistleitner, Nuria Ruiz, Arthur Richards, Erik Zachte

Please keep in mind that these minutes are mostly a rough transcript of what was said at the meeting, rather than a source of authoritative information. Consider referring to the presentation slides, blog posts, press releases and other official material.

[slide 2]

Agenda

1. Welcome/Agenda

2. Performance against goals

3. Research and Data (includes Q&A)

4. Development (includes Q&A)

5. Summary/Asks

Toby: Welcome

This has been a very collaboratively written slide deck

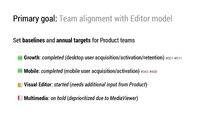

[slide 3]

priorities:

standardize baseline metrics

focus on editors this quarter, then readers

hit most of those goals

on dev side, focused on dashboard and vital signs

[slide 4]

[slide 5]

Research and Data

Dario:

Q1 goals

[slide 10]

Team started in Q1 on new editor metrics

Q2, Q3...

[slide 11]

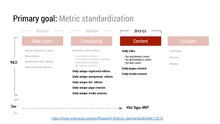

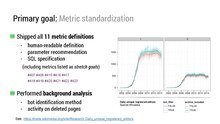

goal: ship definitions (human readable and machine readable version)

involves identifying bots,....

[slide 12]

alignment with editor model (for Growth and Mobile teams)

began work with Multimedia team, but on hold

[slide 13]

progress towards traffic definitions (pageviews)

both project and article level metrics (community cares a lot about the latter)

Oliver: including mobile views...

(Dario:)

unique client definition (on hold)

Lila: do we have a path to get unblocked?

Dario, Toby: yes

[slide 14]

(Dario:)

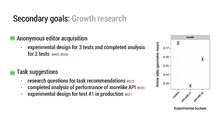

Growth research - led by Aaron

anonymous editor acquisition, task suggestions

[slide 15]

did extensive analysis of mobile app traffic

mobile traffic and participation trends, effect of tablets switchover

Lila: in this chart, editor activated on mobile means what?

Dario: registered on mobile device[?], and makes 5 edits within 24h
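As a rough illustration of the definition Dario gives here (itself marked as uncertain in these minutes), the check could be sketched as follows. The function name, its parameters, and the choice to count the 24 hours from the moment of registration are assumptions made for this example, not the team's actual implementation.

```python
from datetime import datetime, timedelta


def is_activated(registered_at, edit_times, min_edits=5,
                 window=timedelta(hours=24)):
    """Return True if the account made at least `min_edits` edits
    within `window` of registering.

    Hypothetical helper: the threshold (5) and window (24h) come from
    the minutes; everything else is an assumption.
    """
    cutoff = registered_at + window
    # Count only edits made after registration and before the cutoff.
    edits_in_window = [t for t in edit_times if registered_at <= t < cutoff]
    return len(edits_in_window) >= min_edits
```

For example, an account with edits at 1, 2, 3, 4 and 25 hours after registration would not count as activated under this reading, because only four edits fall inside the window.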

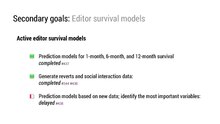

[slide 16]

Prediction models for survival periods (worked on this earlier this year, still needs final completion)

Lila: (question about time limitation)

Leila: this is from 2001 onwards

Lila: wonder about the 5-edit threshold, what about someone who does 100 edits in a short burst? What was the rationale to focus on this metric (active editors)?

in the end, what matters is content - whether it is being created and kept up to date

Howie: should have a session examining what this metric is good for (and what not) - there has been a lot of research about this in recent years

Lila: also, e.g. Commons will have different usage patterns

Dario: yes, we mostly do these analyses on a per-project basis

Lila: ...

[slide 17]

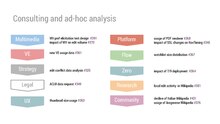

Dario: also, supported a number of other teams with smaller requests, in consultation mode

This slide is actually not comprehensive, need to get better at capturing everything

Lila: this kind of "response train" is normal, it's not bad that requests are coming

but needs to be managed

Toby: sometimes Oliver and Leila were hit by 3 things at once...

[slide 18]

Dario: Team process:

joint standups with other teams

Suffer from lack of scrum master, project management support

Lila: where are the inefficiencies - when interacting with other teams, or within team?

Dario: both

Leila: btw, there are also community requests, not just other WMF teams

Lila: for capacity planning, it would be more of a project[product ?] management role which requires deeper understanding, scrum master is more for supporting workflow

Erik: this is the kind of thing I have asked Arthur to support/advise on (team practices)

Lila: and sometimes it's ok to share that role within team

Toby: but I need my people (like Dario) to focus on their skills

[slide 19]

Dario:

Formal collaborations/hiring

onboarded Ellery as Fundraising (FR) analyst

started collaboration with Morten (external researcher) on task recommendations

Aaron: Morten is processing data on task recommendations right now

(Dario:)

collaboration with LANL on anonymizing pageview data - on hold

opened traffic research analyst position - on hold

[slide 20]

Outreach: Wikimania, showcases, ...

[slide 22]

Q2 goals

Lila: coming out of standardization process, what gaps do we still have?

Dario: have specifications and raw data, not yet the numbers

[slide 23]

strategic research: close to our strategic priorities

APIs, also for external research

Lila: anonymized?

(Dario:)

This is mostly about data that is public anyway

product research:

discontinue "embedded" model

[slide 23]

strategic research

understanding reader behavior - is becoming a priority

detect knowledge gaps

value-based measures - go beyond mere edit count

microcontributions

uplevel the FR experimental strategy

Anasuya: reader behavior - will this differentiate between mature/ small wikis?

Dario: look for definitions that work across the board

but e.g. Wikidata differs a lot

Anasuya: I'm thinking about differences between Wikipedias, too

Lila: need to wrap up Q1 work

goals on this slide make a lot of sense to me

Dario:

[slide 24]

Data services

A/B testing

Lila: Core team leading this? yes

Public data sources:

this is making already public data more accessible - e.g. revert metadata: would need lots of manual work now

Lab tools (stretch goal)

[slide 25]

Product support

move toward consulting model

should allow team to allocate resources more flexibly

Lila: should require advance notice from Engineering / Product teams

avoid resetting priorities

Erik: already have a cross-team process

Toby: both Dan and Andrew have chaired this

Erik: moving from long-term (yearly) goal-setting to quarterly priority setting, have separate meetings for this

Toby: get into a data/metrics driven mindset

Lila: no project should start without having a way to measure impact

Erik: need to be aware of whether it's possible or not, which data expectation is realistic

it's already the case that all the current priorities have measurable goals

Toby: suggest separate conversation on this

[slide 26]

Dario:

some support for IEG

Outreach:

Leila: Aaron does a lot of this (outreach)

Lila: it's OK for experiments, but does it scale?

Anasuya: also, brings external researchers into contact with our community much faster

[slide 27]

[slide 28]

staff outlook

slides 29-35: appendix (skipped, can take this offline)

Engineering

Kevin:

[slide 39]

main goal was to implement dashboard - accomplished

break down by target site (desktop/mobile/apps) - not reached

blocked on ...

[slide 40]

UV (Unique Visitors): blocked on tech proposal

[slide 41]

[slide 42]

Vital Signs: Value prop

[slide 43]

demo https://metrics.wmflabs.org/static/public/dash/ , e.g. newly registered on Armenian WP, rolling surviving new editors

Lila: I'm going to play with this... can we note the definitions at the bottom?

Dario: they are on Meta, just need to cross-link

Toby: check out Wikidata, compare to ENWP

this may represent a future trend: a lot more smaller edits (some of them by machines)

(Kevin:)

[slide 44]

Vital Signs: backlog

lots of feedback coming in

[slide 45]

Wikimetrics:

builds the data that Vital Signs uses

value prop:...

[slide 46]

Wikimetrics accomplishments: ...

a lot more robust than cronjobs

Lila: allows us to identify fast growing/slow growing projects? yes

(Kevin:)

[slide 47]

backlog:

new hire will take on backlog for Grantmaking

extend to CentralAuth (users with edits on several wikis)

Lila: so that's a filter by user name (instead of project)? yes

Kevin:

tagging cohorts - classify

Lila: tag reports too?

Kevin: yes, we could do that

Lila: just want to make sure we do this in a general way

Kevin: add ability to remove users from cohort for privacy reasons

Lila: can we also integrate usage (traffic) data?

Toby: Wikimetrics is not primarily about that

friction is between more functionality desired by Grantmaking and...

Anasuya: content metrics are really important for us too, but that's separate

Toby: this is pretty general (using off-the-shelf components, e.g. Hadoop),

might be able to quickly plug it into other databases as well, e.g. pageviews

Dan: ...

also interesting: Yuvi's Quarry (allows db queries via web interface on Labs)

Oliver (on chat): +1. Quarry is awesome.

Jonathan (on chat): incredibly useful

[slide 48]

Kevin:

Eventlogging

publicly documented schemas

[slide 49]

Backlog

maintenance, sanitize data

[slide 50]

Refinery

data processing ecosystem, addresses dissatisfaction with data quality

[slide 51]

accomplishments: operational since July

a lot of cooperation with Ops team

turned off legacy data feeds (after contacting people) - no complaints so far

port Webstatscollector to Hadoop

significantly reduced error rate (from 3-6%)

Toby: decided to have Christian work very closely with Ops team on this

good partnership

[slide 52]

backlog

compile PVs per page and make data public

- community has been asking about this for years

Erik: we have the historical PV data, it has been used for many research projects

Oliver: We can backfill for 1.5 years, using the new definition and the sampled logs, but it'll be a bit inaccurate, because... sampled logs.
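To illustrate why counts backfilled from sampled logs are only approximate, here is a minimal sketch of scaling a sampled count up to an estimate. The sampling rate of 1 in 1000 and the function name are assumptions for this example (the minutes do not state the rate), and the error figure is the usual rough approximation for random sampling rather than anything the team reported.

```python
import math


def estimate_pageviews(sampled_count, sampling_rate=1000):
    """Scale a count from 1-in-`sampling_rate` sampled logs to an estimate.

    Returns (estimate, approximate relative standard error).
    Hypothetical helper; the default rate is an assumed value.
    """
    estimate = sampled_count * sampling_rate
    # With random sampling, the relative error shrinks roughly as
    # 1/sqrt(number of sampled hits), so rarely viewed pages are noisy.
    if sampled_count > 0:
        rel_error = 1 / math.sqrt(sampled_count)
    else:
        rel_error = float("inf")
    return estimate, rel_error
```

Under these assumptions, a page with only a handful of sampled hits would carry a large relative error, while project-level totals would be comparatively stable.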

[slide 54]

Team

Lila: squares on the bottom mean what?

Kevin: blue = addition (e.g. Q4: myself), red = losses

[slide 55]

Process & Metrics

Lila: using point system?

Kevin: yes, Fibonacci

defined 34 points = one developer with little or no distraction during the sprint

7/24 outlier = Dan and Nuria on vacation

metrics show team was more focused in later sprints
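The point convention Kevin describes can be turned into a simple capacity estimate, sketched below. Only the figure of 34 points per undistracted developer per sprint comes from the minutes; the function name, the availability fractions, and the developer labels are hypothetical.

```python
POINTS_PER_FOCUSED_DEV = 34  # from the minutes: one developer, little or no distraction


def sprint_capacity(availability):
    """Estimate the points a team could complete in one sprint.

    `availability` maps each developer to the fraction of the sprint
    they can spend on sprint work (1.0 = fully focused, 0.0 = away).
    """
    return sum(availability.values()) * POINTS_PER_FOCUSED_DEV


# Example: two focused developers and one on vacation for the whole sprint.
capacity = sprint_capacity({"dev_a": 1.0, "dev_b": 1.0, "dev_c": 0.0})
```

Comparing points actually completed against such an estimate is one way a sprint with people on vacation would show up as an outlier.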

Toby: visualization helped team focus

[slide 57]

preliminary Q2 goals

[slide 58]

Out of scope:

need to put a brake on Vital Signs

Lila: tradeoffs?

Dario: ...

Lila: can we break this down, make a list

Toby: did a back-of-the-envelope velocity calculation, saw we could not do all of them

Lila: just make sure you get the things you commit to done, rest as stretch goals

[slide 59]

Toby: Visualization

Limn tried to do too much

Vital Signs as basis to make this available to community

ErikM: using "Vega" open source library

Dan: ...

Lila: managing another tool is also work...

Toby: ...

Erik: good to do a spike on it, if it's not worthwhile, we will find out quickly

Lila: I have worked myself with Saiku and Tableau[?]

do what you think works, be aware of "human" (work) cost

Toby: should be easy to put contractor on this

Kevin:

(skip "Unique Visitors: Proposed Milestones" slide)

Asks

[slide 64]

Toby:

continue with space and support - keep developers focused

recruit / staffing: need to tweak job description to attract right people

promote data informed perspective