Learning and Evaluation/Logic models

A logic model is a great way to document the programming process, from the reasoning behind it and its intended goals (theory of change) to the inputs, outputs and outcomes you expect. By using a logic model template for your programs, you can think about what you really want to put into, and get out of, your program. When your program event has ended, you can use a logic model to evaluate it: did the inputs you put into planning the event produce the outputs you expected? And did those outputs develop into longer-lasting impacts?

Presentations

- Theory of Change and Logic Models as Evaluation Tool

- Logic Models - A tool for planning and evaluation

Blank logic model template

A template for a logic model can be downloaded as OpenDocument or in other formats. Please download it and use it! Be sure to share your template here.

Alternatively, you can find blank templates in spreadsheet format, along with other useful templates for project and evaluation planning. To copy them, open them in Google Docs and select "Make a copy" from the File menu. You will then have your own template copies to modify and use as you wish.

Steps to creating a logic model

- Start with your theory of change: a description or vision of how and why a program will work. It explains the reasoning behind the actions you're taking and the results you expect those actions to have. Usually, you base the theory of change on past experience or best practices. You can place bullet points of your theory of change in the blue Situation section. Example:

This evaluation looks at two events, both titled “WikiWomen's Edit-a-thon.” The two events took place in March 2012 and June 2012, in San Francisco, California. Both events were held at the Wikimedia Foundation offices. The idea to host these two events came out of the desire to get more women to edit Wikipedia. A gender gap exists in Wikipedia—more men edit than women, which causes a systemic bias in the content. The goal of both events was to engage more women to edit Wikipedia and to improve content on English Wikipedia about women's history and related subjects.

Source: Wikimedia Evaluation Library, “WikiWomen's Edit-a-thons” case study

- In the light blue section, list your priorities related to your theory of change. These are the big-picture goals. For the WikiWomen's edit-a-thon, the priorities were to have participants improve content, to have participants socialize, and to train new editors to edit Wikipedia.

- In the yellow section, you'll place inputs. Every program takes something to make something happen, right? That's what inputs are: what you invest in the program. Inputs may include staff and/or volunteer time, money, research tools (e.g. books about the subject theme, JSTOR accounts for a contest), materials (handouts, note pads, swag, etc.), equipment (camera lenses, laptops), and partnerships (sponsors, GLAMs).

- In the green section, place outputs. Outputs cover what you expect to do to make the program happen, who you expect to be involved in the program, and what you expect to be produced while the program is taking place. Outputs are broken into three sections:

- Participants: Who you reach—for example: existing contributors, new potential contributors, donors, GLAMs, professors/teachers, people who use Wikimedia websites.

- Activities: This is what you do to make the program happen, and during the program itself. For example: write a how-to guide on how to upload to Commons, promote your Wiki Loves event, do the event itself, book the conference room, have a meeting with organizers afterwards to talk about the workshop, etc.

- Direct products: What you expect to come out of the program while it's happening—it's what you create during the event. For example: new editors out of a workshop, new photographs of monuments, new articles during a contest. These aren't the things that happen a day, week, or month (etc.) after the program stops. It's during the program.

- Next, you'll fill in your expected outcomes in the pink section. These are the deliverables and impacts you expect to take place after the program is over: the things you hope will happen once the program or event ends. They are broken down into three time periods:

- Short term outcomes: What you hope, expect, and wish to happen just after the program is over (from the day after until about 3 months after). Examples include: participants leave the workshop with a better understanding of how Wikipedia works, contest participants continue to expand contest articles, edit-a-thon participants become closer or become friends, organizers plan the next event, judges judge photographs from Wiki Loves Monuments, and even this one: organizers send a survey to participants via email.

- Intermediate outcomes: These are the impacts and outcomes you expect to happen three to twelve months after the event. These can be a little broader. For example: all edit-a-thon participants keep editing, contest articles develop into featured articles, a second program takes place, a new GLAM partnership develops out of a content donation.

- Long term outcomes: These take place one year to five years after the program ends. Examples include: editors are retained (making 5+ edits a month), more women edit Wikipedia, all monuments have photographs and a new subject is chosen for Wiki Loves, university makes Wikipedia editing a permanent class component.

- Don't forget to think about assumptions and external factors. Assumptions are things that you think will happen without having proof. For example, you might assume every participant at your edit-a-thon owns a laptop they can bring. Don't assume that; make sure to have extra laptops just in case. External factors are things that could affect your outcomes, such as culture, economy, politics, belief systems, laws, etc. For example: you want to retain editors one year later, but most people in your country don't own computers at home and connectivity is weak, so you have to consider that. Perhaps a retained editor means someone making five edits every two months.

Voila! You're done. Great job! Be sure to refer to your logic model throughout the planning process, and when you are evaluating your program.
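If you prefer to keep your logic model in a script or notebook rather than a spreadsheet, the sections described in the steps above can be sketched as a small data structure. This is only an illustrative sketch, not part of the official template: the class names `LogicModel`, `Outputs` and `Outcomes`, the helper `empty_sections`, and the function `outcome_horizon` are all hypothetical, and the exact cut-offs at 3 and 12 months are an assumption based on the time periods given above. The sample entries are taken from the examples in the steps.

```python
from dataclasses import dataclass, field


@dataclass
class Outputs:
    """Green section: what happens while the program is running."""
    participants: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    direct_products: list[str] = field(default_factory=list)


@dataclass
class Outcomes:
    """Pink section: what you expect after the program is over."""
    short_term: list[str] = field(default_factory=list)    # up to about 3 months after
    intermediate: list[str] = field(default_factory=list)  # 3 to 12 months after
    long_term: list[str] = field(default_factory=list)     # 1 to 5 years after


@dataclass
class LogicModel:
    """One logic model, with one attribute per section of the template."""
    situation: list[str] = field(default_factory=list)   # theory of change (blue)
    priorities: list[str] = field(default_factory=list)  # light blue
    inputs: list[str] = field(default_factory=list)      # yellow
    outputs: Outputs = field(default_factory=Outputs)
    outcomes: Outcomes = field(default_factory=Outcomes)
    assumptions: list[str] = field(default_factory=list)
    external_factors: list[str] = field(default_factory=list)

    def empty_sections(self) -> list[str]:
        """Return the names of sections that have not been filled in yet."""
        sections = {
            "situation": self.situation,
            "priorities": self.priorities,
            "inputs": self.inputs,
            "participants": self.outputs.participants,
            "activities": self.outputs.activities,
            "direct_products": self.outputs.direct_products,
            "short_term": self.outcomes.short_term,
            "intermediate": self.outcomes.intermediate,
            "long_term": self.outcomes.long_term,
            "assumptions": self.assumptions,
            "external_factors": self.external_factors,
        }
        return [name for name, items in sections.items() if not items]


def outcome_horizon(months_after: float) -> str:
    """Map time since the program ended to an outcome period.

    Assumed cut-offs: up to 3 months is short term, up to 12 months is
    intermediate, and anything later is treated as long term.
    """
    if months_after < 0:
        raise ValueError("months_after must not be negative")
    if months_after <= 3:
        return "short_term"
    if months_after <= 12:
        return "intermediate"
    return "long_term"


# Illustrative entries only, drawn from the examples in the steps above.
model = LogicModel(
    priorities=[
        "have participants improve content",
        "have participants socialize",
        "train new editors to edit Wikipedia",
    ],
    inputs=["volunteer time", "extra laptops"],
)
model.outputs.participants.append("new potential contributors")

print(model.empty_sections())  # sections still to be filled in
print(outcome_horizon(6))      # intermediate
```

A structure like this makes it easy to spot sections you have not thought about yet. The spreadsheet template remains the simpler choice for sharing with other organizers.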


Example community-created logic models

Action Logic Models

- Edit-a-thons and Workshops

- Wikipedia Education Program

- GLAM Partnerships

- Image upload competitions

- On-wiki Writing Contests

- Ramona Park Edit-a-thon

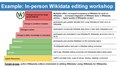

Staircase Logic Models

As an easy start for working with logic models, a slightly simplified step-by-step model (aka staircase logic model) can be useful.

To better understand how to make a staircase logic model, visit the presentation "Logic models. A tool for planning and evaluation."

- Wikidata editing workshop

- Candy writing contest

- Gender Gap editathon

- Girls Wiki Club

- Wiki Camp

More on Commons

Need feedback or help with your logic model?

Ask for feedback and/or help by posting on the talk page or by emailing eval at wikimedia dot org.