Research:A System for Large-scale Image Similarity

This page documents a research project in progress.

Information may be incomplete and change as the project progresses.

Please contact the project lead before formally citing or reusing results from this page.

After several requests from different parts of the movement, the Research team is working on an image similarity tool. The tool will take as input an image and return the most similar images in Wikimedia Commons.

Methods[edit]

This project is done in two parts:

- Calculate the embeddings of the images stored as string(base64) in csv file.

- Create the index tree using embeddings generate in above step, for recommendations. For this step we will be using AnnoyIndex from annoy.

Step 1: Calculating embeddings from the image base64 strings[edit]

As, image strings are stored in csv format so we decided to go with pandas and loaded the csv file into pandas Dataframe. After this we extracted the column of image base64 representation. Now, after getting Image base64 strings we passed the strings to generate_image function which used these strings to generate image array. We then finished out final step to calculate image embeddings which then again stored in csv file with one additional column of image urls.

Step 2: Creating Index tree using embeddings[edit]

After getting the embedding of the images, we created index tree using AnnoyIndex from annoy. This index tree will we used to get similar images at the time of APIs calling.

Timeline[edit]

- Investigate the best tools and methods to efficiently compute image similarity at scale

- Implement a first prototype for large-scale image similarity

- Iterate on the prototype and implement a public-facing tool

Experiments[edit]

Experiment 1: Model selection for Embedding generation[edit]

We mainly want to test EfficientNet against the ResNet50, that is why we only took variants of these models.

Observation: While, doing experiment we observe one strange thing variants of EfficientNet taking much time to load on memory otherwise they are faster in terms of model inference.

| name | parameters | images | time_took(sec) | testing_image | overall_acc |

|---|---|---|---|---|---|

| EfficientNetV2B3 | 12930622 | 101733 | 298.12573432922363 | 1180 | 48.42% |

| EfficientNetB4 | 17673816 | 101733 | 543.248486995697 | 1180 | 42.33% |

| ResNet50V1 | 23561152 | 101733 | 187.57541871070862 | 1180 | 40.51% |

| EfficientNetB7 | 64097680 | 101733 | 867.1762790679932 | 1180 | 41.60% |

| ResNet50V2 | 23564800 | 101733 | 365.39129114151 | 1180 | 40.14% |

Experiment 2: Finding the scalability of AnnoyIndex tree[edit]

Experiment 3: Selecting the n_components of PCA for embeddings[edit]

To selected the best PCA n_components, we experimented with different models by selecting different n_components. To compare different models with different PCA components we used to matrix

1. class_accuracy and

2. overlap_acc.

Columns of Table[edit]

1. name: Name of the model used in the experiment.

2. n_components: It defines the number of principle components we used for PCA.

3. variance_ratio: It is the ratio of the total information to the information represented by principle components of PCA on images.

4. class_accuracy: It the the accuracy of the predicted class of top 10 predicted image of belongs to same class as the source image. It is done for all images in testing dateset.

5. overlap_acc: It is quite interesting, start with assumption that images predicted by tree(annoy) of original images(let's say ideal_list) without any PCA is ideal. Now, images predicted after applying PCA with different n_components, if image present in ideal_list, we will call it Hit otherwise Miss, then sum of all hits divided by total predicted images(we took 100) gave us this accuracy for that n_component of PCA.

6. tree_size: We also keep track of size of tree(annoy) created after applying PCA.

| name | n_components | variance ratio | class_accuracy | overlap_acc | tree_size |

|---|---|---|---|---|---|

| EfficientNetB3V2 | -1 | 100.00% | 48.42% | 100.00% | 726.94MB |

| EfficientNetB3V2 | 1024 | 94.47% | 48.25% | 81.85% | 525.67MB |

| EfficientNetB3V2 | 512 | 83.62% | 47.96% | 80.22% | 334.65MB |

| EfficientNetB3V2 | 256 | 73.22% | 47.50% | 77.42% | 240.83MB |

| EfficientNetB3V2 | 128 | 60.28% | 46.32% | 69.26% | 194.31MB |

| EfficientNetB3V2 | 64 | 46.31% | 40.97% | 52.70% | 166.54MB |

| name | n_components | variance ratio | class_accuracy | overlap_acc | tree_size |

|---|---|---|---|---|---|

| EfficientNetB4 | -1 | 100.00% | 42.33% | 100.00% | 825.95MB |

| EfficientNetB4 | 1024 | 93.45% | 42.32% | 85.10% | 526.46MB |

| EfficientNetB4 | 512 | 85.37% | 42.25% | 84.09% | 336.73MB |

| EfficientNetB4 | 256 | 74.33% | 42.08% | 76.84% | 241.28MB |

| EfficientNetB4 | 128 | 58.49% | 40.48% | 67.35% | 194.53MB |

| EfficientNetB4 | 64 | 43.50% | 34.98% | 50.88% | 168.76MB |

| name | n_components | variance ratio | class_accuracy | overlap_acc | tree_size |

|---|---|---|---|---|---|

| EfficientNetB7 | -1 | 100.00% | 41.60% | 100.00% | 1132.17MB |

| EfficientNetB7 | 1024 | 92.78% | 41.69% | 85.67% | 532.97MB |

| EfficientNetB7 | 512 | 87.51% | 41.90% | 85.05% | 343.51MB |

| EfficientNetB7 | 256 | 76.62% | 40.76% | 75.54% | 250.43MB |

| EfficientNetB7 | 128 | 60.11% | 38.07% | 63.64% | 201.94MB |

| EfficientNetB7 | 64 | 44.96% | 36.08% | 53.85% | 174.78MB |

| name | n_components | variance ratio | class_accuracy | overlap_acc | tree_size |

|---|---|---|---|---|---|

| ResNet50V1 | -1 | 100.00% | 40.51% | 100.00% | 917.29MB |

| ResNet50V1 | 1024 | 93.57% | 40.31% | 83.25% | 523.87MB |

| ResNet50V1 | 512 | 84.63% | 40.10% | 80.77% | 331.79MB |

| ResNet50V1 | 256 | 73.91% | 39.24% | 74.97% | 238.33MB |

| ResNet50V1 | 128 | 61.83% | 38.88% | 69.67% | 190.20MB |

| ResNet50V1 | 64 | 49.22% | 35.58% | 55.98% | 162.61MB |

| name | n_components | variance ratio | class_accuracy | overlap_acc | tree_size |

|---|---|---|---|---|---|

| ResNet50V2 | -1 | 100.00% | 40.14% | 100.00% | 916.58MB |

| ResNet50V2 | 1024 | 95.73% | 40.25% | 82.71% | 526.60MB |

| ResNet50V2 | 512 | 86.23% | 39.92% | 80.06% | 334.51MB |

| ResNet50V2 | 256 | 74.25% | 39.01% | 73.46% | 239.71MB |

| ResNet50V2 | 128 | 60.57% | 38.21% | 66.84% | 192.16MB |

| ResNet50V2 | 64 | 46.84% | 35.47% | 55.34% | 164.16MB |

Experiment 4: End-to-End(in reference to Time) testing of models[edit]

In this experiment what we want to test, is mainly timing of the model. Like how much time it will take to generate result. In this experiment time is calculated from passing to url to getting the recommendation of similar images.

PIPELINE FOLLOWED[edit]

1. Downloading of image from the URL and loading it into numpy array.

2. Loading of the PCA.

3. Loading of the tree(annoy).

4. Calculation of the embeddings of image.

5. Applying of the PCA to embeddings.

6. Getting the ids of recommended images.

7. Fetching the URL of recommended image from CSV file.

NOTE: We preloaded the model into RAM.

| name | n_components | already_loaded | iter | time_took(sec) |

|---|---|---|---|---|

| EfficientNetB3V2 | -1 | True | 1 | 2.993394613265991 |

| EfficientNetB3V2 | -1 | True | 2 | 0.8071532249450684 |

| EfficientNetB3V2 | -1 | True | 3 | 0.8314507007598877 |

| EfficientNetB3V2 | 1024 | True | 1 | 2.900543689727783 |

| EfficientNetB3V2 | 1024 | True | 2 | 0.8321168422698975 |

| EfficientNetB3V2 | 1024 | True | 3 | 0.8280463218688965 |

| EfficientNetB3V2 | 512 | True | 1 | 2.4594342708587646 |

| EfficientNetB3V2 | 512 | True | 2 | 0.8171608448028564 |

| EfficientNetB3V2 | 512 | True | 3 | 0.8010208606719971 |

| EfficientNetB3V2 | 256 | True | 1 | 1.8577890396118164 |

| EfficientNetB3V2 | 256 | True | 2 | 0.8222873210906982 |

| EfficientNetB3V2 | 256 | True | 3 | 0.8436849117279053 |

| EfficientNetB3V2 | 128 | True | 1 | 0.8022160530090332 |

| EfficientNetB3V2 | 128 | True | 2 | 0.7558486461639404 |

| EfficientNetB3V2 | 128 | True | 3 | 0.7694368362426758 |

| EfficientNetB3V2 | 64 | True | 1 | 0.8248932361602783 |

| EfficientNetB3V2 | 64 | True | 2 | 0.8313982486724854 |

| EfficientNetB3V2 | 64 | True | 3 | 0.7825984954833984 |

| name | n_components | already_loaded | iter | time_took(sec) |

|---|---|---|---|---|

| EfficientNetB4 | -1 | True | 1 | 31.089104413986206 |

| EfficientNetB4 | -1 | True | 2 | 0.8255059719085693 |

| EfficientNetB4 | -1 | True | 3 | 0.7638154029846191 |

| EfficientNetB4 | 1024 | True | 1 | 0.8171260356903076 |

| EfficientNetB4 | 1024 | True | 2 | 0.8361170291900635 |

| EfficientNetB4 | 1024 | True | 3 | 0.8306858539581299 |

| EfficientNetB4 | 512 | True | 1 | 0.7886874675750732 |

| EfficientNetB4 | 512 | True | 2 | 0.8270878791809082 |

| EfficientNetB4 | 512 | True | 3 | 0.7964653968811035 |

| EfficientNetB4 | 256 | True | 1 | 0.7913780212402344 |

| EfficientNetB4 | 256 | True | 2 | 0.7964553833007812 |

| EfficientNetB4 | 256 | True | 3 | 0.7966964244842529 |

| EfficientNetB4 | 128 | True | 1 | 0.7879147529602051 |

| EfficientNetB4 | 128 | True | 2 | 0.8378396034240723 |

| EfficientNetB4 | 128 | True | 3 | 0.8303694725036621 |

| EfficientNetB4 | 64 | True | 1 | 0.7877256870269775 |

| EfficientNetB4 | 64 | True | 2 | 0.7898170948028564 |

| EfficientNetB4 | 64 | True | 3 | 0.7847003936767578 |

| name | n_components | already_loaded | iter | time_took(sec) |

|---|---|---|---|---|

| EfficientNetB7 | -1 | True | 1 | 7.930605411529541 |

| EfficientNetB7 | -1 | True | 2 | 0.835761308670044 |

| EfficientNetB7 | -1 | True | 3 | 0.8150243759155273 |

| EfficientNetB7 | 1024 | True | 1 | 0.813317060470581 |

| EfficientNetB7 | 1024 | True | 2 | 0.8263413906097412 |

| EfficientNetB7 | 1024 | True | 3 | 0.8415734767913818 |

| EfficientNetB7 | 512 | True | 1 | 0.8173937797546387 |

| EfficientNetB7 | 512 | True | 2 | 0.8228459358215332 |

| EfficientNetB7 | 512 | True | 3 | 0.8680799007415771 |

| EfficientNetB7 | 256 | True | 1 | 0.8364121913909912 |

| EfficientNetB7 | 256 | True | 2 | 0.8222959041595459 |

| EfficientNetB7 | 256 | True | 3 | 0.8135280609130859 |

| EfficientNetB7 | 128 | True | 1 | 0.787550687789917 |

| EfficientNetB7 | 128 | True | 2 | 0.8863904476165771 |

| EfficientNetB7 | 128 | True | 3 | 0.8605077266693115 |

| EfficientNetB7 | 64 | True | 1 | 0.8497884273529053 |

| EfficientNetB7 | 64 | True | 2 | 1.692915678024292 |

| EfficientNetB7 | 64 | True | 3 | 0.8910524845123291 |

| name | n_components | already_loaded | iter | time_took(sec) |

|---|---|---|---|---|

| ResNet50V1 | -1 | True | 1 | 1.8402047157287598 |

| ResNet50V1 | -1 | True | 2 | 0.8064224720001221 |

| ResNet50V1 | -1 | True | 3 | 0.7804932594299316 |

| ResNet50V1 | 1024 | True | 1 | 0.8325328826904297 |

| ResNet50V1 | 1024 | True | 2 | 0.7556729316711426 |

| ResNet50V1 | 1024 | True | 3 | 0.7284393310546875 |

| ResNet50V1 | 512 | True | 1 | 0.7692885398864746 |

| ResNet50V1 | 512 | True | 2 | 0.7979185581207275 |

| ResNet50V1 | 512 | True | 3 | 0.7842895984649658 |

| ResNet50V1 | 256 | True | 1 | 0.8078567981719971 |

| ResNet50V1 | 256 | True | 2 | 0.7786800861358643 |

| ResNet50V1 | 256 | True | 3 | 0.7741687297821045 |

| ResNet50V1 | 128 | True | 1 | 0.8202202320098877 |

| ResNet50V1 | 128 | True | 2 | 0.8022265434265137 |

| ResNet50V1 | 128 | True | 3 | 0.7970542907714844 |

| ResNet50V1 | 64 | True | 1 | 0.7963719367980957 |

| ResNet50V1 | 64 | True | 2 | 0.8078024387359619 |

| ResNet50V1 | 64 | True | 3 | 0.8760688304901123 |

| name | n_components | already_loaded | iter | time_took(sec) |

|---|---|---|---|---|

| ResNet50V2 | -1 | True | 1 | 1.9190337657928467 |

| ResNet50V2 | -1 | True | 2 | 0.8073186874389648 |

| ResNet50V2 | -1 | True | 3 | 0.8345324993133545 |

| ResNet50V2 | 1024 | True | 1 | 0.8155877590179443 |

| ResNet50V2 | 1024 | True | 2 | 0.8098921775817871 |

| ResNet50V2 | 1024 | True | 3 | 0.8261690139770508 |

| ResNet50V2 | 512 | True | 1 | 0.8170630931854248 |

| ResNet50V2 | 512 | True | 2 | 0.7819721698760986 |

| ResNet50V2 | 512 | True | 3 | 0.7720069885253906 |

| ResNet50V2 | 256 | True | 1 | 0.7744569778442383 |

| ResNet50V2 | 256 | True | 2 | 0.8012771606445312 |

| ResNet50V2 | 256 | True | 3 | 0.7616298198699951 |

| ResNet50V2 | 128 | True | 1 | 0.7649922370910645 |

| ResNet50V2 | 128 | True | 2 | 0.7748057842254639 |

| ResNet50V2 | 128 | True | 3 | 0.7836484909057617 |

| ResNet50V2 | 64 | True | 1 | 0.7733273506164551 |

| ResNet50V2 | 64 | True | 2 | 0.7945435047149658 |

| ResNet50V2 | 64 | True | 3 | 0.8264520168304443 |

We also observe same thing which we discussed in Experiment 1.

RESULT: We decided to go with EfficientNetB3V2 with PCA(n_components=256).

Experiment 5: accuracy of selected model with different classes[edit]

After selecting the model we decided to do one class accuracy check with model.

| category | accuracy |

|---|---|

| Architecture | 12.40% |

| Drawings | 32.80% |

| Flags | 42.50% |

| Fossils | 61.10% |

| Paintings | 32.20% |

| Politician | 48.10% |

| Sculptures | 21.80% |

| aircraft | 69.20% |

| apple | 70.30% |

| banner | 27.10% |

| bed | 49.10% |

| bicycle | 56.90% |

| bird | 54.50% |

| boat | 45.70% |

| book | 39.50% |

| bottle | 62.90% |

| brick | 25.40% |

| bridge | 49.80% |

| building | 13.80% |

| bus | 67.60% |

| cake | 46.90% |

| car | 73.90% |

| cat | 73.60% |

| chair | 43.50% |

| clothes | 34.90% |

| cow | 54.30% |

| dog | 51.30% |

| door | 43.50% |

| fence | 18.10% |

| fire_hydrant | 53.00% |

| flower | 37.90% |

| fog | 26.50% |

| food | 20.80% |

| fruit | 40.00% |

| furniture | 37.10% |

| hill | 39.80% |

| horse | 56.30% |

| house | 24.20% |

| leaves | 37.80% |

| light | 60.10% |

| motorcycle | 82.80% |

| mountain | 33.60% |

| mud | 38.20% |

| paper | 83.30% |

| person | 13.80% |

| plastic | 35.80% |

| potted_plant | 69.70% |

| river | 31.60% |

| road | 48.30% |

| rock | 28.40% |

| roof | 25.00% |

| rug | 57.70% |

| sand | 31.40% |

| sea | 29.70% |

| sheep | 65.00% |

| shoe | 62.60% |

| sky | 15.50% |

| snow | 30.80% |

| stairs | 23.80% |

| stone | 28.60% |

| street_sign | 74.50% |

| table | 34.20% |

| textile | 50.90% |

| tile | 41.10% |

| toilet | 74.40% |

| train | 73.50% |

| tree | 22.10% |

| truck | 55.30% |

| vase | 62.10% |

| vegetable | 49.30% |

| wall | 19.20% |

| water | 21.80% |

| water_droplets | 53.10% |

| window | 31.30% |

| wood | 30.20% |

| Overall Accuracy | 43.80% |

After seeing these low accuracy of classes, we were confident that there is something wrong class labels, to confirm this we perform one more experiment Experiment 6

Experiment 6: Testing model with critical classes(having low accuracy)[edit]

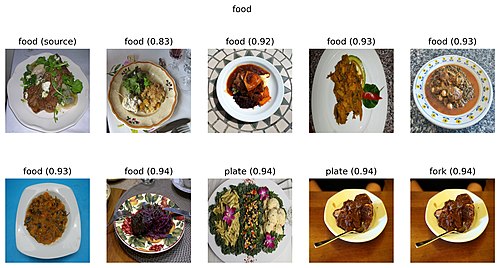

These are some sample images of this experiment where we can easily observe that although images are similar to each other content-wise but they are present under different categories.

Above (source) image belongs to Food category, notice last three images contains food but they belongs to different category which was the cause of low score in preview experiment.

This is second sample of recommendation (source)image belongs to House category.

Spark: Recommendation Tree Generation[edit]

1. PCA generation for mapping embeddings to [256,] vector[edit]

When we were starting with this step there were mainly two choices available -

1. pyspark.ml.feature.PCA

2. sklearn.decomposition.IncrementalPCA

and both were have there problems -

The problem with pyspark.ml.feature.PCA was that it required spark context everytime even at the time of inference which was not possible in our case. And, the problem with sklearn.decomposition.IncrementalPCA was that it can't be distributed with spark.

We choose sklearn.decomposition.IncrementalPCA because we could fill it with batches of data, which will reduce the spark efficiency but it would not be required spark context at the time of inference which was required in our case.

# Created the instance of PCA with 256 components

pca = IncrementalPCA(n_components=256, batch_size=50000)

# this function will fill the batches of data to pca

def fill_pca(df):

global pca

df_list = df.collect()

features = []

for row in tqdm(df_list):

features.append(list(row['features']))

arr = np.array(features)

pca = pca.partial_fit(arr)

These are the part of code which is used to fill pca with required data batch by batch

with open('pca256.pkl', 'wb') as f:

pickle.dump(pca, f)

At the end we just dumped the pca instance for using it later for PCA generation of embeddings.

2. Index to URL map file generation[edit]

OK, we had the small problem is that annoy will return index of the matched embeddings of image, but the limitation with annoy was that only integers can be used as index but we needed URL of the matched image.

SO, to solve this problem we came up with a map which will accept index of the image and return URL of the image. To generate this map we used following code -

tree = AnnoyIndex(256, 'angular')

# will work as map file for index->URL

d = dict()

ite = 0

def fill_tree(df, ite):

df_list = df.collect()

# following will iterate batch row by row

for row in tqdm(df_list):

data = np.array(list(row['features'])).reshape(1, -1)

# this will map image_url from index

d[ite] = row['image_url']

tree.add_item(ite, pca.transform(data).reshape(-1))

ite += 1

return ite

At last we dumped the map file to use at the time of inferencing the API.

with open('./idx2url.pkl', 'wb') as f:

pickle.dump(d, f)

3. Annoy tree generation for recommendation[edit]

After generating pca and idx2url map, it was time to complete the final step of generating annoy tree for making recommedation of similar image. Nothing much left to tell about annoy as we discussed every thing about this in this document, we will direct jump to code we use to fill embedding to tree:

# this is a instance of annoy tree

tree = AnnoyIndex(256, 'angular')

d = dict()

ite = 0

# this function will fill embeddings to tree row by row

def fill_tree(df, ite):

df_list = df.collect()

for row in tqdm(df_list):

data = np.array(list(row['features'])).reshape(1, -1)

d[ite] = row['image_url']

# notice we are adding embeddings to tree after applying pca on it

tree.add_item(ite, pca.transform(data).reshape(-1))

ite += 1

return ite

In order to understand why 100 is passed in build method, you can refer to following paragraph which was taken from the annoy github page.

a.build(n_trees, n_jobs=-1) builds a forest of n_trees trees. More trees gives higher precision when querying. After calling build, no more items can be added. n_jobs specifies the number of threads used to build the trees. n_jobs=-1 uses all available CPU cores.

We saved tree to use it in API

tree.build(100)

tree.save('./tree.ann')

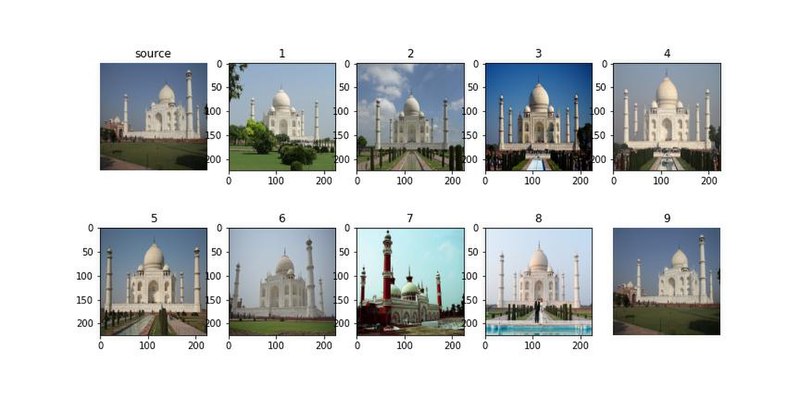

4. Testing everything till now, before moving further[edit]

# We took url of image

url = 'https://www.malaysia-today.net/wp-content/uploads/2019/11/Taj-Mahal-Agra-India.jpg'

# downloading image data form URL

img_data = urllib.request.urlopen(url).read()

# image data to image conversion

image = generate_image(img_data)

# first we passed image to model(efficientNetB3)

features = model.predict(np.expand_dims(image, axis=0))

# here we are applying pca to embeddings

feature_pca = loaded_pca.transform(features)

# using tree to generate index of similar images which then mapped to image_url

res = [d[i] for i in tree.get_nns_by_vector(feature_pca.reshape(-1), n=10)]

Codes[edit]

API Codes Please refer to this page

UI Codes Check this out

Results[edit]

You can test result by yourself: [[1]]

On Phabricator[edit]

Follow this task