Research:Article feedback/Final quality assessment


Overview

In this study we sought to extend the evaluation of feedback quality performed in stage 1 and stage 2, for three reasons:

- The design of AFT has changed substantially since the last evaluation

- We want to build a training/test set for a machine classifier to aid in feedback moderation

- We'd like to more thoroughly explore quality differences based on the popularity of the articles on which feedback is posted.

To perform this evaluation, we generated a new sample of feedback posted using the most recent version of the article feedback tool. By building a stratified sample based on quartiles of article popularity and employing a random resampling method, we statistically evaluate the overall quality of feedback and compare the quality of feedback between different *types* of articles.

Methods

The Sample

In order to both get a sense of the usefulness of a random sample of feedback *and* make comparisons between articles that received different rates of feedback, we drew a stratified sample. To identify the potential levels of the strata, we took all feedback submissions per page from the month between Nov. 22nd and Dec. 21st. Figure 1 shows the long-tail distribution whereby most articles received few submissions, but a few received a great many. Based on this histogram, we approximated four quartiles of feedback submission rates. Note that the first approximate quartile is much larger (37.9%) because submission counts are discrete: the group of articles with exactly one submission cannot be split any further.

- 1 submission: 37.90% of feedback, 70.4% of articles

- 2-3 submissions: 25.77% of feedback, 21.1% of articles

- 4-14 submissions: 24.98% of feedback, 7.7% of articles

- 15 or more submissions: 11.35% of feedback, 0.8% of articles

From each of these quartiles, we sampled 300 feedback submissions to obtain a stratified sample of n=1200. From this sample, we can build high-confidence usefulness evaluations from each quartile *and* re-sample in order to get a representative random sample of feedback based on the observed proportions that the approximate quartiles represent (n=808).
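The stratification step described above can be sketched roughly as follows. This is a minimal illustration, not the code used in the study: the input layout (a list of `(feedback_id, page_id)` pairs) and the names `stratum_for` and `stratified_sample` are assumptions made for the example. Only the stratum boundaries and the per-stratum size of 300 come from the text.

```python
import random
from collections import Counter, defaultdict


def stratum_for(submission_count):
    """Map a page's submission count to its approximate quartile label.

    Boundaries follow the list above: 1, 2-3, 4-14, 15 or more.
    """
    if submission_count < 1:
        raise ValueError("a page with feedback has at least one submission")
    if submission_count == 1:
        return "1"
    if submission_count <= 3:
        return "2-3"
    if submission_count <= 14:
        return "4-14"
    return "15+"


def stratified_sample(feedback, per_stratum=300, seed=0):
    """Draw up to `per_stratum` feedback ids from each stratum.

    `feedback` is a list of (feedback_id, page_id) pairs; this layout is
    hypothetical and only serves the example.
    """
    rng = random.Random(seed)
    # Count submissions per page to decide which stratum each page falls in.
    per_page = Counter(page_id for _, page_id in feedback)
    by_stratum = defaultdict(list)
    for feedback_id, page_id in feedback:
        by_stratum[stratum_for(per_page[page_id])].append(feedback_id)
    # Sample without replacement; keep everything if a stratum is too small.
    return {
        label: rng.sample(ids, min(per_stratum, len(ids)))
        for label, ids in by_stratum.items()
    }
```

With the real data, calling `stratified_sample(feedback)` with the default `per_stratum=300` would yield the four groups of 300 that make up the n=1200 sample.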

Evaluation

To evaluate the usefulness of feedback, we employed the Feedback Evaluation System as we did in phase 1 and phase 2 of our earlier analyses. This time, however, we redesigned the tool to mimic the moderation actions that users can perform on-wiki:

- Useful - This comment is useful and suggests something to be done to the article.

- Unusable - This comment does not suggest something useful to be done to the article, but it is not inappropriate enough to be hidden.

- Inappropriate - This comment should be hidden: examples would be obscenities or vandalism.

- Oversight - Oversight should be requested. The comment contains one of the following: phone numbers, email addresses, pornographic links, or defamatory/libelous comments about a person.

We assigned each sampled feedback submission to at least two volunteer Wikipedians. Out of the 1200 feedback submissions, our volunteers agreed 834 times (69.3%) and disagreed by one rank 356 times (29.6%). To make sense of these disagreements, we used two aggregation strategies, best and worst:

- best: Best errs by choosing the most optimistic evaluation. For example, if the two volunteers came up with "useful" and "unusable", best chooses the better rating: "useful".

- worst: Worst errs by choosing the most pessimistic evaluation. For example, if the two volunteers came up with "useful" and "unusable", worst chooses the worse rating: "unusable".

In the results section below, we report our results using both aggregation strategies.
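The two aggregation strategies can be illustrated with a short sketch. It assumes the four ratings are ranked in the order they are listed above, from "useful" (most favourable) to "oversight" (least favourable). That ordering is inferred from the phrase "disagreed by one rank" rather than stated outright, and the function names are made up for the example.

```python
# Ratings ordered from most to least favourable (assumed ordering).
RANKS = ["useful", "unusable", "inappropriate", "oversight"]
RANK_INDEX = {name: position for position, name in enumerate(RANKS)}


def rank_distance(first, second):
    """Number of ranks separating two ratings (0 means agreement)."""
    return abs(RANK_INDEX[first] - RANK_INDEX[second])


def aggregate_best(ratings):
    """Pick the most optimistic rating among the volunteers' ratings."""
    return min(ratings, key=RANK_INDEX.__getitem__)


def aggregate_worst(ratings):
    """Pick the most pessimistic rating among the volunteers' ratings."""
    return max(ratings, key=RANK_INDEX.__getitem__)


ratings = ["useful", "unusable"]
best = aggregate_best(ratings)
worst = aggregate_worst(ratings)
```

For the example pair, `best` is "useful" and `worst` is "unusable", matching the two bullet points above. Because both functions accept any number of ratings, they also cover submissions that were rated by more than two volunteers.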

Results

What proportion of feedback is useful?

To obtain a sample representative of all feedback in Wikipedia from our stratified sample, we resampled from each stratum in proportion to its share of all feedback, scaling each share against the largest stratum:

- 1 submission: 37.90/37.90 = 100% * 300 = 300

- 2-3 submissions: 27.77/37.90 = 73.37% * 300 = ~220

- 4-14 submissions: 24.98/37.90 = 65.91% * 300 = ~198

- 15 or more submissions: 11.35/37.90 = 29.94% * 300 = ~90
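The arithmetic in the list above amounts to scaling each stratum's share of feedback against the largest share and multiplying by the 300 submissions available per stratum. A minimal sketch, using the shares exactly as printed in that list; the function names and the dictionary layout are assumptions for illustration only:

```python
import random


def proportional_allocation(shares, per_stratum=300):
    """Number of submissions to keep per stratum.

    The largest stratum keeps all `per_stratum` items; the others keep a
    fraction equal to their share divided by the largest share.
    """
    largest = max(shares.values())
    return {
        label: round(per_stratum * share / largest)
        for label, share in shares.items()
    }


def resample(stratified, allocation, seed=0):
    """Subsample each stratum (without replacement) down to its allocation."""
    rng = random.Random(seed)
    return {
        label: rng.sample(ids, allocation[label])
        for label, ids in stratified.items()
    }


# Shares of feedback (in percent) as printed in the list above.
shares = {"1": 37.90, "2-3": 27.77, "4-14": 24.98, "15+": 11.35}
allocation = proportional_allocation(shares)
total = sum(allocation.values())
```

With these inputs the allocation comes out at 300, 220, 198 and 90 submissions, for a total of 808.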

With this resampling, we are left with 808 of the original 1200 feedback submissions. Figure 2 plots the proportion of feedback that fell into the 4 categories with 95% confidence intervals. The figure makes the skewing effect of the aggregation strategies apparent. For example, while 66% of feedback was deemed useful by at least one volunteer (best aggregation), only 39% was deemed useful by both volunteers (worst aggregation).

Best aggregation:

| utility | count | prop |
|---|---|---|
| useful | 530 | 0.66 |
| unusable | 251 | 0.31 |
| inappropriate | 26 | 0.03 |
| oversight | 1 | 0.00 |

Worst aggregation:

| utility | count | prop |
|---|---|---|
| useful | 319 | 0.39 |
| unusable | 429 | 0.53 |
| inappropriate | 56 | 0.07 |
| oversight | 4 | 0.00 |

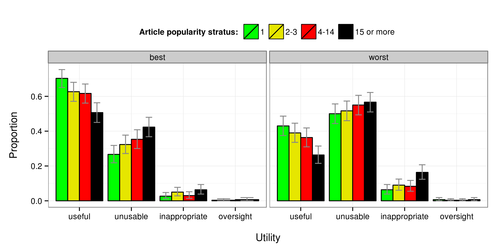
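The page does not say how the 95% confidence intervals were computed. As one common possibility, the sketch below applies the normal-approximation (Wald) interval to the "best" aggregation counts from the table above. The choice of method and the function name `proportion_ci` are assumptions, so the resulting bounds need not match the plotted intervals exactly.

```python
import math


def proportion_ci(count, n, z=1.96):
    """Normal-approximation interval for a proportion, clipped to [0, 1].

    z=1.96 corresponds to a two-sided 95% interval.
    """
    p = count / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return max(0.0, p - half_width), min(1.0, p + half_width)


# Counts under the "best" aggregation, taken from the table above.
best_counts = {"useful": 530, "unusable": 251, "inappropriate": 26, "oversight": 1}
n = sum(best_counts.values())
intervals = {label: proportion_ci(count, n) for label, count in best_counts.items()}
```

The normal approximation behaves poorly for very small proportions such as the "oversight" category, where the lower bound has to be clipped at zero. A Wilson or bootstrap interval would be a more careful choice for those rows.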

How is feedback utility affected by the article popularity?

To answer this question, we performed the same analysis as above, but compared between the strata, using all 300 sampled submissions in each stratum. Figure 3 plots the proportion of useful feedback for each of the four strata of feedback rates. As predicted, the proportion of useful feedback decreases as articles increase in popularity. Using the "best" aggregation, the proportion of useful feedback decreases from 70.3% for articles that received only one feedback submission to 50.6% for articles that received 15 or more submissions.

Best aggregation (useful feedback per stratum):

| stratum (submissions) | count | prop |
|---|---|---|
| 1 | 211 | 0.70 |
| 2-3 | 188 | 0.63 |
| 4-14 | 185 | 0.62 |
| 15+ | 152 | 0.51 |

Worst aggregation (useful feedback per stratum):

| stratum (submissions) | count | prop |
|---|---|---|
| 1 | 129 | 0.43 |
| 2-3 | 117 | 0.39 |
| 4-14 | 109 | 0.36 |
| 15+ | 79 | 0.26 |