Research:Surveys on the gender of editors/Report

This report summarizes the findings and recommendations based on surveys of the editor population in Arabic, English, and Norwegian Wikipedia that ran from 5 to 13 August 2019. The QuickSurveys approach used in this pilot would need some changes to be viable long-term, but asking about gender as part of the onboarding experience is currently feasible and would complement existing user preference and Community Insights data.

Overview

See the main page for more context on the survey design. There are a few key aspects of the survey that are worth repeating here though:

- The survey was designed to have minimal barriers to responding -- i.e. it was a widget that displayed directly on Wikipedia pages to editors who were signed in (and randomly sampled into the study) and it had a single question about gender so took almost no time to respond. In theory, this should lead to a more representative group of respondents as it will not just sample for more dedicated editors who, for instance, are willing to go through a longer survey or navigate user preferences.

- The QuickSurveys extension was used because it also provides additional metadata for the responses such as the user's edit count (in buckets of 0 edits, 1-4 edits, 5-99 edits, 100-999 edits, and 1000+ edits) and allows us to roughly reconstruct reading sessions to get a sense of how representative the respondents are of the larger editor population (debiasing).

Debiasing Process

A large suite of contextual features is gathered for each survey response to capture the measurable aspects that might relate to how likely an individual is to respond to the survey. Most obviously, more frequent users of Wikipedia will have more chances to see the survey and are therefore more likely to respond to it. To counteract this bias -- i.e. to provide results that are representative of the broader population and not just the highly-active user population -- we weight each response so that the weighted respondent population resembles the representative population of editors for that wiki.

In general, the respondents who were most likely to take the survey:

- had longer reading sessions (number of page views and time spent)

- attempted an edit during the session

- visited a Wikipedia Main Page

- read pages that are more specific (fewer interlanguage links)

We see little or mixed effect for these other aspects:

- country

- topic of articles being read

- number of Wikipedia language editions viewed

- demand (average number of page views to pages that were read)

- quality (length of pages viewed)

In practice, the debiasing procedure downweights responses from very active editors and upweights responses from editors with low edit counts. In English, we see that men were slightly more likely to respond to the survey. In Norwegian and Arabic, we see the opposite: women were slightly more likely to respond to the survey. We present the results below using the debiased data. The process at most changes the results by 1-2% and thus is a useful check but in practice does not change the conclusions substantially.
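The reweighting idea can be illustrated with a much simpler scheme than the one used for this report. The sketch below is post-stratification on a single feature (the edit bucket), whereas the actual procedure drew on many session-level features. The function names, the toy responses, and the population shares are all hypothetical and chosen only to make the arithmetic easy to follow.

```python
from collections import Counter


def poststratification_weights(respondent_strata, population_shares):
    """Return one weight per respondent so that the weighted share of each
    stratum matches its share in the target population."""
    counts = Counter(respondent_strata)
    n = len(respondent_strata)
    weights = []
    for stratum in respondent_strata:
        sample_share = counts[stratum] / n
        weights.append(population_shares[stratum] / sample_share)
    return weights


def weighted_proportion(flags, weights):
    """Weighted share of respondents for whom the flag is True."""
    return sum(w for f, w in zip(flags, weights) if f) / sum(weights)


# Hypothetical toy data: very active editors are over-represented among
# respondents (60% of responses) relative to the population (20%).
strata = ["1000+ edits"] * 6 + ["1-4 edits"] * 4
is_man = [True] * 6 + [True, True, False, False]
population_shares = {"1000+ edits": 0.2, "1-4 edits": 0.8}  # assumed, not measured

weights = poststratification_weights(strata, population_shares)
raw = sum(is_man) / len(is_man)
adjusted = weighted_proportion(is_man, weights)
print(f"raw: {raw:.2f}, debiased: {adjusted:.2f}")
```

In this toy case the responses from very active editors are downweighted and those from low-edit-count editors are upweighted, which is the direction of adjustment described above. The size of the shift here is exaggerated by the invented numbers and says nothing about the 1-2% change observed in the real data.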

Survey Results

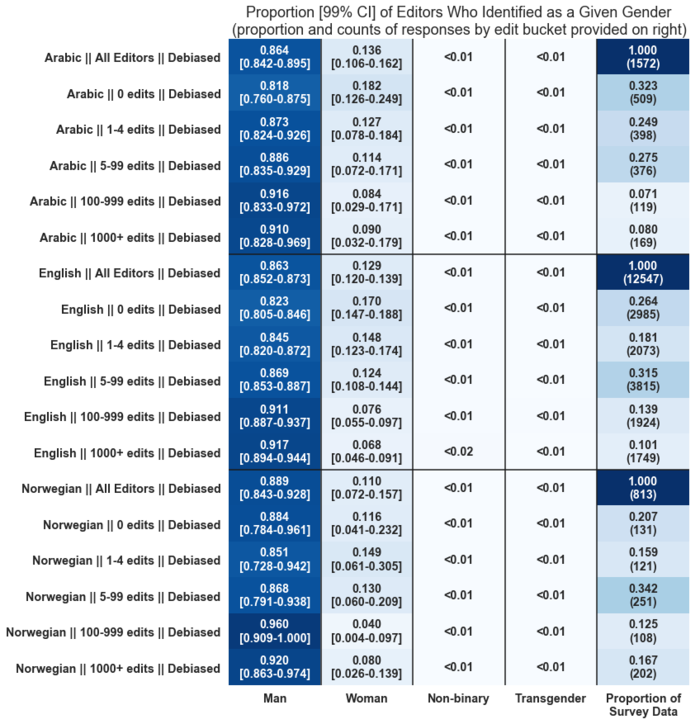

The results include the following categories of responses: Men, Women, Non-binary (based on coding of open-text responses), and Transgender (based on coding of open-text responses). I remove responses where the user indicated that they preferred not to take the survey or wrote information unrelated to gender identity in the open-text response. A small proportion of responses indicated some annoyance with this survey. That signal, along with feedback through the talk page, task T227793, and Village Pump posts (linked on the Phabricator task), is taken into consideration for the recommendations below. While we do not know exactly how many editors saw the survey, our best estimates based on user-agent and IP address are response rates of roughly 20%, 20%, and 15% for Arabic, English, and Norwegian respectively.

We had to balance privacy and inclusiveness when choosing how to report the data around non-binary and transgender users. We chose not to display the exact proportions of respondents given that the counts behind these proportions are relatively small. We still felt it important to include the categories and general proportions though given that these users had chosen to disclose this information via the survey and there is a very minimal risk of de-identification given that the survey ran for a week and no other information could pinpoint whether a given editor saw or responded to the survey.

Of note, across all three languages we see that the vast majority of editors identify as men. This corresponds with other efforts to study the gender gap on Wikipedia and is not surprising. The exact overlap in results with other approaches is discussed individually below.

The next takeaway is that the gender of the editor population is progressively more skewed in higher activity thresholds. This can be viewed in two ways: it indicates that the incoming editor populations are more balanced with respect to gender, which is positive, but it also indicates that the dropout rate for editors is higher among women.

The final takeaway from these results is that the gender balance of even new editors is still highly skewed towards men. This suggests that while improving tools and support is critical work to bring in a more diverse editor population, there is still a large gender gap for new editors. Addressing this would likely require efforts around awareness of editing (see Shaw and Hargittai[1] for more information regarding this pipeline).

Comparison to Alternative Approaches

There have been a few alternative approaches to measuring the gender balance of the editor population that are worth discussing as far as what we can infer about their benefits / drawbacks based on this survey.

User Preferences

For an extended discussion of the gender setting in Special:Preferences, see Phabricator task T61643. In general, users can set their preferred pronouns ("How do you prefer to be described?"), which defaults to gender-neutral but, for example in English, also allows for "He" and "She". This data is then surfaced in the user_properties table. There are three major concerns with using this data:

- it is opt-in so a very non-random population is likely to provide this information and specifically many new users have not set this information.

- given that it is not fully anonymous, more privacy-conscious individuals may not wish to provide this data

- while the default is gender-neutral, it really is only limited to a binary regarding gender

To gather this data for a given wiki, the following query was used:

    SELECT up_user, up_value, user_editcount, user_touched
    FROM user_properties LEFT JOIN user ON user_properties.up_user = user.user_id
    WHERE up_property = 'gender';

Script used to process the SQL query results:

    import argparse

    import pandas as pd


    def main():
        parser = argparse.ArgumentParser()
        parser.add_argument("--editor_gender_count_fn",
                            help="Output from SQL query on user_properties table")
        parser.add_argument("--date_threshold",
                            default="20010115",
                            help="Date in format YYYYMMDD. User must have touched their account on this date or later to be included.")
        args = parser.parse_args()

        # load in all data (keep user_touched as a string so timestamps compare lexicographically)
        df = pd.read_csv(args.editor_gender_count_fn, sep='\t', dtype={'user_touched': str})
        users_before_filtering = len(df)

        # trim down to only recently active users
        df = df[df['user_touched'].apply(filter_by_activity, args=(args.date_threshold,))]
        users_after_filtering = len(df)
        print("{0}: retaining users who have been active since {1}. Went from {2} users to {3}.".format(
            args.editor_gender_count_fn, args.date_threshold, users_before_filtering, users_after_filtering))

        # bucket users by edit count (to match QuickSurveysResponses EventLogging Schema)
        df['edit_bucket'] = df['user_editcount'].apply(edit_count_to_bucket)

        # write gender counts by edit bucket to CSV
        df.groupby(['edit_bucket'])['up_value'].value_counts().to_csv(
            args.editor_gender_count_fn.replace('.tsv', '_bucketed_since{0}.csv'.format(args.date_threshold)),
            header=True, sep=',')


    def filter_by_activity(user_touched, date_threshold):
        # user_touched is a YYYYMMDDHHMMSS string, so string comparison against a
        # YYYYMMDD threshold orders correctly; rows with a missing value are dropped
        return isinstance(user_touched, str) and user_touched >= date_threshold


    def edit_count_to_bucket(ec):
        if ec == 0:
            return '0 edits'
        elif ec < 5:
            return '1-4 edits'
        elif ec < 100:
            return '5-99 edits'
        elif ec < 1000:
            return '100-999 edits'
        elif ec >= 1000:
            return '1000+ edits'
        else:
            # reached when the edit count is missing (NaN fails every comparison)
            return 'unknown'


    if __name__ == "__main__":
        main()

User Preference Results

The tables below give, in order, the proportion of men (user preferences only offer a binary choice), the total number of users, and the response rate according to the user_properties table, based on users who have identified their gender and who meet certain filter criteria. Notably, the labels "Last Week", "Last Month", "Last Year", and "All Time" refer to the user_touched property and give a sense of how many users were active in a given time period.

English is the only one of these three languages with sufficient editor activity to achieve a reasonable sample size for timespans less than a year. This is reflected in the 99% confidence intervals in the first table. This would make it difficult to use the user_properties table to track changes in editor gender at more frequent intervals. English is also the only language with a sufficient number of newer users who have set the property, but the fact that less than 1% of users without edits set their gender property at all means that it is still highly unstable as a measure -- e.g., an edit-a-thon or educational initiative that encouraged new users to set the property might be enough to sway the counts.

In general, though, we see that there is good agreement between the numbers here and the survey results for highly active editors. This makes sense as a much higher proportion of highly-active editors have set the gender preference property. For less active editors though, we see that data from the user properties table is less accurate (and more variable) as compared to the survey results.
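The report does not state how the 99% confidence intervals in the first table were computed. The sketch below assumes a normal-approximation (Wald) interval clipped to the range 0 to 1, which is consistent with the zero-width and 1.000-capped intervals shown for small samples, but it is an assumption and may not reproduce the table values exactly. The function name and all inputs are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def proportion_ci(p, n, confidence=0.99):
    """Normal-approximation (Wald) interval for a proportion, clipped to [0, 1]."""
    if n <= 0:
        raise ValueError("n must be positive")
    # two-sided critical value, about 2.576 for 99% confidence
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * sqrt(p * (1 - p) / n)
    return max(0.0, p - margin), min(1.0, p + margin)


# Hypothetical inputs, not taken from the tables
large = proportion_ci(0.9, 400)      # large sample: narrow interval
small = proportion_ci(0.9, 10)       # small sample: upper bound clipped to 1.0
degenerate = proportion_ci(1.0, 2)   # every user in one category: zero-width interval

for label, (low, high) in [("n=400", large), ("n=10", small), ("n=2", degenerate)]:
    print(f"{label}: [{low:.3f}-{high:.3f}]")
```

The interval width shrinks with the square root of the sample size, which is why buckets with only a handful of users in a given week produce intervals too wide to track change over time.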

Proportion of men, with 99% confidence intervals in brackets:

| Language | Edit Bucket | Last Week | Last Month | Last Year | All Time |
|---|---|---|---|---|---|
| Arabic | 0 edits | 0.600 [0.084-1.000] | 0.711 [0.485-0.936] | 0.810 [0.747-0.874] | 0.822 [0.815-0.829] |
| Arabic | 1-4 edits | 0.846 [0.565-1.000] | 0.865 [0.709-1.000] | 0.839 [0.782-0.895] | 0.837 [0.827-0.847] |
| Arabic | 5-99 edits | 0.857 [0.692-1.000] | 0.882 [0.780-0.983] | 0.845 [0.806-0.884] | 0.843 [0.830-0.855] |
| Arabic | 100-999 edits | 0.778 [0.491-1.000] | 0.797 [0.645-0.948] | 0.853 [0.797-0.910] | 0.847 [0.818-0.877] |
| Arabic | 1000+ edits | 0.822 [0.708-0.937] | 0.868 [0.791-0.944] | 0.905 [0.858-0.952] | 0.908 [0.872-0.944] |
| Arabic | Any edit count | 0.813 [0.735-0.891] | 0.842 [0.793-0.892] | 0.849 [0.827-0.870] | 0.831 [0.826-0.835] |
| English | 0 edits | 0.809 [0.740-0.879] | 0.835 [0.802-0.869] | 0.849 [0.839-0.860] | 0.818 [0.816-0.820] |
| English | 1-4 edits | 0.831 [0.766-0.897] | 0.850 [0.817-0.884] | 0.862 [0.851-0.873] | 0.831 [0.829-0.834] |
| English | 5-99 edits | 0.872 [0.841-0.902] | 0.879 [0.861-0.896] | 0.875 [0.868-0.882] | 0.851 [0.848-0.853] |
| English | 100-999 edits | 0.887 [0.862-0.912] | 0.895 [0.879-0.910] | 0.905 [0.897-0.913] | 0.886 [0.881-0.891] |
| English | 1000+ edits | 0.931 [0.919-0.944] | 0.932 [0.922-0.943] | 0.930 [0.922-0.937] | 0.924 [0.918-0.930] |
| English | Any edit count | 0.901 [0.891-0.912] | 0.896 [0.889-0.904] | 0.882 [0.879-0.886] | 0.832 [0.831-0.833] |
| Norwegian | 0 edits | 1.000 [1.000-1.000] | 1.000 [1.000-1.000] | 0.900 [0.822-0.978] | 0.763 [0.740-0.787] |
| Norwegian | 1-4 edits | (No data) | 0.889 [0.602-1.000] | 0.881 [0.793-0.970] | 0.803 [0.777-0.830] |
| Norwegian | 5-99 edits | 0.933 [0.761-1.000] | 0.857 [0.707-1.000] | 0.852 [0.793-0.911] | 0.839 [0.816-0.861] |
| Norwegian | 100-999 edits | 0.786 [0.467-1.000] | 0.895 [0.759-1.000] | 0.906 [0.843-0.969] | 0.918 [0.880-0.957] |
| Norwegian | 1000+ edits | 0.930 [0.839-1.000] | 0.937 [0.864-1.000] | 0.930 [0.869-0.990] | 0.923 [0.870-0.976] |
| Norwegian | Any edit count | 0.909 [0.830-0.988] | 0.911 [0.856-0.966] | 0.886 [0.857-0.915] | 0.807 [0.795-0.818] |

Total number of users:

| Language | Edit Bucket | Last Week | Last Month | Last Year | All Time |
|---|---|---|---|---|---|
| Arabic | 0 edits | 10 | 38 | 311 | 24830 |
| Arabic | 1-4 edits | 13 | 37 | 335 | 10800 |
| Arabic | 5-99 edits | 35 | 76 | 665 | 6977 |
| Arabic | 100-999 edits | 18 | 59 | 307 | 1166 |
| Arabic | 1000+ edits | 90 | 151 | 284 | 467 |
| Arabic | Any edit count | 166 | 361 | 1902 | 44240 |
| English | 0 edits | 262 | 954 | 9605 | 365446 |
| English | 1-4 edits | 261 | 876 | 7973 | 150534 |
| English | 5-99 edits | 921 | 2702 | 18345 | 138356 |
| English | 100-999 edits | 1224 | 2858 | 9976 | 29079 |
| English | 1000+ edits | 2902 | 4196 | 8100 | 13400 |
| English | Any edit count | 5570 | 11586 | 53999 | 696815 |
| Norwegian | 0 edits | 2 | 12 | 110 | 2826 |
| Norwegian | 1-4 edits | 0 | 9 | 101 | 1907 |
| Norwegian | 5-99 edits | 15 | 42 | 283 | 2113 |
| Norwegian | 100-999 edits | 14 | 38 | 159 | 367 |
| Norwegian | 1000+ edits | 57 | 79 | 128 | 182 |
| Norwegian | Any edit count | 88 | 180 | 781 | 7395 |

Response rate:

| Language | Edit Bucket | Last Week | Last Month | Last Year | All Time |
|---|---|---|---|---|---|
| Arabic | 0 edits | 0.002 | 0.002 | 0.002 | 0.018 |
| Arabic | 1-4 edits | 0.013 | 0.010 | 0.010 | 0.041 |
| Arabic | 5-99 edits | 0.111 | 0.075 | 0.068 | 0.095 |
| Arabic | 100-999 edits | 0.231 | 0.299 | 0.347 | 0.297 |
| Arabic | 1000+ edits | 0.573 | 0.590 | 0.563 | 0.451 |
| Arabic | Any edit count | 0.028 | 0.017 | 0.010 | 0.026 |
| English | 0 edits | 0.005 | 0.005 | 0.004 | 0.014 |
| English | 1-4 edits | 0.017 | 0.017 | 0.015 | 0.020 |
| English | 5-99 edits | 0.073 | 0.078 | 0.064 | 0.044 |
| English | 100-999 edits | 0.219 | 0.224 | 0.194 | 0.133 |
| English | 1000+ edits | 0.415 | 0.402 | 0.363 | 0.274 |
| English | Any edit count | 0.057 | 0.037 | 0.018 | 0.019 |
| Norwegian | 0 edits | 0.003 | 0.005 | 0.004 | 0.009 |
| Norwegian | 1-4 edits | 0.000 | 0.014 | 0.016 | 0.019 |
| Norwegian | 5-99 edits | 0.106 | 0.100 | 0.080 | 0.053 |
| Norwegian | 100-999 edits | 0.250 | 0.281 | 0.250 | 0.161 |
| Norwegian | 1000+ edits | 0.425 | 0.407 | 0.378 | 0.239 |
| Norwegian | Any edit count | 0.090 | 0.045 | 0.019 | 0.016 |

Community Insights Surveys

The Community Insights Surveys are an annual survey distributed to editors (among other groups). There are a few salient aspects of this survey:

- It is distributed to a pre-selected set of editors who are randomly chosen from those meeting some criteria (see sampling strategy). Traditionally these criteria have required a relatively high level of activity, and thus the survey tends to represent the more veteran, highly active editor population. This makes it difficult to compare directly with our results because an editor's activity in a shorter time period does not clearly map to the edit buckets we use to separate users.

- The survey is distributed via MassMessage, which posts a link to the survey to each selected user's talk page. Newer users might be less aware of the invitation, though they are generally given several weeks to respond.

- Gender is just one of many questions asked in the survey. The survey can take quite some time to complete, which might lead to higher dropout among certain populations.

From the 2016-2017 surveys, we can compare the Arabic and English results with our recent surveys (Norwegian was not a language in the Community Insights surveys). We find that for Arabic, the numbers from the Community Insights survey look very similar to the numbers from this gender survey. The low sample size from the Community Insights Survey, though, means that we should be careful not to read too much into that result. For English, we find that the results from this gender survey are almost 10 percentage points more skewed towards men. A few potential explanations:

- Women may be more likely to respond to the Community Insights survey than men. Only 15% and 35% of active and highly active editors responded to their invitations for the 2016-2017 Community Insights Survey, respectively. Of that group, about 70% completed the survey.

- Our survey only ran for a week; it is possible that with more time to see and respond to the survey, we would have gotten different results.

In the table below, "ComIns 16-17" is the 2016-2017 Community Insights survey and "GenSur 19" is the 2019 gender survey described in this report.

| Language | Survey | Activity Level | % Men | Sample Size | Survey | Edit Bucket | % Men | Sample Size |
|---|---|---|---|---|---|---|---|---|
| Arabic | ComIns 16-17 | Very Highly Active | 94% | <100 | GenSur 19 | 1000+ edits | 91% | 100-200 |
| Arabic | ComIns 16-17 | Highly Active | 90% | <100 | GenSur 19 | 100-999 edits | 92% | 300-400 |
| English | ComIns 16-17 | Very Highly Active | 82% | 700-800 | GenSur 19 | 1000+ edits | 93% | 1700-1800 |
| English | ComIns 16-17 | Highly Active | 85% | 300-400 | GenSur 19 | 100-999 edits | 92% | 1900-2000 |

2013 Gender New User Surveys

The 2013 gender micro-surveys surveyed English Wikipedia editors as they registered for new accounts. The options provided in the survey were male, female, and opt-out. Among the respondents who did not opt out, 75% of new editors identified as men and 25% as women. These results are more balanced than what was found in our survey, even for users with 0 edits. There are at least two possible explanations for this:

- a disproportionate number of women who sign up for an account do not actually use it (while men, even if they do not edit, do stay logged in and as a result took our survey).

- the gender gap among new editors has not improved in the intervening six years.

Recommendations

Based on these results, I would make the following recommendations:

QuickSurveys

Before QuickSurveys is used in follow-up surveys, a few issues should be addressed:

- The tool should provide the ability to dismiss the survey without answering "Prefer not to say" -- e.g., an "X" or similar option for dismissal for the survey widget.

- The expectation of users who receive this survey is that it is tied to their account. Thus, when they open Wikipedia on a different device, they expect the same experience. In reality, the survey samples randomly by browser (and this likely cannot be changed without compromising user privacy). Thus, an editor might respond to the survey on one device and then see it again on another. This is incredibly frustrating and confusing for some users. The small minority of editors who constantly clear the local storage of their browser can also be resampled into the survey in the same browser after responding.

- The only good fix for this is running surveys with low enough sampling rates that it is unlikely that a reader will see the survey twice even if they clear cookies or switch browsers.

- There was also the desire to opt out completely from surveys like this. This would likely be a more complicated fix as I cannot think of anything client-side that would do it effectively. For instance, to opt out of a survey in your browser, you have to know the name of the survey. Enabling Do Not Track also opts you out from all surveys, but it has other implications beyond surveys on Wikipedia. Thus, it likely would have to be a change to the user settings, databases, etc. and we would have to determine if it only applied to QuickSurveys or other extensions as well.

- Finally, there is a low level of frustration among editors about the presence of any survey, so as always consideration should be given to alternative approaches before proceeding.
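The trade-off between sampling rate and repeat exposure can be made concrete with a small calculation. The sketch below assumes that each browser (or each fresh local-storage state) is sampled independently with the same probability, which is a simplification of how QuickSurveys behaves in practice. The function name and the example rates are hypothetical.

```python
def prob_sampled_at_least_twice(rate, browsers):
    """Probability that an editor using `browsers` independently sampled
    browsers (or storage resets) is sampled into the survey two or more times."""
    p_zero = (1 - rate) ** browsers
    p_one = browsers * rate * (1 - rate) ** (browsers - 1)
    return 1 - p_zero - p_one


# Hypothetical sampling rates for an editor who uses three browsers
for rate in (0.5, 0.1, 0.01):
    p = prob_sampled_at_least_twice(rate, browsers=3)
    print(f"sampling rate {rate:.2f}: P(seen twice or more) = {p:.4f}")
```

Under these assumptions, lowering the sampling rate reduces repeat exposure roughly quadratically, but it also reduces the number of responses linearly, which is why small wikis may not reach a usable sample size at a comfortable rate.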

The initial plans were to re-run these surveys only for new editors (who never would have seen this survey before). I do not think, with the exception of perhaps English, that the editor population is large enough to get a sufficient sample size without frustrating new editors who clear cookies or use multiple devices. This survey has been valuable as far as checking other approaches and providing some robust data on how the gender balance of editors changes depending on edit activity, but it does not appear to be the right tool going forward.

Validity of alternative approaches

Given the improvements that would be necessary to use QuickSurveys for these types of surveys, it is worth considering alternative approaches. Community Insights surveys (or other surveys conducted via talk page requests) in particular are a good source of data for the more experienced editor population. Depending on self-report via user preferences clearly has some large drawbacks as well for anything other than reporting on the gender gap among highly active editors. It is possible that this data would be more useful if new users were nudged to set the property (and potentially greater anonymity around the setting was offered to reduce privacy concerns). In the meantime, it is possible that it at least can be helpful for estimating the gender gap in other languages that have not been surveyed.

Reaching newer editors

To complement the relatively rich sources of data on more established editors, asking about gender as part of the user onboarding process would be the best approach. This could be as part of the welcome survey that has been piloted in several languages. That data could potentially then be used to set the user's gender preferences. Alternatively, we could also adopt the approach taken by the 2013 gender micro-surveys, which asked editors to disclose their gender identity when they first registered their account but was anonymous and did not record this information in the user preferences. This latter approach would preserve anonymity while not running the risk of frustrating new editors with a recurring survey request.

References

1. Shaw, Aaron; Hargittai, Eszter (1 February 2018). "The Pipeline of Online Participation Inequalities: The Case of Wikipedia Editing". Journal of Communication 68 (1): 143–168. ISSN 0021-9916. doi:10.1093/joc/jqx003.