Research:Wikipedia Knowledge Integrity Risk Observatory

This page documents a research project in progress.

Information may be incomplete and change as the project progresses.

Please contact the project lead before formally citing or reusing results from this page.

Wikipedia is one of the main repositories of free knowledge available today, with a central role in the Web ecosystem. For this reason, it can also be a battleground for actors trying to impose specific points of view or even to spread disinformation online. There is a growing need to monitor its "health", but this is not an easy task. Wikipedia exists in over 300 language editions and each project is maintained by a different community, with its own strengths, weaknesses and limitations. The output of this project will be a multi-dimensional observatory to monitor knowledge integrity risks across different Wikimedia projects.

Introduction

The Web has become the largest repository of knowledge ever known in just three decades. However, in recent years we have witnessed the proliferation of sophisticated strategies that heavily affect the reliability and trustworthiness of online information. Web platforms increasingly face misinformation problems caused by deception techniques such as astroturfing[1], harmful bots[2], computational propaganda[3], sockpuppetry[4] and data voids[5].

Wikipedia, the world’s largest online encyclopedia, in which millions of volunteer contributors create and maintain free knowledge, is not immune to the aforementioned problems. Disinformation is one of its most relevant challenges[6], and some editors devote a substantial amount of their time to patrolling tasks in order to detect vandalism and make sure that new contributions comply with community policies and guidelines[7]. Furthermore, Knowledge Integrity is one of the strategic programs of Wikimedia Research, with the goal of identifying and addressing threats to content on Wikipedia, increasing the capabilities of patrollers, and providing mechanisms for assessing the reliability of sources[8].

Many lessons have been learnt from fighting misinformation in Wikipedia[9] and analyses of recent cases like the 2020 United States presidential election have suggested that the platform was better prepared than major social media outlets[10]. However, there are Wikipedia editions in more than 300 languages, with very different contexts. To provide Wikipedia communities with an actionable monitoring system, this project will focus on creating a multi-dimensional observatory across different Wikimedia projects.

Methodology

Taxonomy of Knowledge Integrity Risks

Risks to knowledge integrity in Wikipedia can arise in many diverse forms. Inspired by recent work that proposed a taxonomy of knowledge gaps for Wikimedia projects, we reviewed publications by the Wikimedia Foundation, academic researchers and journalists that provide empirical evidence of knowledge integrity risks. We then classified these risks into a hierarchical structure of categories.

We initially differentiate between internal and external risks according to their origin. The former correspond to issues specific to the Wikimedia ecosystem while the latter involve activity from other environments, both online and offline.

For internal risks, we have identified the following categories focused on either community or content:

- Community capacity: The pool of resources available to the community. These resources relate to the size of the corresponding Wikipedia project, such as the volume of articles and active editors[6][7], but also to the number of editors with elevated user rights (e.g., admins, checkusers, oversighters) and the availability of specialized patrolling tools[7][11][12].

- Community governance: Situations and procedures involving decision-making within the community. The reviewed literature has identified risks like the unavailability of local rapid-response noticeboards on smaller wikis[7] or the abuse of blocking practices by admins[13][12][11].

- Community demographics: Characteristics of community members. Some analyses highlight that the lack of geographical diversity might favor nationalistic biases[13][12]. Other relevant dimensions are editors' account age and activity, since misbehavior is often observed in the editing patterns of newly created accounts[14][15][16], or of accounts that have stayed inactive for a long period to avoid certain patrolling systems, or that are no longer monitored and have been hacked[7].

- Content verifiability: Usage and reliability of sources in articles. This category is directly inspired by one of the three core content policies of Wikipedia, Verifiability (WP:V), which states that readers and editors must be able to check that information comes from a reliable source. It is addressed in several studies of content integrity[17][18][6].

- Content quality: Criteria used for the assessment of article quality. Since each Wikipedia language community decides its own standards and grading scale, some works have explored language-agnostic signals of content quality, such as the volume of edits and editors or the appearance of specific templates[19][18]. In fact, there might be distinctive cultural quality mechanisms, as these metrics do not always correlate with the featured status of articles[13].

- Content controversiality: Disputes between community members due to disagreements about the content of articles. Edit wars are the best-known phenomenon occurring when content becomes controversial[20][13], and they sometimes require articles to be protected[21].

For external risks, we have identified the following categories:

- Media: References and visits to the Wikipedia project from other external media on the Internet. An unusual amount of traffic to specific articles coming from social media sites or search engines may be a sign of coordinated vandalism[7].

- Geopolitics: Political context of the community and content of the Wikipedia project. Some well-resourced parties (e.g., corporations, nations) might have an interest in externally coordinated, long-term disinformation campaigns in specific projects[7][22].
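The two-level taxonomy above (origin first, then category) can be encoded as a small nested mapping, which makes it easy to tag each dashboard indicator with its category and origin. This is a minimal sketch; the dictionary layout and the helper names `origin_of` and `focus_of` are illustrative assumptions, not part of the project's tooling.

```python
from typing import Dict, Optional

# Hypothetical encoding of the taxonomy: origin -> category -> short scope note.
TAXONOMY: Dict[str, Dict[str, str]] = {
    "internal": {
        "community capacity": "pool of resources of the community",
        "community governance": "decision-making within the community",
        "community demographics": "characteristics of community members",
        "content verifiability": "usage and reliability of sources in articles",
        "content quality": "criteria for assessing article quality",
        "content controversiality": "disagreements about article content",
    },
    "external": {
        "media": "references and visits from external media",
        "geopolitics": "political context of the community and content",
    },
}


def origin_of(category: str) -> str:
    """Return "internal" or "external" for a known category name."""
    for origin, categories in TAXONOMY.items():
        if category in categories:
            return origin
    raise KeyError(f"unknown category: {category!r}")


def focus_of(category: str) -> Optional[str]:
    """Internal categories focus on "community" or "content"; external ones have no focus."""
    if origin_of(category) == "external":
        return None
    return category.split()[0]


# Example: tag a dashboard indicator with its place in the taxonomy.
indicator, category = "reverts ratio", "content controversiality"
print(indicator, origin_of(category), focus_of(category))
```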

Dashboard (v1)

The first version of the Wikipedia Knowledge Integrity Risk Observatory is built as a dashboard on the WMF's Superset instance. This system makes it easy to deploy visualizations built from various analytics data sources; however, viewing the dashboard requires wmf or nda LDAP access (for more details, see [1]).

The following indicators are available (those with the notation (T) are computed on a temporal basis, usually monthly):

Community capacity

- General statistics: pages count, articles count, edits count, images count, users count, active users count, admins count, ratio of admins to active users, average count of edits per page.

- Editors: active editors count (T), new active editors count (T), active admins count (T), active admins vs new active editors, number and percentage of editors from specific user groups.

- Edits: edit count (T), special user group edits ratio (T).

- Tools: AbuseFilter edits count (T), AbuseFilter hits (T), Spamblacklist hits (T).

Community governance

- Admins: addition of admins (T), removal of admins (T)

- Locks: globally locked editors count (T), locally locked editors count (T)

Community demographics

- Country: Gini index of country views count (T), Gini index of country edits count (T)

- Age: age of admins, age of active editors over time (T)
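The Gini index used for the country indicators summarizes how unevenly views or edits are spread across countries: 0 means every country contributes the same amount, and higher values (at most (n-1)/n for n countries) mean activity is concentrated in a few countries. A minimal sketch of the standard Gini coefficient over per-country counts follows; the exact formula used by the dashboard and its handling of countries with zero activity are assumptions, and the country counts are made up.

```python
from typing import Iterable


def gini(counts: Iterable[float]) -> float:
    """Standard Gini coefficient of non-negative counts (0 = perfectly even)."""
    values = sorted(counts)
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0
    # Rank-weighted sum over values sorted in ascending order (ranks start at 1).
    weighted = sum(rank * value for rank, value in enumerate(values, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


# Hypothetical monthly edit counts per country for one wiki, giving a (T) series.
edits_by_country = {
    "2022-03": {"DE": 9000, "AT": 700, "CH": 300},
    "2022-04": {"DE": 5000, "AT": 2500, "CH": 2500},
}
for month, by_country in edits_by_country.items():
    print(month, round(gini(by_country.values()), 3))
```

Whether countries with no views or edits are included changes n and therefore the index, so that choice should be fixed before comparing wikis.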

Content quality

- ORES: ORES average scores

- Articles: average number of editors per article, percentage of stub articles (fewer than 1,500 characters)

- Edits: special user group edits ratio (T), bot edits ratio (T), IP edits ratio (T), content edits ratio (T), minor edits ratio (T).
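Several of the content quality indicators above are simple proportions. The sketch below computes the stub percentage and the per-type edit ratios for one wiki; the `Edit` record, its field names and the definition of a "content" edit as an edit in a content namespace are assumptions. MediaWiki's `page_len` field counts bytes rather than characters, so the sketch just takes article lengths in whatever unit the indicator uses.

```python
from dataclasses import dataclass
from typing import Dict, List

STUB_MAX_LENGTH = 1500  # articles shorter than this count as stubs


@dataclass
class Edit:
    is_bot: bool      # made by an account in the bot group
    is_ip: bool       # made by an unregistered (IP) editor
    is_minor: bool    # flagged as a minor edit
    is_content: bool  # assumption: edit in a content namespace


def stub_percentage(article_lengths: List[int]) -> float:
    """Percentage of articles whose length is below STUB_MAX_LENGTH."""
    if not article_lengths:
        return 0.0
    stubs = sum(1 for length in article_lengths if length < STUB_MAX_LENGTH)
    return 100 * stubs / len(article_lengths)


def edit_ratios(edits: List[Edit]) -> Dict[str, float]:
    """Share of edits with each flag; flags overlap, so ratios need not sum to 1."""
    if not edits:
        return {}
    n = len(edits)
    return {
        "bot": sum(e.is_bot for e in edits) / n,
        "ip": sum(e.is_ip for e in edits) / n,
        "minor": sum(e.is_minor for e in edits) / n,
        "content": sum(e.is_content for e in edits) / n,
    }
```

To obtain the temporal (T) series, `edit_ratios` would be called once per month on that month's edits.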

Content verifiability

TBD

Content controversiality

- Reverts: reverts ratio (T), IP edits reverts ratio (T), IP edits reverts ratio vs active editors, IP edits reverts ratio vs active admins

- Protected pages: percentage of protected pages

Media

- Traffic: traffic from social media (T) extracted from the Social media traffic report pilot, traffic from search engines (T).

Geopolitics

- Press freedom: viewing rate vs. press freedom index, editing share vs. press freedom index

Dashboard (v2)

The first version of the Wikipedia Knowledge Integrity Risk Observatory allows exploring data and metrics from different categories of the taxonomy. However, such a broad set of indicators makes it difficult to prioritise Wikipedia language editions according to their knowledge integrity risk status. For this reason, subsequent efforts have focused on building an alternative dashboard that reduces risk measurement to a few indices.

Knowledge integrity risks are captured through the volume of high-risk revisions (identified with a novel ML-based service that predicts reverts on Wikipedia) and their revert ratios. A dataset was built with the revisions of all Wikipedias in 2022, including their revert risk scores. The distribution of the scores for reverted and non-reverted revisions is presented below.

Given the differences found in the data exploration, a separate high-risk threshold needs to be set for each Wikipedia. Classification evaluation metrics (precision, recall, accuracy, F1) were computed in each wiki for score thresholds from 0 to 1 in steps of 0.01. This analysis was extended by splitting the dataset by editor type (IP editors vs. registered editors) in order to examine whether the thresholds are consistent. The expected bias against IP edits is observed: both reverted and non-reverted IP edits receive high revert risk scores. In fact, the AUC of the language-agnostic ML model is higher for registered user edits than for IP edits (see illustrative figures below).
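The per-wiki threshold sweep described above can be sketched as follows. A revision is predicted as "will be reverted" when its score is strictly above the threshold, following the high-risk rule stated below; the function names and the input format (parallel lists of scores and observed revert labels for a single wiki) are assumptions.

```python
from typing import Dict, List, Sequence


def metrics_at(scores: Sequence[float], reverted: Sequence[bool],
               threshold: float) -> Dict[str, float]:
    """Precision, recall, accuracy and F1 when predicting a revert for score > threshold."""
    if not scores:
        raise ValueError("no revisions to evaluate")
    tp = fp = tn = fn = 0
    for score, was_reverted in zip(scores, reverted):
        predicted = score > threshold
        if predicted and was_reverted:
            tp += 1
        elif predicted:
            fp += 1
        elif was_reverted:
            fn += 1
        else:
            tn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall,
            "accuracy": (tp + tn) / len(scores), "f1": f1}


def sweep(scores: Sequence[float], reverted: Sequence[bool]) -> Dict[float, Dict[str, float]]:
    """Evaluate thresholds 0, 0.01, ..., 1 (built from integers to avoid float drift)."""
    thresholds: List[float] = [round(i / 100, 2) for i in range(101)]
    return {t: metrics_at(scores, reverted, t) for t in thresholds}
```

Running `sweep` separately for each wiki, and for each editor-type subset, produces the curves that the analysis compares.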

Note that the revert risk model works particularly well at detecting registered user edits that will NOT be reverted. For registered user edits that will be reverted, the model seems to be more effective for large wikis than for medium-size wikis (see illustrative figures below).

This could be an indirect effect of an imbalance in the training dataset (English Wikipedia and other very large wikis might be over-represented) and/or of NOT including wiki_db as a model feature. Furthermore, some revert activity seems not to be captured in some wikis, such as cawiki. Examining the data for this case revealed that revert risk scores were affected by a user reverting their own bot activity (https://ca.wikipedia.org/w/index.php?title=Especial:Contribucions/Pere_prlpz&target=Pere+prlpz&offset=20220503160427&limit=500). For that reason, the dataset of registered editors' revisions was split again to distinguish bot edits from non-bot user edits, which revealed that bot edits tend to exhibit low revert risk scores, regardless of whether they are later reverted or not (see illustrative figures below).
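The comparison across editor segments (IP edits, registered bot edits, registered non-bot edits) amounts to computing the ROC AUC separately for each group. The sketch below uses the pairwise (Mann-Whitney) formulation of AUC: the probability that a reverted revision scores higher than a non-reverted one, with ties counted as one half. It is quadratic in group size, so a rank-based implementation would be preferable for a full year of revisions. The revision dictionary keys are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def auc(scores: Sequence[float], reverted: Sequence[bool]) -> float:
    """ROC AUC as P(score of a reverted rev > score of a non-reverted rev), ties = 0.5."""
    pos = [s for s, y in zip(scores, reverted) if y]
    neg = [s for s, y in zip(scores, reverted) if not y]
    if not pos or not neg:
        return float("nan")  # undefined unless both classes are present
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def segment(rev: dict) -> str:
    """Editor-type segment used to split the dataset."""
    if rev["is_ip"]:
        return "ip"
    return "registered_bot" if rev["is_bot"] else "registered_nonbot"


def auc_by_segment(revisions: List[dict]) -> Dict[str, float]:
    """AUC of the revert risk score within each editor segment."""
    groups: Dict[str, Tuple[List[float], List[bool]]] = defaultdict(lambda: ([], []))
    for rev in revisions:
        scores, labels = groups[segment(rev)]
        scores.append(rev["score"])
        labels.append(rev["reverted"])
    return {name: auc(s, y) for name, (s, y) in groups.items()}
```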

Given these findings, the knowledge integrity risk of a Wikipedia language edition is operationalized through the revert risk scores of revisions by registered non-bot editors. In particular, a revision by a registered non-bot editor is considered high risk if its revert risk score exceeds the threshold at which the model accuracy is maximum for that wiki. The table below shows the thresholds for a subset of large wikis.

| wiki | enwiki | frwiki | eswiki | dewiki | ruwiki | jawiki | itwiki | zhwiki | arwiki | kowiki | plwiki | hewiki | idwiki | cawiki | nlwiki |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| threshold | 0.97 | 0.96 | 0.92 | 0.95 | 0.94 | 0.98 | 0.96 | 0.98 | 0.78 | 0.98 | 0.95 | 0.93 | 0.98 | 0.93 | 0.94 |
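The high-risk rule can be written down directly. The sketch below hard-codes the thresholds from the table above, picks a threshold from a sweep of accuracies (ties broken towards the lowest threshold, which is an assumption), and classifies a single revision. Revisions by IP editors or bots return `None` because the rule only applies to registered non-bot editors. The function names are hypothetical.

```python
from typing import Dict, Optional

# Thresholds reported for a subset of large wikis.
THRESHOLDS: Dict[str, float] = {
    "enwiki": 0.97, "frwiki": 0.96, "eswiki": 0.92, "dewiki": 0.95, "ruwiki": 0.94,
    "jawiki": 0.98, "itwiki": 0.96, "zhwiki": 0.98, "arwiki": 0.78, "kowiki": 0.98,
    "plwiki": 0.95, "hewiki": 0.93, "idwiki": 0.98, "cawiki": 0.93, "nlwiki": 0.94,
}


def pick_threshold(accuracy_by_threshold: Dict[float, float]) -> float:
    """Threshold with maximum accuracy; ties go to the lowest threshold (assumption)."""
    return min(accuracy_by_threshold, key=lambda t: (-accuracy_by_threshold[t], t))


def is_high_risk(wiki: str, score: float, is_ip: bool, is_bot: bool,
                 thresholds: Dict[str, float] = THRESHOLDS) -> Optional[bool]:
    """True/False for registered non-bot revisions; None when the rule does not apply."""
    if is_ip or is_bot:
        return None
    return score > thresholds[wiki]
```

Returning `None` rather than `False` keeps out-of-scope revisions distinguishable from registered non-bot revisions that simply fall below the threshold.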

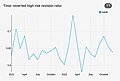

Once the thresholds were established, the dataset was extended by computing the revert risk score of all revisions of all Wikipedias in the period 2021-2022. Using the corresponding threshold of each wiki, three metrics were computed for each (month, wiki) pair: reverted revision ratio, high-risk revision ratio and reverted high-risk revision ratio.
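The three monthly metrics can be sketched as a single aggregation over revisions grouped by (month, wiki). This assumes each revision already carries a `reverted` flag and a `high_risk` flag (score above its wiki's threshold). The definition of the reverted high-risk revision ratio is an assumption: here it is the share of high-risk revisions that were reverted, but it could equally be defined over all revisions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Tuple


class Revision(NamedTuple):
    month: str       # e.g. "2022-03"
    wiki: str        # e.g. "ruwiki"
    reverted: bool   # the revision was later reverted
    high_risk: bool  # revert risk score above the wiki's threshold


def monthly_metrics(revisions: Iterable[Revision]) -> Dict[Tuple[str, str], Dict[str, float]]:
    # Per (month, wiki): [total, reverted, high risk, reverted and high risk]
    counts: Dict[Tuple[str, str], List[int]] = defaultdict(lambda: [0, 0, 0, 0])
    for rev in revisions:
        c = counts[(rev.month, rev.wiki)]
        c[0] += 1
        c[1] += rev.reverted
        c[2] += rev.high_risk
        c[3] += rev.reverted and rev.high_risk
    metrics = {}
    for key, (total, reverted, high, reverted_high) in counts.items():
        metrics[key] = {
            "reverted_revision_ratio": reverted / total,
            "high_risk_revision_ratio": high / total,
            # Assumption: share of high-risk revisions that were reverted.
            "reverted_high_risk_revision_ratio": reverted_high / high if high else 0.0,
        }
    return metrics
```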

The images above are charts from the new dashboard that display the monthly values of these three metrics for Russian Wikipedia. Peaks in the high-risk revision ratio are observed from March 2022, following the start of the Russian invasion of Ukraine. To facilitate the interpretation of these metrics, filterable tables of high-risk revisions have been added to the Superset dashboard, including aggregations by editor and by page. As one could expect, the article with the largest number of high-risk revisions in Russian Wikipedia is "Russian invasion of Ukraine" (Вторжение_России_на_Украину_(с_2022)).

| month | wiki | page | revisions | high-risk revisions | high-risk revision ratio | avg. revert risk score |
|---|---|---|---|---|---|---|
| 2022-03 | ruwiki | Вторжение_России_на_Украину_(с_2022) | 3517 | 542 | 0.15 | 0.54 |
| 2022-04 | ruwiki | Вторжение_России_на_Украину_(с_2022) | 744 | 283 | 0.38 | 0.91 |
| 2021-04 | ruwiki | Протесты_в_поддержку_Алексея_Навального_(2021) | 409 | 275 | 0.67 | 0.94 |
| 2022-05 | ruwiki | Вторжение_России_на_Украину_(с_2022) | 341 | 260 | 0.76 | 0.95 |
| 2021-02 | ruwiki | Протесты_в_поддержку_Алексея_Навального_(2021) | 569 | 178 | 0.31 | 0.88 |
| 2022-06 | ruwiki | Вторжение_России_на_Украину_(с_2022) | 225 | 80 | 0.36 | 0.9 |
| 2021-03 | ruwiki | Протесты_в_поддержку_Алексея_Навального_(2021) | 122 | 56 | 0.46 | 0.92 |
| 2021-05 | ruwiki | Протесты_в_поддержку_Алексея_Навального_(2021) | 66 | 29 | 0.44 | 0.92 |

Page titles: Вторжение_России_на_Украину_(с_2022) is "Russian invasion of Ukraine (since 2022)"; Протесты_в_поддержку_Алексея_Навального_(2021) is "Protests in support of Alexei Navalny (2021)".
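The per-page aggregation in the table above can be reproduced from revision-level data roughly as follows. This sketch assumes the average revert risk is taken over all revisions of the page in that month and that rows are ranked by high-risk revision count; the tuple layout and the function name `page_table` are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Iterable, List, Tuple

# (month, wiki, page, revert risk score, high-risk flag)
Rev = Tuple[str, str, str, float, bool]


def page_table(revisions: Iterable[Rev]) -> List[tuple]:
    """Per (month, wiki, page) rows, sorted by high-risk revision count (descending)."""
    groups = defaultdict(list)
    for month, wiki, page, score, high_risk in revisions:
        groups[(month, wiki, page)].append((score, high_risk))
    rows = []
    for (month, wiki, page), items in groups.items():
        count = len(items)
        high = sum(1 for _, flag in items if flag)
        rows.append((month, wiki, page, count, high,
                     round(high / count, 2),                        # high-risk revision ratio
                     round(mean(score for score, _ in items), 2)))  # avg. revert risk score
    rows.sort(key=lambda row: row[4], reverse=True)
    return rows
```

As a consistency check, the first row of the table has 542 high-risk revisions out of 3517, and round(542 / 3517, 2) gives the reported ratio of 0.15.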

The current version of the dashboard is a prototype to guide Disinformation Specialists at the Trust & Safety team of the Wikimedia Foundation. Following the WMF Guiding Principles of openness, transparency and accountability, we expect to release the Wikipedia Knowledge Integrity Risk Observatory as a dashboard available to the global movement of volunteers. Therefore, future work will focus on designing an open technological infrastructure to provide Wikimedia communities with valuable information on knowledge integrity.

References

- Zhang, Jerry and Carpenter, Darrell and Ko, Myung. 2013. Online astroturfing: A theoretical perspective.

- Ferrara, Emilio and Varol, Onur and Davis, Clayton and Menczer, Filippo and Flammini, Alessandro. 2016. The rise of social bots. Communications of the ACM 59, 7, 96–104.

- Woolley, Samuel C and Howard, Philip N. 2018. Computational propaganda: political parties, politicians, and political manipulation on social media. Oxford University Press.

- Kumar, Srijan and Cheng, Justin and Leskovec, Jure and Subrahmanian, VS. 2017. An army of me: Sockpuppets in online discussion communities. In Proceedings of the 26th International Conference on World Wide Web. 857–866.

- Golebiewski, Michael and Boyd, Danah. 2018. Data voids: Where missing data can easily be exploited.

- Saez-Trumper, Diego. 2019. Online disinformation and the role of wikipedia. arXiv preprint arXiv:1910.12596

- Morgan, Jonathan. 2019. Research:Patrolling on Wikipedia. Report-Meta

- Zia, Leila and Johnson, Isaac and Mansurov, Bahodir and Morgan, Jonathan and Redi, Miriam and Saez-Trumper, Diego and Taraborelli, Dario. 2019. Knowledge Integrity. https://doi.org/10.6084/m9.figshare.7704626

- Kelly, Heather. 2021. On its 20th birthday, Wikipedia might be the safest place online. https://www.washingtonpost.com/technology/2021/01/15/wikipedia-20-year-anniversary/

- Morrison, Sara. 2020. How Wikipedia is preparing for Election Day. https://www.vox.com/recode/2020/11/2/21541880/wikipedia-presidential-election-misinformation-social-media

- Song, Victoria. 2020. A Teen Threw Scots Wiki Into Chaos and It Highlights a Massive Problem With Wikipedia. https://www.gizmodo.com.au/2020/08/a-teen-threw-scots-wiki-into-chaos-and-it-highlights-a-massive-problem-with-wikipedia

- Sato, Yumiko. 2021. Non-English Editions of Wikipedia Have a Misinformation Problem. https://slate.com/technology/2021/03/japanese-wikipedia-misinformation-non-english-editions.html

- Rogers, Richard and Sendijarevic, Emina and others. 2012. Neutral or National Point of View? A Comparison of Srebrenica articles across Wikipedia’s language versions. In unpublished conference paper, Wikipedia Academy, Berlin, Germany, Vol. 29

- Kumar, Srijan and Spezzano, Francesca and Subrahmanian, VS. 2015. VEWS: A Wikipedia vandal early warning system. In Proceedings of the 21st ACM SIGKDD international conference on knowledge discovery and data mining. 607–616.

- Kumar, Srijan and West, Robert and Leskovec, Jure. 2016. Disinformation on the web: Impact, characteristics, and detection of wikipedia hoaxes. In Proceedings of the 25th international conference on World Wide Web. 591–602.

- Joshi, Nikesh and Spezzano, Francesca and Green, Mayson and Hill, Elijah. 2020. Detecting Undisclosed Paid Editing in Wikipedia. In Proceedings of The Web Conference 2020. 2899–2905.

- Redi, Miriam and Fetahu, Besnik and Morgan, Jonathan and Taraborelli, Dario. 2019. Citation needed: A taxonomy and algorithmic assessment of Wikipedia’s verifiability. In The World Wide Web Conference. 1567–1578.

- Lewoniewski, Włodzimierz and Węcel, Krzysztof and Abramowicz, Witold. 2019. Multilingual ranking of Wikipedia articles with quality and popularity assessment in different topics. Computers 8, 3, 60.

- Lewoniewski, Włodzimierz and Węcel, Krzysztof and Abramowicz, Witold. 2017. Relative quality and popularity evaluation of multilingual Wikipedia articles. In Informatics, Vol. 4. Multidisciplinary Digital Publishing Institute, 43.

- Yasseri, Taha and Spoerri, Anselm and Graham, Mark and Kertész, János. 2014. The most controversial topics in Wikipedia. Global Wikipedia: International and cross-cultural issues in online collaboration 25, 25–48.

- Spezzano, Francesca and Suyehira, Kelsey and Gundala, Laxmi Amulya. 2019. Detecting pages to protect in Wikipedia across multiple languages. Social Network Analysis and Mining 9, 1, 1–16.

- Shubber, Kadhim. 2021. Russia caught editing Wikipedia entry about MH17. https://www.wired.co.uk/article/russia-edits-mh17-wikipedia-article