Research talk:HHVM newcomer engagement experiment/Work log/2014-11-13

Thursday, November 13, 2014

We've now had a week to observe behavior, so it's time to look at time spent editing. I'm regenerating stats. --Halfak (WMF) (talk) 16:37, 13 November 2014 (UTC)

Here's the stats table.

| bucket | ui_type | n | editing.k | second_session.k | active.k | week_revisions.geo.mean | week_main_revisions.geo.mean | week_session_seconds.geo.mean |
|---|---|---|---|---|---|---|---|---|
| hhvm | desktop | 24732 | 8488 | 2452 | 1679 | 0.5302562 | 0.3674562 | 7.605588 |
| php5 | desktop | 25052 | 8612 | 2563 | 1821 | 0.5459564 | 0.3731869 | 7.747655 |
| hhvm | mobile | 8973 | 3026 | 612 | 397 | 0.4477305 | 0.4118277 | 6.223511 |
| php5 | mobile | 8941 | 2944 | 637 | 376 | 0.4383071 | 0.4026511 | 5.995704 |

Now to get the graphs uploaded. --Halfak (WMF) (talk) 20:29, 13 November 2014 (UTC)

Proportion measures

Here, as last time, we see no strong effects. The only difference that might be significant is the rate of editor activation on desktop, but again, it goes in the opposite direction from what we'd expect.

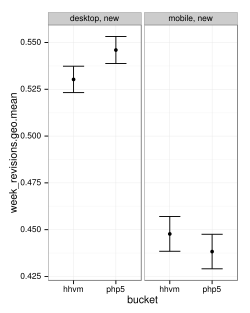
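Whether the desktop difference is plausibly significant can be checked with a pooled two-proportion z-test on the counts in the stats table. This is a minimal sketch, not necessarily the test used in this analysis; the function name `two_proportion_z_test` is hypothetical, and reading "editor activation" as the `active.k` column is an assumption.

```python
import math


def two_proportion_z_test(k1: int, n1: int, k2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test (hypothetical helper, for illustration).

    Tests H0: p1 == p2 where p1 = k1/n1 and p2 = k2/n2.
    Returns (z, two_sided_p).
    """
    p1, p2 = k1 / n1, k2 / n2
    # Under H0 both groups share one rate, estimated from the pooled counts.
    pooled = (k1 + k2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Desktop rows of the stats table: hhvm vs. php5, using active.k / n
# (assumes active.k is the "activation" measure discussed above).
z, p = two_proportion_z_test(1679, 24732, 1821, 25052)
print(f"z = {z:.2f}, two-sided p = {p:.3f}")
```

A negative z means the hhvm rate is below the php5 rate. Since several proportions and scalar measures are compared at once, a p-value just under 0.05 for one of them is weak evidence on its own; a multiple-comparison correction such as Bonferroni would make that explicit.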

Scalar measures

Again, we don't see any clear differences, except perhaps a small, counter-intuitive one in the raw number of revisions saved, which washes out when we filter for article edits and productive edits. --Halfak (WMF) (talk) 20:54, 13 November 2014 (UTC)
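The `*.geo.mean` columns summarize per-user counts that are heavily skewed, with many newcomers saving few or no edits. A common way to take a geometric mean when zeros are present is to add one before taking logs and subtract it afterwards. The sketch below assumes that convention, which this log does not confirm; `geo_mean_plus_one` and the toy data are hypothetical.

```python
import math


def geo_mean_plus_one(values: list[float]) -> float:
    """Geometric mean of non-negative counts, offset by one so zeros are allowed.

    Computes exp(mean(log(x + 1))) - 1, which is 0 when every value is 0.
    (Hypothetical helper; the +1 offset is an assumed convention.)
    """
    if not values:
        raise ValueError("need at least one value")
    # log1p(v) == log(v + 1), computed accurately for small v.
    log_sum = sum(math.log1p(v) for v in values)
    # expm1(x) == exp(x) - 1, undoing the offset.
    return math.expm1(log_sum / len(values))


# Hypothetical toy data: revisions saved in the first week by five new users.
week_revisions = [0, 0, 1, 3, 12]
print(geo_mean_plus_one(week_revisions))
```

Working on the log scale keeps a few very prolific newcomers from dominating the summary, which the arithmetic mean would not do.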

How could this be?

How is it that a dramatic performance improvement that substantially affects the editing workflow neither increased the number of edits saved nor reduced the time spent completing the same number of edits?

- Bug

  - This hypothesis must always be entertained. It could be a bug in the treatment code or in the analysis code. Given the review of the treatment code and the multiple strategies used to measure effects (some parametric, others nonparametric), this seems unlikely.

- Small effect

  - The effect exists and is positive, but it is so small that it is difficult to detect beyond the noise. If the effect is this small, it's probably negligible. That's a bummer.

- Lower bound on faster == better

  - It could be that faster is better, but only up to a certain point. Edits take 1-7 minutes on average, so improving save time by a few seconds may not change the pattern much. However, I suspect that if saving were 5-10 seconds slower, we'd see a loss. The relationship may also have steps worth considering. Recent work on the rhythms of human behavior in online systems suggests that activities tend to cluster at certain time intervals[1]. Operations happen at 3-15 seconds, actions at 1-7 minutes, and activity sessions occur between 1 day and 1 week apart.

  - Applying this to Wikipedia, it is common to set aside time on a regular basis to spend doing “wiki-work”. Activity Theory would conceptualize this wiki-work overall as an activity and each unit of time spent engaging in the wiki-work as an “activity session”. The actions within an activity session would manifest as individual edits to wiki pages representing contributions to encyclopedia articles, posts in discussions and messages sent to other Wikipedia editors. These edits involve a varied set of operations: typing of characters, copy-pasting the details of reference materials, scrolling through a document, reading an argument and eventually, clicking the “Save” button.

  - This could imply that speeding up "operations" may have a more substantial effect than speeding up the last step in an "action". So we might see more dramatic improvements by making it faster to perform sub-edit operations like looking up references, formatting, or previewing the current edit. --Halfak (WMF) (talk) 21:21, 13 November 2014 (UTC)
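One way to put a number on the "Small effect" hypothesis is to ask how large a difference in a proportion these sample sizes could reliably detect. The sketch below uses the standard normal-approximation formula for a two-sided test; the α = 0.05 and 80% power thresholds, the function name `min_detectable_diff`, and the choice of the pooled desktop `active.k` rate as the baseline are all assumptions for illustration.

```python
from statistics import NormalDist


def min_detectable_diff(p: float, n1: int, n2: int,
                        alpha: float = 0.05, power: float = 0.8) -> float:
    """Approximate smallest difference in proportions a two-sided z-test detects.

    MDE = (z_{1 - alpha/2} + z_{power}) * sqrt(p * (1 - p) * (1/n1 + 1/n2)),
    treating both groups as sharing the baseline proportion p.
    (Hypothetical helper; thresholds are assumed defaults.)
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    se = (p * (1 - p) * (1 / n1 + 1 / n2)) ** 0.5
    return (z_alpha + z_power) * se


# Desktop sample sizes from the stats table; baseline = pooled active.k / n.
baseline = (1679 + 1821) / (24732 + 25052)
mde = min_detectable_diff(baseline, 24732, 25052)
print(f"baseline {baseline:.3f}, detectable difference about {mde:.4f}")
```

A true effect smaller than the returned value (as an absolute difference in proportion) would usually be missed at these sample sizes, which is the situation the "Small effect" hypothesis describes. The mobile groups are roughly a third the size, so for the same baseline rate their detectable difference would be larger.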