Research:Post-edit feedback

The purpose of this experiment is to test whether various types of positive feedback after submission of an edit increase the productivity and retention of Wikipedia editors.

Background

Previous research has demonstrated that feedback mechanisms have a positive effect on incentivizing repeated contributions in collaborative communities.[1][2] According to the currently available research literature, providing feedback to wiki editors, whether that feedback is a simple confirmation, an expression of gratitude, or user contribution statistics, offers a potential retention incentive, or a "feedback mechanism that encourage individuals to continue contributing over time".[1]

Research questions

In this experiment, we are testing the following hypotheses:

- Providing any kind of feedback after a user successfully edits will motivate them to edit more, as compared to users who receive no feedback post-edit.

- Providing post-edit feedback specific to the user and their experience will be more effective at encouraging further participation, compared to generalized feedback or none at all.

Once we have tested the hypotheses above, we would also like to examine more specific hypotheses and questions about how much editors are motivated by post-edit feedback:

- Providing positive feedback will increase the number of edits a contributor makes in an edit session because their contributions are being acknowledged as they edit.

- Providing positive feedback will shorten the time it takes new contributors to reach editing milestones, especially the 10, 50, and 100 edit marks because their contributions are being acknowledged. In the historical feedback test, the value of achieving contribution levels is reflected back to the editor.

- Providing positive feedback improves the long-term retention of editors over 30, 60, and 90 days because new editors will be receiving constant affirmation for their contributions.

- At what stage in an editor's lifecycle is feedback most effective, as measured by edit count?

Assumptions

- Post-edit feedback is most likely to have an impact on newbie editors, who have not yet come to expect that they will receive no feedback.

- Newbie editors may not always know that they've saved an edit without receiving feedback.

- If we choose to display post-edit feedback for all edits, users will develop "blindness" to the message over time.

- Historical feedback, social ranking, and similar forms of more contextual, intermittent feedback will be more effective than simple repetitive gratitude.

In order to verify that bucketing and data collection are functioning as required, we need...

- To be able to create accounts, in order to see that newly-registered editors (and only new editors) are being bucketed into control or experimental conditions.

- To be able to make edits to any number or type of pages, and ensure all edits (not a sample) are logged along with normal revision data like who made them, the page, and the time. This data should include whether the editor was in the control or one of the two experimental conditions.

See also: Data collection below

Overall metrics

- The following will be collected for each editor in the experiment.

- The event referred to can be any type of feedback, including none at all.

- Each metric may be measured from a point before the experiment to some point after its conclusion. Let the interval be defined by an offset X before the experiment and an offset Y after its conclusion, where X and Y may be tuned for each metric.

- Pre-Event Edit Counts (non-negative integer): edit counts over a fixed period before the event (where applicable).

- Post-Event Edit Counts (non-negative integer): edit counts over a fixed period after the event.

- Time to Milestone (non-negative integer): the time taken by an editor to reach milestones (e.g., n edits) after the event.

- Edits per session (non-negative real): rate of editing per session.

- k/n-Retention (binary): whether an editor has made at least k edit(s) at minimum n days after the event.
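
As an illustration of how three of these metrics could be computed from a list of edit timestamps, here is a minimal sketch. The function names are hypothetical, and two readings are assumptions rather than definitions taken from this page: time to milestone is expressed in whole seconds, and k/n-retention counts only edits made at or after n days past the event.

```python
from datetime import datetime, timedelta
from typing import Optional, Sequence


def post_event_edit_count(edits: Sequence[datetime], event: datetime,
                          period: timedelta) -> int:
    """Number of edits in the fixed period [event, event + period)."""
    return sum(1 for t in edits if event <= t < event + period)


def time_to_milestone(edits: Sequence[datetime], event: datetime,
                      n: int) -> Optional[int]:
    """Seconds from the event to the n-th edit after it (n >= 1).

    Returns None if the editor never reaches n edits after the event.
    """
    after = sorted(t for t in edits if t >= event)
    if len(after) < n:
        return None
    return int((after[n - 1] - event).total_seconds())


def kn_retention(edits: Sequence[datetime], event: datetime,
                 k: int, n_days: int) -> bool:
    """True if at least k edits were made n_days or more after the event."""
    threshold = event + timedelta(days=n_days)
    return sum(1 for t in edits if t >= threshold) >= k
```

Pre-event edit counts follow the same pattern as `post_event_edit_count`, with the window placed before the event instead of after it.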

Methodology

Activation

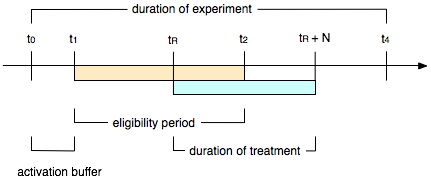

- each experiment will honor an initial activation buffer period of a few hours after the deployment;

- the eligible population of users for each experiment will be determined by considering users registered within a predefined eligibility period; the beginning of the eligibility period will fall after the end of the activation buffer;

- for this experiment, there is no gap between the user registration time and the beginning of the treatment (the treatment starts immediately upon registration);

- the treatment will remain active for a minimum duration for all eligible users, regardless of when they registered an account.

| Symbol | Meaning |
|---|---|
| t0 | deployment completed |
| t1 | beginning of eligibility period |
| t2 | end of eligibility period |
| [t0,t1] | activation buffer |
| [t1,t2] | eligibility period |
| tR | registration time |
| [tR, tR+N] | duration of treatment |
| tR+N | end of treatment |
| t4 = t2 + N | end of the experiment |
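
The timeline in the table follows from the deployment time and three durations. The sketch below shows that derivation; it is not the experiment's deployed code, and the class name `ExperimentWindow` and its field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ExperimentWindow:
    """Hypothetical container for the timeline parameters in the table above."""
    t0: datetime            # deployment completed
    buffer: timedelta       # length of the activation buffer [t0, t1]
    eligibility: timedelta  # length of the eligibility period [t1, t2]
    treatment: timedelta    # N, the duration of treatment for each user

    @property
    def t1(self) -> datetime:
        """Beginning of the eligibility period."""
        return self.t0 + self.buffer

    @property
    def t2(self) -> datetime:
        """End of the eligibility period."""
        return self.t1 + self.eligibility

    @property
    def t4(self) -> datetime:
        """End of the experiment: t2 + N."""
        return self.t2 + self.treatment

    def is_eligible(self, registered: datetime) -> bool:
        """True if the registration time tR falls within [t1, t2]."""
        return self.t1 <= registered <= self.t2

    def treatment_end(self, registered: datetime) -> datetime:
        """End of treatment for a user who registered at tR: tR + N."""
        return registered + self.treatment


# Parameters reported for PEF-1 later on this page.
pef1 = ExperimentWindow(
    t0=datetime(2012, 7, 30, 19, 0),
    buffer=timedelta(hours=3),
    eligibility=timedelta(days=7),
    treatment=timedelta(days=7),
)
```

With the PEF-1 parameters, `pef1.t1` is 2012-07-30 22:00 and `pef1.t2` is 2012-08-06 22:00, matching the timeline reported for that iteration, and `pef1.t4` works out to 2012-08-13 22:00.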

Sampling

- we will run an eligibility check on all new users by checking their account registration against the eligibility period;

- eligible users will then be assigned to a given experimental condition or to a control group via a hashing function applied to their user ID;

- a predefined eligibility period combined with a deterministic bucketing function based on user IDs will allow us to establish at any time to what experimental condition a given user was exposed without the need of storing additional data.

- Note: accounts will all be newly-registered on English Wikipedia, but may be registering automatically through the Single User Login system. Post-collection filtering for accounts that have edited on other Wikimedia projects will likely be a good step toward cleaning up the data.
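
The eligibility check and deterministic bucketing described above can be sketched as follows. This page does not say which hashing function was used, so SHA-256 is an assumption here, as are the function names `bucket_for` and `assign`.

```python
import hashlib
from datetime import datetime
from typing import Optional, Sequence


def bucket_for(user_id: int, conditions: Sequence[str]) -> str:
    """Map a user ID to one condition, deterministically.

    SHA-256 is an assumed choice; any stable hash of the user ID would do.
    """
    digest = hashlib.sha256(str(user_id).encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(conditions)
    return conditions[index]


def assign(user_id: int, registered: datetime, start: datetime,
           end: datetime, conditions: Sequence[str]) -> Optional[str]:
    """Return the user's condition, or None if registration falls
    outside the eligibility period [start, end]."""
    if not (start <= registered <= end):
        return None
    return bucket_for(user_id, conditions)
```

Because the result depends only on the user ID, the registration time, and the fixed eligibility period, the condition a user was exposed to can be recomputed at any time without storing the assignment.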

Data collection

- we will rely on data collected via the MediaWiki DB (revision and user tables) and the clicktracking extension;

- we will use the clicktracking extension to store events served for successfully completed edits. The event count in the log (unsampled) should match the number of revisions originating from users in the corresponding bucket. We will not capture any impression or click data.
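
The consistency check between logged events and revisions could look like the sketch below. It assumes each revision and each logged event has already been labeled with its user's bucket; the function name and input shape are hypothetical and do not reflect the actual schema of the MediaWiki tables or the clicktracking log.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def count_mismatches(revision_buckets: Iterable[str],
                     event_buckets: Iterable[str]) -> Dict[str, Tuple[int, int]]:
    """Compare per-bucket revision counts with per-bucket log event counts.

    Each input yields one bucket label per revision or per logged event.
    Returns {bucket: (revision_count, event_count)} for buckets that disagree;
    an empty dict means the log and the revision data match.
    """
    revisions = Counter(revision_buckets)
    events = Counter(event_buckets)
    return {
        bucket: (revisions[bucket], events[bucket])
        for bucket in sorted(set(revisions) | set(events))
        if revisions[bucket] != events[bucket]
    }
```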

Iterations

PEF-0 (Dry Run)

Methods

This test was activated on 2012-07-19 and re-deployed on 2012-07-23. The goal was to dry-run the code in production, with no visible change to the user, to assess whether we can:

- effectively bucket users

- activate experiments for eligible users based on registration time or other user data

- serve different experimental code by condition

- accurately match revision and log event data

- verify that bucketing is working per spec.

We used the following parameters for the activation/eligibility window in this iteration:

| Parameter | Value |
|---|---|
| [t0,t1] | 3 hours |
| [t1,t2] | 2 days |
| N | 1 day |
| [t0,t4] | 4 days |

The dry run gave us the following samples for the purpose of the data integrity tests:

- 8K eligible participants

- 2.6K participants for each condition (33% of the eligible population)

- 650 active editors (with at least 1 edit) per condition

- 2K revisions

PEF-1: Confirmation vs. Gratitude

Methods

The first iteration of this experiment tested the effect of a simple confirmation message or a "thank-you" message on a sample of newly registered users.

We randomly assigned all eligible participants to one of the following three conditions:

- confirmation group: participants in this condition received the message "Your edit was saved." after each successfully completed edit for the duration of the treatment.

- gratitude group: participants in this condition received the message "Thank you for your edit!" after each successfully completed edit for the duration of the treatment.

- control group: participants in the control group did not receive any message upon completion of an edit.

The treatments were delivered in all namespaces and for all edits, except for page creation edits. We used the following parameters to determine the eligibility window and duration of the treatment:

| Parameter | Value |
|---|---|
| [t0,t1] | 3 hours |
| [t1,t2] | 7 days |
| N | 7 days |
| [t0,t4] | 15 days |

This resulted in the following timeline (all times UTC):

| Event | Time (UTC) |
|---|---|
| deployment | 2012-07-30 19:00:00 |
| eligibility starts (deployment +3h) | 2012-07-30 22:00:00 |
| first edit event logged | 2012-07-30 22:03:09 |
| eligibility ends (eligibility +7d) | 2012-08-06 22:00:00 |
| last edit event logged | 2012-08-13 06:13:26 |

Results

The results from the first iteration of R:PEF indicate that post-edit feedback produces a significant increase in the volume of newbie contributions without negative side effects on quality. Read a summary of the results on the Wikimedia blog, read the full report or check out the PostEdit extension that we are using to productize this UI change on the English Wikipedia.

- Screenshot of the experimental message

PEF-2: Historical Feedback

Methods

The second iteration of the experiment will test delivering a message to newly registered users only when they reach certain milestones in their edit count.

We randomly assign all eligible participants to one of the following two conditions:

- historical feedback group: participants in this condition receive the message "Success! You made your Nth edit." after successfully completing their 1st, 5th, 10th, 25th, 50th and 100th edit.

- control group: participants in the control group do not receive any message upon completion of an edit.
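
The milestone rule for the historical feedback group can be sketched as below. The function names are hypothetical, and rendering "Nth" as an English ordinal ("1st", "5th") is an assumption about how the message was filled in. The sketch takes the running edit count as given and does not decide whether page creation edits count toward it.

```python
from typing import Optional

# Milestones listed for the historical feedback group.
MILESTONES = frozenset({1, 5, 10, 25, 50, 100})


def ordinal(n: int) -> str:
    """Format a positive integer as an English ordinal, e.g. 1 -> '1st'."""
    if 10 <= n % 100 <= 20:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


def milestone_message(edit_count: int,
                      is_page_creation: bool = False) -> Optional[str]:
    """Return the feedback message for this edit, or None if none is shown.

    Page creation edits never trigger a message, per the exception stated
    for this iteration.
    """
    if is_page_creation or edit_count not in MILESTONES:
        return None
    return f"Success! You made your {ordinal(edit_count)} edit."
```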

The treatments are delivered in all editable namespaces and for all edits, except for page creation edits. We used the following parameters to determine the eligibility window and duration of the treatment (log data will be included once the experiment is completed):

| Parameter | Value |
|---|---|
| [t0,t1] | 3 hours |
| [t1,t2] | 7 days |
| N | 14 days |
| [t0,t4] | 21 days |

This is the resulting timeline for the experiment (all times UTC):

| Event | Time (UTC) |
|---|---|
| deployment | 2012-09-20 02:00:00 |
| eligibility starts (deployment +3h) | 2012-09-20 05:00:00 |
| first edit event logged | 2012-09-20 05:03:14 |
| eligibility ends (eligibility +7d) | 2012-09-27 05:00:00 |
| last edit event logged | 2012-10-11 01:00:53 |

- Screenshots of experimental messages

Results

See Research:Post-edit feedback/PEF-2.

Feature requirements and user experience

See the documentation on MediaWiki.org

See also

- Research:Editor milestones - thanking editors (with a barnstar) who reach a 1,000 edit milestone

References

1. Cheshire, C., & Antin, J. (2008). The Social Psychological Effects of Feedback on the Production of Internet Information Pools. Journal of Computer-Mediated Communication, 13(3), 705-727.

2. Mazarakis, A., & van Dinther, C. (2011). Feedback Mechanisms and their Impact on Motivation to Contribute to Wikis in Higher Education. WikiSym '11.