Research:WikiCredit

This page documents a research project in progress.

Information may be incomplete and change as the project progresses.

Please contact the project lead before formally citing or reusing results from this page.

A commonly cited reason why academics and other subject-matter experts don't edit Wikipedia is that they can't claim credit for their work. While contributing information to Wikipedia is arguably a high-impact activity given its massive readership, that impact is not easy to claim.

Recent advances in tracking authorship in Wikipedia[1] and past research on strategies for calculating the value individual editors add to Wikipedia[2] now make it possible to let editors claim credit for their direct contributions to articles at a granular level.

But should we? Research on volunteer motivation suggests that offering external incentives for intrinsically motivated actions undermines intrinsic motivation. Further, poor measures of what constitutes value could encourage disruptive behavior in Wikipedia as editors pursue more credit.

Value-added formalization

Based on insights from previous work[2], we will formalize value added to Wikipedia as a function of the productivity of an editor and the importance of their work.

$$\text{Value} = \text{Productivity} \times \text{Importance}$$

While previous work discusses measures of value added, no standard measures exist. To get a system up and running while also exploring the potential problem space, we will implement a series of metric design iterations. The first iteration will favor simple measures; future iterations will progressively experiment with more nuance and complexity.
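As a rough illustration, suppose the first-iteration measures described below are plugged into this formula: productivity as a count of minimally persisting tokens and importance as the natural log of monthly page views. With hypothetical numbers, the same contribution of 120 persisting tokens on a page with 10,000 monthly views (A) and on a page with 1,000,000 monthly views (B) gives:

$$
\begin{aligned}
\text{Value}_{A} &= 120 \times \ln(10^{4}) \approx 120 \times 9.21 \approx 1105 \\
\text{Value}_{B} &= 120 \times \ln(10^{6}) \approx 120 \times 13.82 \approx 1658
\end{aligned}
$$

A hundredfold difference in readership yields only a 1.5-fold difference in value, which is the dampening effect of taking the logarithm of an importance measure.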

Measuring productivity

Iteration 1. For this iteration, we will use the count of minimally persisting tokens added as a measure of productivity. We choose thresholds r and t for the minimum number of revisions and seconds, respectively, that a token must survive in order to be counted as minimally persisting.

```python
def persisting_revisions(token):
    """Returns the number of revisions a token persists."""
    raise NotImplementedError  # to be supplied by the persistence tracker

def persisting_seconds(token):
    """Returns the number of seconds a token persists."""
    raise NotImplementedError  # to be supplied by the persistence tracker

# tokens_added: tokens added in a revision
# r: minimum number of revisions a token must persist to be considered "good"
# t: minimum number of seconds a token must persist to be considered "good"

def minimally_persisting_tokens(tokens_added, r, t):
    """Returns the count of added tokens that persist for at least r revisions and t seconds."""
    return sum(
        persisting_revisions(token) >= r and
        persisting_seconds(token) >= t
        for token in tokens_added
    )
```
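To make the two thresholds concrete, here is a self-contained sketch in which each token's persistence statistics are already known. The TokenStats record, the count_minimally_persisting name, and the threshold values (r = 5 revisions, t = 2 days) are hypothetical, chosen only for illustration.

```python
from typing import NamedTuple

class TokenStats(NamedTuple):
    """Hypothetical per-token persistence statistics."""
    text: str
    revisions: int  # revisions the token survived
    seconds: int    # seconds the token survived

def count_minimally_persisting(tokens_added, r, t):
    """Counts tokens that survive at least r revisions AND at least t seconds."""
    return sum(tok.revisions >= r and tok.seconds >= t for tok in tokens_added)

TWO_DAYS = 2 * 24 * 60 * 60  # 172800 seconds

tokens_added = [
    TokenStats("Tokyo", revisions=12, seconds=900_000),   # passes both thresholds
    TokenStats("capital", revisions=3, seconds=900_000),  # too few revisions
    TokenStats("largest", revisions=20, seconds=3_600),   # removed within an hour
    TokenStats("city", revisions=7, seconds=200_000),     # passes both thresholds
]

print(count_minimally_persisting(tokens_added, r=5, t=TWO_DAYS))  # 2
```

Because both conditions must hold, a token that survives many revisions during a burst of rapid edits but is removed within the hour does not count, and neither does a long-lived token on a page that has seen only a few revisions since it was added.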

Iteration 2. See the discussion: Second iteration of productivity measurement.

For this iteration, we'll explore edit type classification and strategies for assigning weights to specific edit types.

```python
# tokens_added: tokens added in a revision
# r: minimum number of revisions a token must persist to be considered "good"
# t: minimum number of seconds a token must persist to be considered "good"
# base: a base score for the type of edit
# weight: a per-token weighting for the type of edit

def weighted_minimally_persisting_tokens(tokens_added, r, t, base, weight):
    """Weighted productivity score for a revision of a given edit type."""
    persisting_tokens = minimally_persisting_tokens(tokens_added, r, t)
    # Fraction of added tokens that persisted; guard against revisions that add no tokens.
    persist_rate = persisting_tokens / (len(tokens_added) or 1)
    return base * persist_rate + persisting_tokens * weight
```
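As a worked sketch of the weighted score, the function below applies the same arithmetic to precomputed token counts. The edit types and (base, weight) pairs in EDIT_TYPE_PARAMS are hypothetical placeholders; the actual classification scheme and weights are still under discussion.

```python
# Hypothetical (base, weight) pairs per edit type; illustrative values only.
EDIT_TYPE_PARAMS = {
    "content_addition": (1.0, 1.0),
    "copyedit": (5.0, 0.5),
}

def weighted_score(n_added, n_persisting, base, weight):
    """Same formula as weighted_minimally_persisting_tokens, from token counts."""
    persist_rate = n_persisting / (n_added or 1)  # avoid dividing by zero
    return base * persist_rate + n_persisting * weight

# A copyedit adding 10 tokens, 8 of which persist: 5.0 * 0.8 + 8 * 0.5
print(weighted_score(10, 8, *EDIT_TYPE_PARAMS["copyedit"]))  # 8.0
# A content addition of 200 tokens, 150 of which persist: 1.0 * 0.75 + 150 * 1.0
print(weighted_score(200, 150, *EDIT_TYPE_PARAMS["content_addition"]))  # 150.75
```

Under these illustrative numbers, base rewards a high persistence rate regardless of edit size, so small edits such as copyedits can still earn credit, while weight makes the score grow with the volume of persisting content.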

Further iterations. See the discussion: Ideas on productivity measurement.

Measuring importance

Iteration 1. In the first iteration, we'll compare two measures: monthly page views and incoming wikilinks.

```python
from math import log

# page_id: the revision's page identifier; monthly_page_views() stands in for the page view data source
def log_monthly_page_views(page_id):
    return log(monthly_page_views(page_id))
```

```sql
/* Example: the article "Japan" */
SET @page_id = (SELECT page_id FROM page WHERE page_namespace = 0 AND page_title = 'Japan');

/* Results in the logged count of unique pages that link to the page being edited or a redirect thereof.
   LOG() is the natural logarithm in MySQL and returns NULL when there are no inlinks. */
SELECT
  LOG(COUNT(DISTINCT linking_page_id)) AS log_distinct_inlinks
FROM (
  SELECT pl_from AS linking_page_id /* Direct links */
  FROM pagelinks
  INNER JOIN page ON
    pl_namespace = page_namespace AND
    pl_title = page_title
  WHERE page_id = @page_id

  UNION ALL

  SELECT link.pl_from AS linking_page_id /* Links to a redirect of the page */
  FROM pagelinks redirect_link
  INNER JOIN page ON
    redirect_link.pl_namespace = page.page_namespace AND
    redirect_link.pl_title = page.page_title
  INNER JOIN page redirect_page ON /* redirect pages that point at the page */
    redirect_link.pl_from = redirect_page.page_id AND
    redirect_page.page_is_redirect
  INNER JOIN pagelinks link ON
    link.pl_namespace = redirect_page.page_namespace AND
    link.pl_title = redirect_page.page_title
  WHERE page.page_id = @page_id
) AS direct_and_indirect_page_links;
```
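The redirect handling in the inlink query can be hard to follow in SQL. The sketch below expresses the same counting logic over in-memory data; the `links` set of (source page, linked title) pairs and the `redirects` mapping from redirect title to target title are hypothetical stand-ins for the pagelinks and page tables. Like the query, it follows only single-hop redirects and, like MySQL's LOG(), returns no value (None) when there are no inlinks.

```python
import math

def log_distinct_inlinks(target, links, redirects):
    """Natural log of the number of distinct pages linking to `target`
    directly or through a redirect; None if there are no such pages.

    links: set of (source_page, linked_title) pairs
    redirects: dict mapping a redirect title to the title it redirects to
    """
    # Titles that resolve to the target: the target itself plus its redirects.
    aliases = {target} | {title for title, dest in redirects.items() if dest == target}
    linking_pages = {src for src, title in links if title in aliases}
    return math.log(len(linking_pages)) if linking_pages else None

links = {
    ("Asia", "Japan"),     # direct link
    ("Sushi", "Nippon"),   # link through a redirect
    ("Asia", "Nippon"),    # same source page again: counted once
    ("Nippon", "Japan"),   # the redirect page's own link to its target
    ("Kyoto", "Tokyo"),    # unrelated link
}
redirects = {"Nippon": "Japan"}
print(log_distinct_inlinks("Japan", links, redirects))  # math.log(3), about 1.0986
```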

Further iterations. See the discussion: Ideas on importance measurement.

Socio-technical effects

By ascribing value to editors' work, WikiCredit could change the socio-technical functioning of Wikipedia in dramatic ways. We seek to minimize negative repercussions through open consideration of the system's design and through experimentation.

Social & behavioral implications

Conflating personal effort & group value. By clearly tying individual effort (value added by a user) to group outcomes (a high-quality encyclopedia), WikiCredit could make editors see their individual actions as more instrumental to the group's success. The Collective Effort Model (CEM) predicts that such a tighter coupling reduces social loafing and therefore increases productivity.

Undermining personal motivation. An undermining effect or "overjustification effect" occurs when an expected external incentive such as money or prizes decreases a person's intrinsic motivation to perform a task. By providing an external measure that can be used as a means for attaining something else, we might undermine editors' innate motivations.

Privacy, ranking & competition. While competition seems to motivate men, it is more likely to demotivate women[3]. This is particularly concerning given Wikipedia's well-documented gender gap[4] and general retention issues[5]. An effective system should take full advantage of editors who are motivated by competition and ranked lists without requiring editors who are demotivated by competition to compete or be ranked.

Gaming and other deviant behavior

Just as search engines have been attacked by agents trying to exploit their ranking algorithms for their own benefit, we might see a productivity/value-based measurement algorithm gamed. Robust strategies for mitigating the detrimental effects will need to be implemented and maintained.

Since deviant behavior can usually be detected only after the fact, changes to the algorithm that discourage such behavior will have to apply retroactively. Such countermeasures will sometimes affect the scores of non-deviant editors as well. A clear contract between consumers of productivity/value-based measurements and the maintainers of WikiCredit must be negotiated and made explicit.

System components


(Magical) Difference Engine

Revision difference detection. See http://pythonhosted.org/deltas for ongoing work on anti-gaming algorithms. See https://github.com/halfak/Difference-Engine for work on the server component.

- Output format

```json
{
  "id": 34567890,
  "timestamp": 1254567890,
  "user": {
    "id": 456789,
    "text": "EpochFail"
  },
  "page_id": 2314,
  "delta": {
    "bytes": 10,
    "chars": 10,
    "operations": [
      {"+": [0, 0, 0, 14, ["This", " ", "is", " ", "the", " ", "first",
                          " ", "bit", " ", "of", " ", "content", "."]]},
      {"=": [0, 10, 14, 24]},
      {"-": [10, 18, 24, 24, ["This", " ", "is", " ", "getting", " ",
                             "removed", "."]]}
    ]
  }
}
```
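A minimal sketch of how a consumer might interpret the operations list, assuming each operation's first four integers are start and end offsets into the old and new token sequences (a1, a2, b1, b2), and that "+" and "-" operations carry the inserted or removed tokens. apply_operations is a hypothetical helper, not part of the deltas library or the Difference Engine API. The sample data uses hypothetical old tokens; since the removal spans eight tokens, its old-revision offsets run from 10 to 18.

```python
def apply_operations(old_tokens, operations):
    """Rebuilds the new revision's token list from the old one.

    Assumes each operation is {"+"|"="|"-": [a1, a2, b1, b2, (tokens)]},
    where a1:a2 indexes old_tokens and b1:b2 indexes the new token list.
    """
    new_tokens = []
    for operation in operations:
        kind, args = next(iter(operation.items()))
        a1, a2, b1, b2 = args[:4]
        if kind == "+":
            new_tokens.extend(args[4])  # inserted tokens are carried in the operation
        elif kind == "=":
            new_tokens.extend(old_tokens[a1:a2])  # unchanged tokens come from the old revision
        elif kind == "-":
            if old_tokens[a1:a2] != args[4]:  # removed tokens must match the old revision
                raise ValueError(f"removed tokens do not match old_tokens[{a1}:{a2}]")
        else:
            raise ValueError(f"unknown operation {kind!r}")
        if len(new_tokens) != b2:  # each operation should end at offset b2 of the new revision
            raise ValueError(f"offset mismatch after {kind!r} operation")
    return new_tokens

kept = ["Some", " ", "older", " ", "text", " ", "stays", " ", "here", "."]
removed = ["This", " ", "is", " ", "getting", " ", "removed", "."]
added = ["This", " ", "is", " ", "the", " ", "first", " ", "bit", " ", "of", " ", "content", "."]
operations = [
    {"+": [0, 0, 0, 14, added]},
    {"=": [0, 10, 14, 24]},
    {"-": [10, 18, 24, 24, removed]},
]
print("".join(apply_operations(kept + removed, operations)))
# This is the first bit of content.Some older text stays here.
```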

Persistence tracker

Content authorship tracking and persistence statistics.

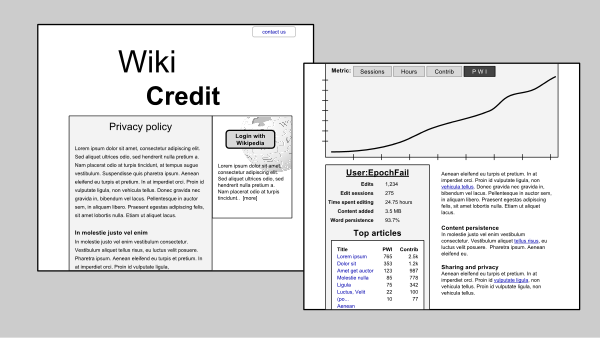
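As a simplified illustration of persistence tracking, the sketch below follows tokens across a chronological series of revisions, using Python's difflib.SequenceMatcher to align each revision's tokens with the previous revision's. It is not WikiWho[1] or the deltas library: it only compares consecutive revisions, so content that is removed and later restored counts as new. Token, track_persistence, seconds_persisted, and the input layout are hypothetical.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List, Optional, Tuple

@dataclass
class Token:
    text: str
    rev_id: int                       # revision that added the token
    timestamp: int                    # Unix time of that revision
    revisions: int = 0                # later revisions the token survived
    removed_at: Optional[int] = None  # Unix time of the removing revision

def track_persistence(revisions: List[Tuple[int, int, List[str]]]) -> List[Token]:
    """revisions: chronological (rev_id, timestamp, tokens) tuples.
    Returns every token ever added, with persistence statistics filled in."""
    current: List[Token] = []
    all_tokens: List[Token] = []
    for rev_id, timestamp, tokens in revisions:
        matcher = SequenceMatcher(None, [t.text for t in current], tokens, autojunk=False)
        next_tokens: List[Token] = []
        for tag, i1, i2, j1, j2 in matcher.get_opcodes():
            if tag == "equal":
                for token in current[i1:i2]:
                    token.revisions += 1  # survived into this revision
                next_tokens.extend(current[i1:i2])
                continue
            for token in current[i1:i2]:  # "delete"/"replace": old tokens removed
                token.removed_at = timestamp
            for text in tokens[j1:j2]:  # "insert"/"replace": new tokens added
                token = Token(text, rev_id, timestamp)
                next_tokens.append(token)
                all_tokens.append(token)
        current = next_tokens
    return all_tokens

def seconds_persisted(token: Token, now: int) -> int:
    """Seconds from the token's addition until its removal, or until `now`."""
    end = token.removed_at if token.removed_at is not None else now
    return end - token.timestamp

history = [
    (1, 100, ["a", "b", "c"]),
    (2, 200, ["a", "b", "c", "d"]),
    (3, 300, ["a", "c", "d"]),
]
for token in track_persistence(history):
    print(token.text, token.revisions, seconds_persisted(token, now=1000))
# a 2 900
# b 1 200
# c 2 900
# d 1 800
```

Per-token counts like these are what the thresholds r and t in the productivity measures would be applied to.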

WikiCredit: Personal wiki stats

Presentation and dissemination of productivity/value-added statistics.

References

1. Flöck, F., & Acosta, M. (2014, April). WikiWho: Precise and efficient attribution of authorship of revisioned content. In Proceedings of the 23rd International Conference on World Wide Web. ACM.
2. Priedhorsky, R., Chen, J., Lam, S. T. K., Panciera, K., Terveen, L., & Riedl, J. (2007, November). Creating, destroying, and restoring value in Wikipedia. In Proceedings of the 2007 International ACM Conference on Supporting Group Work (pp. 259-268). ACM.
3. Gneezy, U., Niederle, M., & Rustichini, A. (2003, August). Performance in competitive environments: Gender differences. Quarterly Journal of Economics (pp. 1049-1074).
4. Lam, S. T. K., Uduwage, A., Dong, Z., Sen, S., Musicant, D. R., Terveen, L., & Riedl, J. (2011, October). WP: clubhouse? An exploration of Wikipedia's gender imbalance. In Proceedings of the 7th International Symposium on Wikis and Open Collaboration (pp. 1-10). ACM.
5. Halfaker, A., Geiger, R. S., Morgan, J. T., & Riedl, J. (2012). The rise and decline of an open collaboration system: How Wikipedia's reaction to popularity is causing its decline. American Behavioral Scientist, 0002764212469365.