Usenetpedia

- The following is not yet ready for the New project process, and is not limited to what can be proposed by that means. This is a draft in progress.

- Note: a previous proposal to distribute Wikipedia via Usenet was made in 2005 at User:TomLord/distributed wikis. This page was created independently of (indeed in ignorance of) that proposal.

| This page in a nutshell: Wikipedia is declining due to the w:resource curse of its accumulated content -- specifically, the battle for control over what material is covered, with what point of view, in the current version of the site. We can avoid or respond to the future collapse of Wikipedia by merging MediaWiki markup with a substantially upgraded Usenet protocol to create a new method of distributed downloading that distributes the articles without central authority and serves a wider range of purposes. |

The problem


As described in a 2012 study, Wikipedia went quite abruptly from exponential growth of participation and coverage to a pattern of steady decline. The reason for this was the abrupt rise of "deletionism", specifically the reversion and deletion of content contributed in good faith by volunteers. Many began placing more of a focus on removing content that they disagreed with, while others pressed for policies such as WP:BLP that called for editors to use "editorial judgment" in deciding what information to suppress. Naturally this judgment passes steadily upward and away from the common contributor.

As anarchic communities tend to be very resistant to change, this change could not be accomplished merely by a wave of editing; rather, tools emerged by which editors could leave automated messages on the talk pages of new users, beginning with informative messages that automatically and rapidly escalated to threats of administrative action. In 2008 the ability to "roll back" edits was also greatly expanded.

While Wikipedia traditionally had claimed not to be censored, this is no longer very convincing. Even after years of sanctions, one of the most prolific editors, formerly one of its best admins, continues to be denied the chance to contribute to "biographies of living persons" because of an edit in which he related the star of a widely-known porn video to her real name, now used in Hollywood. This is despite the fact that this is a top hit in any Google search.[1] Explicit images have been removed from articles where they are encyclopedically appropriate, such as the successful and enduring removal, by spree killer Elliot Rodger in 2013, of an image from Fellatio.[2]

The control of content is epitomized by the rise of paid editing on behalf of PR corporations, whose frequency is unknown. The recent Terms of use/Paid contributions amendment nominally provides some disclosure from paid editors, but also seems to largely legitimize their activities while permitting a wide range of means of disclosure that frustrates any single automated tool from detecting them without human intervention.

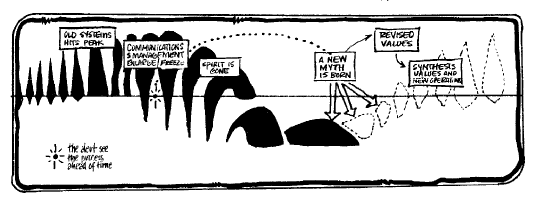

Conceptually, Wikipedia's position is much like that of a weak country like the Congo or South Sudan, whose mineral wealth is a "resource curse" that causes only harm to its citizens as those who rob it assert their power. There is little unity among editors, and powerful external forces desire control of our resources. When the Wikipedia community began, it had very little accumulated content, much less traffic, and a large community of volunteers whose sense of fairness could hold off these influences. But as the total amount of accumulated content has increased, Wikipedia has become like an overloaded cargo vessel that is unsteady in the water. As editor involvement continues to decline, the forces keeping it on an even keel grow ever weaker, until eventually it must capsize. This approaches as aggregators such as Google begin to present snippets of content that keep readers from coming to the site (in part helpfully compiled by Wikidata, which places a far higher priority on APIs for external use than, say, making it possible to access its data from inside a Wikipedia article or script). It is difficult to predict when the end will come, but it may be quite abrupt as participation, readership, and revenue all collapse at once.

The vision


Wikipedia has been the realization of a beautiful myth, a myth in which the people of the world would work together for the common knowledge. This myth has had the strength but now also the problems of many a communist society. But instead of giving up, going back to a world where your work is the property of some company that sells some people the right to know, can we move onward and upward to a still greater freedom?

Imagine that, as in Star Trek: The Motion Picture, we could fuse our foundering little probe, Wikipedia, with a whole world of liberated machines, allowing the hybrid entity to go on with a new sense of purpose and collect all the knowledge of the cosmos. But where to find the needed planet of machines? Well, that's where Usenet comes in. Though Usenet is frequently derided for spam that floods it with a volume of literally terabytes daily... that volume is comparable to the entire Wikipedia database! And it is a volume of content that is already distributed all over the world, near to the reader and with no central server to censor. These are the broad shoulders on which a new kind of Wikipedia can stand.

The goal of the Usenetpedia should be to make it so that any CC-licensed + Fair Use encyclopedia - whether Wikipedia, Scholarpedia, or Uncyclopedia - can be distributed as a series of edits (technically implemented as Usenet posts) which are indexed by other files (distributed as Usenet posts) to perform all the standard functions of a Mediawiki encyclopedia. Apart from some aspects considered below, the visual interface for the user should look and feel very similar to that of our present Wikipedia. Additionally, it should be possible to choose whose index files you wish to follow, so that there can be more than one top-level perspective about what a given encyclopedia looks like, i.e. whose version is "current" (e.g. whether a given edit was reverted or not). The goal is to fracture the Wikipedia power structure and send it across the web, leaving decisions of what content to accept to individuals and decisions about page and revision deletion/suppression to the myriad NNTP hosts. The result should be an encyclopedia far more robustly protected from censorship, and with a somewhat improved average potential to resist PR lobbying.

The effect on Usenet should be positive. Encyclopedia edits don't have to be resent every day, but judging by the numbers it should be possible to keep every history revision available somewhere for at least an indirect retrieval. If some smart features for reader-controlled decisions about archiving and retrieval of posts from remote hosts on request can be implemented, it should be possible to make a fast, stable, and complete interface that rivals what Wikipedia does now. But by providing Wikipedia via Usenet, we give that sometimes embattled mechanism a sense of purpose that it sometimes seems to lack. Usenet has proved remarkably resilient against legal threats, but with so many pundits accusing it of being nothing legitimate, it would be a great thing for it to be seen providing a resurgent Wikipedia freed from edit wars and inquisitions. So this is something that Usenet hosts should consider putting real effort into making happen, hopefully even to the point of making the Wiki interface available for free to all readers.

Technical implementation

At its core, Usenet is implemented over the simple Network News Transfer Protocol (NNTP), in which a number of headers control the processing of the body of each post. For Usenetpedia, it should be possible to distribute postings (edits, history versions) in compliance with standard NNTP[3], so that even if a given NNTP site is not itself intended for wiki browsing, it can still relay the content between sites that are. That said, support for full-article UTF-8 needs to be made as robust as possible. Some headers include:

- Newsgroups:. The Usenet structure of "newsgroups" is a basic part of how Usenet posts are grouped on the server (e.g. with the GROUP command), but it is not the way that wiki edits are generally meant to be read. While it is tempting to give each article a newsgroup (wiki.wikimedia-meta.usenetpedia for this entry), a range of technical limitations, such as the asynchronous handling of contested moves, make this undesirable. Therefore, it is proposed that all posts from a particular wiki or major subdivision thereof are in a single newsgroup, allowing site operators to permit or refuse posts from it as a whole but without enmeshing article naming decisions with the basic Usenet interface. The newsgroup name might identify only the encyclopedia as a whole (e.g. Newsgroups: wiki.wikipedia or Newsgroups: wiki.encyclopedia-dramatica) or be subdivided somewhat further as needed for administrative or technical reasons (Newsgroups: wiki.wikipedia.template-talk). Retrieving recent posts for a newsgroup would be a rough approximation of Special:RecentChanges. For wiki content (as for so much else on Usenet) the ability to cross-post to more than one newsgroup is at best highly problematic, and disabling it would probably be desirable.

- In-Reply-To: In Usenet threads, this identifies the post a user is responding to. In a wiki it should work the same way, to identify the history version that has been edited to produce the current article. This is particularly important to keep track of because people editing Usenetpedia sites may come into edit conflict with one another over a period of an hour or more as posts propagate from server to server, and because Usenetpedia should be able to accommodate a wider variety of present versions than a single wiki. The result is that the article history on Usenetpedia can (at least optionally) be represented like a GitHub timeline with forks and merges rather than a purely linear list of edits in chronological order.

- References: The NNTP standard appears to leave considerable latitude for the implementation of "references" in a thread,[4] so this field can be co-opted to contain the list of all the articles which predictably need to be transcluded to complete the current post as a wiki page. This includes any new mechanism of transclusion meant to reduce the traffic needed to make minor edits to pages, but excludes some pages which are transcluded in response to data (in parser functions and Lua modules, for example).

Additional extensions for the wiki could include:

- X-Article. Holds the name of the article (e.g. X-Article: Usenetpedia). Unfortunately, there is no way in a standard NNTP server to directly index articles by these names; however, it is possible for other posts to provide indexes of past revisions.

- X-Merge-From. This is meant to facilitate advanced mechanisms of edit conflict resolution. When two versions are merged, the more recent is tagged In-Reply-To, and the older version is tagged with X-Merge-From. When the article timeline (history) is displayed, the two should be displayed with a graphic arrow to indicate the merge in content.

- X-simple-transclusion. This should indicate which posts are sources for simply transcluded text (see below). The current post can be put back together from these upon receipt if it is only to be used for reading. Or this data might be tracked to indicate that the source post should not be deleted from the local server until the latest post using it is expired.
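As a rough illustration of how the headers above might fit together, the following sketch builds one wiki edit as a Usenet-style post using Python's standard `email` package. The function name `build_edit_post`, the use of `Subject` to repeat the article name, the `example.net` domain, and the space-separated format of `References` are all assumptions made for this example; the proposal itself does not fix any of them.

```python
from email.message import EmailMessage
from email.utils import formatdate, make_msgid


def build_edit_post(article, wikitext, author, newsgroup,
                    parent_id=None, merge_from=None, transcluded=()):
    """Build one wiki edit as a Usenet-style post (hypothetical helper)."""
    msg = EmailMessage()
    msg["From"] = author
    msg["Newsgroups"] = newsgroup            # one group per wiki, as proposed
    msg["Subject"] = article                 # assumption: subject repeats the title
    msg["Date"] = formatdate(usegmt=True)
    msg["Message-ID"] = make_msgid(domain="example.net")  # placeholder domain
    if parent_id:
        # The history version that was edited to produce this one.
        msg["In-Reply-To"] = parent_id
    if transcluded:
        # Co-opted to list posts that must be transcluded to render the page.
        msg["References"] = " ".join(transcluded)
    msg["X-Article"] = article
    if merge_from:
        # The older of two merged versions.
        msg["X-Merge-From"] = merge_from
    # The body is the wikitext itself, sent as UTF-8.
    msg.set_content(wikitext, charset="utf-8")
    return msg


post = build_edit_post(
    article="Usenetpedia",
    wikitext="A draft proposal.",
    author="Wnt <wnt@example.net>",
    newsgroup="wiki.wikimedia-meta",
    parent_id="<1234@example.net>",
)
print(post.as_string())
```

Because only ordinary headers and a text body are involved, a relay that knows nothing about wikis could in principle pass such a post along unchanged.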

Mediawiki expansions

The Usenetpedia version of Mediawiki should have additional capabilities to facilitate the new method of distribution.

Altered edit conflict resolution

The current Mediawiki software attempts to force users to hand-resolve all edit conflicts before submitting an edit, but this does not work on Usenet, where it can take hours for an edit to propagate. Instead, edit conflicts are allowed to enter the article timeline. When the user presses the tab to edit an article, the existing revisions (within some time or article limit configurable at the individual server, and in any case going back no further than the point where the user's trusted authorities last weighed in on a preferred version) should be compared for edit conflicts. The history of the conflicting edits, with branches and merges based on In-Reply-To and X-Merge-From fields, should be displayed. Ideally the editor should start up with the maximum amount of text salvageable from all edits, with the differing portions displayed in color-coded segments that can be clicked on and off (removing the text and allowing display of conflicting alternates) from the graphical history. The user should be able to edit the text in any of these states. In this way, since it is not possible for users to solve their own edit conflicts, they are asked to solve those of the preceding users instead. While this imposes some unavoidable inconvenience (and users often will not choose very carefully), submitting the conflicts for multiple rounds of third opinions will hopefully have some beneficial effects.
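One way to detect which revisions are in conflict is to treat the posts as a graph and look for its "heads": revisions that no later post builds on, either through In-Reply-To or through X-Merge-From. The sketch below is a minimal illustration only; the function name `find_heads` and the representation of posts as a dictionary of header dictionaries keyed by Message-ID are assumptions, not part of the proposal.

```python
def find_heads(posts):
    """Return the Message-IDs of revisions that no other post builds on.

    `posts` maps each Message-ID to a dict of that post's headers
    (a hypothetical in-memory form of the article's revisions).
    More than one head means the timeline currently has unmerged forks.
    """
    superseded = set()
    for headers in posts.values():
        for field in ("In-Reply-To", "X-Merge-From"):
            parent = headers.get(field)
            if parent:
                superseded.add(parent)
    return sorted(mid for mid in posts if mid not in superseded)


# Two users edit the same revision before seeing each other's posts.
history = {
    "<a@example.net>": {},
    "<b@example.net>": {"In-Reply-To": "<a@example.net>"},
    "<c@example.net>": {"In-Reply-To": "<a@example.net>"},
}
print(find_heads(history))  # two heads: an unresolved conflict

# A third user merges them: the newer version goes in In-Reply-To,
# the older one in X-Merge-From.
history["<d@example.net>"] = {
    "In-Reply-To": "<c@example.net>",
    "X-Merge-From": "<b@example.net>",
}
print(find_heads(history))  # one head: the conflict is resolved
```

An editor interface could offer the conflict view only when more than one head is found within the configured time or article limit.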

Simple transclusion within an edit history

In order to minimize the amount of network traffic, it would be desirable to allow an edit to cut-and-paste text from a preceding edit, without otherwise altering it, before the usual Mediawiki rules are applied to the assemblage. Thus if you reply to a thread in the middle of a 100kb talk page with "Me too", you should be able to generate a Usenet post whose body is

- {{simple:<1234@example.net> 1-53123}}

- :Me too. Wnt (talk) 22:10, 6 July 2014 (UTC)

- {{simple:<1234@example.net> 53124-}}
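A receiving server or client could reassemble the full text with a small substitution pass like the sketch below. It assumes that the two numbers are 1-indexed, inclusive character offsets into the source post's body and that an omitted end means "to the end of the post"; the proposal does not say whether offsets count characters or bytes. The names `expand_simple` and `fetch` are hypothetical.

```python
import re

# Matches {{simple:<message-id> START-END}} with an optional END.
SIMPLE = re.compile(r"\{\{simple:(<[^>]+>) (\d+)-(\d*)\}\}")


def expand_simple(body, fetch):
    """Replace each simple: directive with the text range it names.

    `fetch` is any callable that returns the body of a post given its
    Message-ID, e.g. a lookup in the local news spool.
    """
    def splice(match):
        source = fetch(match.group(1))
        start = int(match.group(2))
        end = int(match.group(3)) if match.group(3) else len(source)
        # Convert 1-indexed inclusive offsets to a Python slice.
        return source[start - 1:end]

    return SIMPLE.sub(splice, body)


spool = {"<1234@example.net>": "First comment.\nLater comment.\n"}
reply = (
    "{{simple:<1234@example.net> 1-15}}"
    ":Me too.\n"
    "{{simple:<1234@example.net> 16-}}"
)
print(expand_simple(reply, spool.__getitem__))
```

The expanded text is then handed to the normal Mediawiki parser, so templates and other markup inside the spliced ranges behave as usual.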

Another case for this occurs if you want to make a specialized encyclopedia based on the work of another. For example, a "Decentpedia" might distribute an article based on a Wikipedia article by cutting out and replacing certain words and pictures. In the event that someone wanted to run a Usenet node that contained Decentpedia but not Wikipedia, there would need to be a mechanism by which their server retrieved the missing post from another site and did the splicing; it might then distribute the expanded version to like-minded sites. For this purpose the newsgroup (project and possibly namespace) of the simple: transclusion could be specified when it differs from that of the post itself. It may also be desirable to have an additional header "X-substituted:" that lists the newsgroups of any substitutions that have been made by the server before further distribution of the post. Distribution of those expanded posts to servers that carry the X-substituted newsgroups should be avoided, so that most sites distribute only the lower-bandwidth version.
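The forwarding rule for expanded posts can be sketched as a simple set comparison. This is only one possible reading of the rule: it assumes a peer should receive the compact version whenever it carries every newsgroup listed in X-substituted, since it can then do the splicing itself. The function name `version_for_peer` and the list-of-strings inputs are hypothetical.

```python
def version_for_peer(peer_newsgroups, substituted_newsgroups):
    """Decide which form of a post to offer a peer (hypothetical rule).

    `substituted_newsgroups` is the parsed X-substituted list: the
    newsgroups whose posts were spliced in by this server.
    """
    if set(substituted_newsgroups) <= set(peer_newsgroups):
        # The peer carries every source group and can splice locally.
        return "compact"
    return "expanded"


print(version_for_peer(["wiki.decentpedia", "wiki.wikipedia"], ["wiki.wikipedia"]))
print(version_for_peer(["wiki.decentpedia"], ["wiki.wikipedia"]))
```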

Wiki server

The server for the actual page parsing should reside close to the user. Optionally, the user should be able to run his own parsing server, much as he would run a Usenet client, which would request and post appropriate Usenet messages to support the interface. (A somewhat similar idea was suggested at MediaWiki@Home.) However, this software is bulky, and it is wasteful to parse every page you read, rather than pulling up a cached version. Therefore, third party sites (often but not always those running the NNTP server) should be in a position to offer a large cache of pre-parsed wiki pages to users who simply browse the web, just as when we browse Wikipedia; and to support posting by web-based users in the same way as Wikipedia does.