User:Sumit.iitp/Research

Hi, I'm Sumit. I hold a bachelor's degree in Computer Science and Engineering from IIT Patna. I'm interested in Artificial Intelligence and NLP. What excites me most is applying mathematics and computer science algorithms to make sense of data.

This page tracks my research projects over time, both with the Wikimedia Foundation as part of the Scoring Platform team and elsewhere. I also maintain an online blog where I occasionally write about technical topics related to my work.

- Click on the image to explore the project

-

Automatic suggestion of topics to drafts

-

Undergrad research thesis

-

Wikidata PageBanner extension

-

Project to visually simulate graph algorithms using d3 with controls for simulations

-

Number of re-reviews that patrollers perform on Wikipedia

-

Number of people who performed new page patrol

-

Test timeout for ORES

-

AI@IITP

-

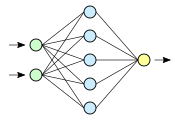

Question answering using CNN

-

Digital Image Processing to detect calcifications in mammograms

Wikimedia Foundation

I have been associated with the Scoring Platform team of the Wikimedia Foundation since May 2017, contributing to a number of projects that build and deploy machine learning models on Wikipedia to ease the work of editors. Some of them are:

Automatic suggestion of topics to new drafts

This is the project I'm currently driving under the guidance of User:Halfak_(WMF), who manages the Scoring Platform team. The project uses data from existing WikiProjects (en:Wikipedia:WikiProject) to gather topical information about them, and then uses this information to predict the topics of new drafts on Wikipedia.

- Published the machine-readable WikiProjects dataset at https://doi.org/10.6084/m9.figshare.5503819.v1 . The dataset was generated by parsing the WikiProjects directory with a handwritten recursive parser. The work log provides detailed steps on how the dataset was generated. GitHub commit - link

- Script to extract and generate the mid-level WikiProjects mapping - This change takes a set of fine-grained WikiProject topics and groups them into higher-level categories called mid-level categories. The idea for mid-level categories comes from the categorization given in the WikiProjects Directory. For example, WikiProject:Albums and WikiProject:Music both come under the broader category Performing Arts. The aim of this step is to reduce the classification problem to a few broad categories, which is both feasible and logical. Pull request - link

For a total of 93,000 observations (page_ids), the labeling operation took about 1:18:28 (hh:mm:ss).

- Script that, given a list of page IDs, extracts all WikiProject templates and mid-level categories associated with each page by querying the MediaWiki API. It also fetches the text associated with each page, producing the final dataset used to train the multi-label classifier. Pull request - link

- Published the labeled WikiProjects dataset, containing 94,500 observations that map talk-page IDs to mid-level categories - https://doi.org/10.6084/m9.figshare.5640526.v1

- Changes to the revscoring library to support either TF-IDF weighting for multi-label classification of WikiProjects data or a word-vector-based approach to classifying new drafts, whichever works better. The basic challenge is handling the scale of the data. - In progress

- The classification was done with random forest and gradient boosting classifiers using word2vec features. The binary-relevance model was used for multi-label classification and achieved good results.
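The mid-level grouping step in the list above can be illustrated with a minimal sketch. It is not the script from the pull request: the dictionary layout and the function names `invert_mapping` and `label_page` are assumptions made for illustration, and only the Performing Arts entry comes from the example on this page.

```python
from collections import defaultdict

# Hypothetical excerpt of a mid-level mapping. Only the Performing Arts
# entry is taken from the example on this page; the real mapping is
# derived from the WikiProjects Directory.
MID_LEVEL = {
    "Performing Arts": ["WikiProject:Albums", "WikiProject:Music"],
}


def invert_mapping(mid_level):
    """Map each fine-grained WikiProject to the mid-level categories listing it."""
    inverted = defaultdict(set)
    for category, projects in mid_level.items():
        for project in projects:
            inverted[project].add(category)
    return dict(inverted)


def label_page(wikiprojects, project_to_mid):
    """Return the sorted mid-level categories for the WikiProjects found on a page."""
    labels = set()
    for project in wikiprojects:
        # WikiProjects missing from the mapping contribute no label.
        labels |= project_to_mid.get(project, set())
    return sorted(labels)


PROJECT_TO_MID = invert_mapping(MID_LEVEL)
```

A page tagged with both WikiProject:Albums and WikiProject:Music would then receive the single label Performing Arts, which is how many fine-grained topics collapse into a few broad classes.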

PR curve with random forest classifier for select mid-level-categories:

ROC curve with random forest classifier for select mid-level-categories:
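The binary-relevance setup mentioned above trains one independent binary classifier per label. The sketch below shows only that decomposition, under loud assumptions: a toy nearest-centroid classifier stands in for the random forest and gradient boosting models, the two-dimensional vectors are made-up numbers standing in for word2vec features, and the label "Science" is hypothetical.

```python
def mean_vector(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


class CentroidBinaryClassifier:
    """Toy stand-in for a real base classifier such as a random forest."""

    def fit(self, X, y):
        # Assumes at least one positive and one negative example per label.
        self.pos = mean_vector([x for x, flag in zip(X, y) if flag])
        self.neg = mean_vector([x for x, flag in zip(X, y) if not flag])
        return self

    def predict(self, x):
        return squared_distance(x, self.pos) < squared_distance(x, self.neg)


class BinaryRelevance:
    """Train one independent binary classifier per label."""

    def __init__(self, labels):
        self.labels = list(labels)
        self.models = {}

    def fit(self, X, label_sets):
        for label in self.labels:
            # Reduce the multi-label target to a yes/no target for this label.
            y = [label in labels for labels in label_sets]
            self.models[label] = CentroidBinaryClassifier().fit(X, y)
        return self

    def predict(self, x):
        return sorted(l for l, m in self.models.items() if m.predict(x))


# Made-up feature vectors standing in for averaged word2vec embeddings.
X = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [0.8, 0.8]]
Y = [{"Performing Arts"}, {"Performing Arts"}, {"Science"}, {"Science"},
     {"Performing Arts", "Science"}]

model = BinaryRelevance(["Performing Arts", "Science"]).fit(X, Y)
```

Because each label gets its own model, a draft can receive zero, one, or several mid-level categories, at the cost of ignoring correlations between labels.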

Draftquality

This project determines the quality of new drafts on Wikipedia and classifies them into the categories spam, vandalism, attack, or OK. It aims to automate part of the editors' work under the speedy deletion criteria, which authorize editors to delete certain articles that violate Wikipedia policies without broader consensus. I worked on:

- Experiment with sentiment feature on draftquality modeling - Phabricator - phab:T167305, blog post

Editquality

Completed model generation for a few languages:

- Damaging and goodfaith models for Albanian Wikipedia - phab:T163009

- Damaging and goodfaith models for Romanian Wikipedia - phab:T156503

- Testing the sentiment feature on edits - phab:T170177

ORES

- Add timeout test for functions taking too long - phab:T170205
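As a rough illustration of what a timeout check for slow functions can look like, here is a minimal sketch. It is not the ORES test from phab:T170205: the helper `run_with_timeout` and the functions `fast_feature` and `slow_feature` are hypothetical names, and the thread-based approach is just one assumption about how such a limit could be enforced.

```python
import concurrent.futures
import time


def run_with_timeout(func, seconds, *args, **kwargs):
    """Run func in a worker thread and raise TimeoutError if it exceeds `seconds`.

    The worker thread is not killed on timeout; it keeps running in the
    background until func returns.
    """
    executor = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = executor.submit(func, *args, **kwargs)
    try:
        return future.result(timeout=seconds)
    except concurrent.futures.TimeoutError:
        raise TimeoutError(f"{func.__name__} exceeded {seconds}s") from None
    finally:
        # Do not block on the worker; the caller gets control back at once.
        executor.shutdown(wait=False)


def fast_feature():
    """Hypothetical function that finishes well within the limit."""
    return 42


def slow_feature():
    """Hypothetical function that takes too long."""
    time.sleep(0.5)
    return 42
```

A test can then assert that `run_with_timeout(fast_feature, 1.0)` returns normally and that `run_with_timeout(slow_feature, 0.05)` raises `TimeoutError`.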

New Page Patrol Research


Research on the new page reviewer user right on Wikipedia, introduced in November 2016:

- The number of re-reviews that patrollers perform - link

- Number of people who performed new page patrol - link

Undergraduate Thesis

My undergraduate thesis was on the WSDM Cup 2017 triple scoring task. The poster can be found here.

More information is available in the blog post. The work will be published in the short paper/poster track of ICON 2017.