WikiXRay

This page is outdated, but if it was updated, it might still be useful. Please help by correcting, augmenting and revising the text into an up-to-date form. Note: The source code seems to be unavailable. |

The main goal of this project is to develop a robust and extensible software tool for an in-depth quantitative analysis of the whole Wikipedia project. This project is currently developed by José Felipe Ortega (GlimmerPhoenix) at the Libresoft Group, at Universidad Rey Juan Carlos.

Currently, this tool includes a set of Python and GNU R scripts to obtain statistics, graphics and quantitative results for any Wikipedia language version. Current functionality includes:

- Downloading the 7zip database dump of the target language version.

- Construction and decompression of the database dump in a local storage media.

- Creating additional database tables with useful statistics and quantitative information.

- Generating graphics and data files with quantitative results, adequately organized in a per-language directory substructure.

Some of these capabilities still require manual insertion of parameters in a common configuration file, though also some of them work automatically.

The source code is publicly available under the GNU GPL license, and could be found in LibreSoft tools git repository, or alternatively on the WikiXRay project at Gitorious.

Please note that this software is still in a very early development stage (pre-alpha level). Any useful contributions will be of course welcomed (first contact with the project administrators).

Following, we summarize some of the most relevant results we have obtained so far. These results comes from a quantitative analysis focused on the top 10 Wikipedias (attending to their total number of articles).

Python Parser[edit]

WikiXRay comes with a Python parser to process compressed XML dumps of Wikipedia. More information in WikiXRay/Python parser.

Article size distribution (HISTOGRAMS)[edit]

Regarding articles size, one of the most interesting aspects to study is their distribution for each language. We can plot the histogram for the article size distribution in each language to evaluate the most frequent size intervals.

As we can see, one common feature is that in each histogram we can identify two different populations. The lower half represents the tiny articles redirects population, most probably composed by stubs. The higher half represents the standard articles population.

-

Articles size histogram English Wikipedia

-

Articles size histogram German Wikipedia

-

Articles size histogram French Wikipedia

-

Articles size histogram Japanese Wikipedia

-

Articles size histogram Polish Wikipedia

-

Articles size histogram Dutch Wikipedia

-

Articles size histogram Italian Wikipedia

-

Articles size histogram Portuguese Wikipedia

-

Articles size histogram Swedish Wikipedia

-

Articles size histogram Spanish Wikipedia

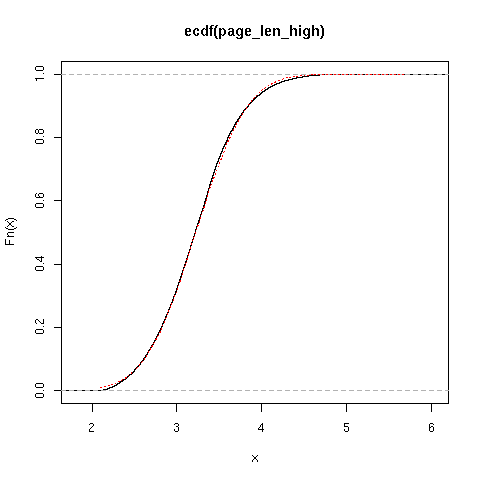

We can also explore the statistical distribution exhibited in each half (stubs redirects population and standard population). This can be achieved working out the empirical cumulative distribution function (ecdf) for each half in every histogram. Results show normal distributions.

-

ECDF for article size in English Wikipedia

-

ECDF for article size in German Wikipedia

-

ECDF for article size in French Wikipedia

-

ECDF for article size in Japanese Wikipedia

-

ECDF for article size in Polish Wikipedia

-

ECDF for article size in Dutch Wikipedia

-

ECDF for article size in Italian Wikipedia

-

ECDF for article size in Portuguese Wikipedia

-

ECDF for article size in Swedish Wikipedia

-

ECDF for article size in Spanish Wikipedia

We can appreciate the normal distribution comparing the ECDF of each population for each language with the ECDF of a perfectly normal distribution (red line).

[edit]

Bots have a significant effect in the number of edits received in Wikipedia. The following figures present the percentage of total number of edits from logged users and the percentage of total number of edits (including annonymous users) generated by bots in the top-ten Wikipedias.

Correlation between relevant parameters[edit]

The following graphics show the relationship between number of different authors who has contributed to a certain article, and the article size (in bytes).

-

Diff authors against page len German

-

Diff authors against page len French

-

Diff authors against page len Japanese

-

Diff authors against page len Polish

-

Diff authors against page len Dutch

-

Diff authors against page len Italian

-

Diff authors against page len Portuguese

-

Diff authors against page len Swedish

-

Diff authors against page len Spanish