Abstract Wikipedia/Plan/ar

This page is kept for historical interest. Any policies mentioned here may be outdated. If you want to revive the topic, you can use the talk page or start a discussion on the community forum.



This proposal is a draft and is expected to undergo significant changes based on decisions and discussions with the communities and other stakeholders. This in turn means that comments and discussions are more than welcome, to improve and shape the proposal.

In Abstract Wikipedia, "abstract content" is represented in a language-independent form that can be edited directly by the community. Local Wikipedias can access and enrich their locally controlled content with "abstract content" from Abstract Wikipedia. This way, the language editions of Wikipedia can, with much less effort, provide much more content to their readers: content that is more comprehensive, more up to date, and more thoroughly vetted than most local Wikipedias could provide.

Given the results of research and prototypes in the field of natural language generation, it is unfortunately true that generating natural language from language-independent "abstract content" requires a Turing-complete system. But in order to cover the number of languages that Wikipedia needs to cover, building this system must be crowdsourced. We therefore introduce Wikifunctions, a project to create, catalog, and maintain an open library (or repository) of "functions", which have many possible use cases.

The main use case is the development of "Renderers" (i.e. certain functions hosted or referenced in Wikifunctions) that transform language-independent "abstract content" into natural language, using the linguistic and ontological knowledge available in Wikidata (or knowledge from other open data sources with licensing suitable for use in Wikipedia, potentially including data from Wikimedia projects such as Wiktionary or Wikispecies).

The project will start by creating Wikifunctions, and then use it to enable the creation of Abstract Wikipedia. Wikifunctions will launch within the first year, and we will add the development of Abstract Wikipedia in the second year. After two years, we will have created an ecosystem that allows the creation and maintenance of language-independent "abstract content" and the integration of that content into Wikipedia, considerably increasing the coverage, currency, and accuracy of many individual Wikipedias. This would bring us considerably closer to a world where everyone can share in the sum of all knowledge.

"Wikifunctions" was chosen as the name for the new wiki in the wiki of functions naming contest.

All names – Abstract Wikipedia and Wikilambda – are preliminary and meant mostly for writing this proposal and discussing it.

The current names are based on the following ideas:

- Abstract Wikipedia: the content in Abstract Wikipedia is abstracted away from any concrete language.

- Wikilambda: this is based on the idea that all functions can be grounded in the lambda calculus. Also, Wλ looks kinda technophile ("nerdy"), with the risk of being perceived as not catering to the intended audience and as being run, with bias, only by specialists who are already advantaged by having access to the most knowledge available in their own culture.

Note that the name "Abstract Wikipedia" will not actually stick. When the project is done, Abstract Wikipedia will simply be a part of Wikidata. It is merely a name for the development work, so the naming is not crucial. Wikilambda, on the other hand, would be a new Wikimedia project, so its name would have fairly high visibility. It would be good to come up with a good name for it.

== Objections ==

Three good reasons against the name Wikilambda have been raised so far:

- It is really hard to spell for many people (says Effeietsanders).

- Some people keep reading it as Wikilambada (says Jean-Frédéric).

- It is also easily misread as WikiIambda / Wikiiambda (that is, with an uppercase I / lowercase i instead of L / l), so it should at least be WikiLambda with a capital L (suggested by Fuzheado).

== Proposed alternatives ==

Alternative names that have been considered or suggested:

This is an archive. New names should be proposed in the wiki of functions naming contest.

Further suggestions are welcome.

In fact, the project's first task, P1.1, will be to decide together with the community on a name and a logo. There is precedent for this in earlier projects (the Wikidata logo, the Wikivoyage name).

The project has ambitious primary goals and a whole set of secondary goals.

Primary goals

- Allowing more people to read more content in the language they choose.

- Allowing more people to contribute content for more readers, and thus increasing the reach of underrepresented contributors.

Secondary goals

Secondary goals include, but are not limited to:

- Reusable and well-tested natural language generation.

- Allowing other Wikimedia communities and external parties to create content in more languages.

- Improving communication and knowledge accessibility well beyond the Wikipedia projects.

- Developing a novel, much more comprehensive approach to knowledge representation.

- Developing a novel approach to representing the result of natural language understanding.

- A library of functions.

- Allowing the development and sharing of functions in the user’s native languages, instead of requiring them to learn English first.

- Allowing everyone to share in functions and to run them.

- Introducing a new form of knowledge asset for a Wikimedia project to manage.

- Introducing novel components to Wikipedia and other Wikimedia projects that allow for interactive features.

- Creating functions that work on top of Wikidata’s knowledge base, adding inference to considerably increase the coverage of Wikidata data.

- Catalyzing research and development in democratizing coding interfaces.

- Enabling scientists and analysts to share and work on models collaboratively.

- Sharing specifications and tests for functions.

- The ability to refer to the semantics of functions through well-defined identifiers.

- Faster development of new programming languages thanks to access to a wider standard library (or repository) of functions in a new dedicated wiki.

- Defining algorithms and approaches for standards and for describing techniques.

- Providing access to powerful functions to be integrated in novel machine learning systems.

The list is not exhaustive.

We assume a core team, employed by a single hosting organization, that will work exclusively on Wikifunctions and Abstract Wikipedia. It will be supported by other departments of the hosting organization, such as human resources, legal, etc.

The team will explicitly be set up to be open and welcoming to external contributions to the code base. These may be volunteers or paid (e.g. through grants to movement bodies or by other organizations or companies). We aim to offer volunteers preferred treatment in order to increase the chances of creating the healthy volunteer communities that we need for a project of such ambition.

The project will be developed in the open. The team's communication channels will be public as far as possible, and so will the communication guidelines. This will help create a development team that communicates publicly and can integrate external contributions to the code base.

The following strong requirements are based on the principles and practices of the Wikimedia movement:

- Abstract Wikipedia and Wikifunctions will be Wikimedia projects, maintained and operated by the Wikimedia Foundation. This mandates that Abstract Wikipedia and Wikifunctions will follow the founding principles and guidelines of the Wikimedia movement.

- The software to run Abstract Wikipedia and Wikifunctions will be developed under an Open Source license, and will depend only on software that is Open Source.

- The setup for Abstract Wikipedia and Wikifunctions should blend into the current Wikimedia infrastructure as easily as possible. This means that we should fit into the same deployment, maintenance, and operations infrastructure as far as possible.

- All content of Abstract Wikipedia and Wikifunctions will be made available under free licenses.

- The success of Abstract Wikipedia and Wikifunctions is measured by the creation of healthy communities and by how much knowledge is being made available in languages that previously did not have access to this knowledge.

- Abstract Wikipedia will follow the principles defined by many of the individual Wikipedias: in particular, neutral point of view, verifiability, notability, and no original research (further developed by Community Capacity Development and by the communities for each local wiki).

- Abstract Wikipedia and Wikifunctions will be fully internationalized, and available and editable in all the languages of the Wikimedia projects. Whether it is fully localized depends on the communities.

- The primary goal is supporting local Wikipedias, Wikidata, and other Wikimedia projects, in this order. The secondary goal is to grow our own communities. Tertiary goals are to support the rest of the world.

- The local Wikipedia communities must be in control of how much Abstract Wikipedia affects them. If they don’t want to be affected by it, they can entirely ignore it and nothing changes for them.

The developers of Abstract Wikipedia do not decide on the content of Abstract Wikipedia, just as the developers of MediaWiki do not decide on the content of Wikipedia. Unlike in other Wikimedia projects, however, the developers will take an active role in setting up and kick-starting the initial set of types and functions, creating the functions in Wikifunctions that Abstract Wikipedia needs, and helping to get the communities for each language's Renderers started. The development team of Abstract Wikipedia and Wikifunctions will therefore initially be more involved with the projects than is usual, but aims to hand all of this over to the communities sooner rather than later.

The following requirements serve as strong guidelines for the design and development of Abstract Wikipedia:

- Abstract Wikipedia and Wikifunctions are a socio-technical system. Instead of trying to be overly intelligent, we rely on the Wikimedia communities.

- The first goal of Abstract Wikipedia and Wikifunctions is to serve actual use cases in Wikipedia, not to enable some form of hypothetical perfection in knowledge representation or to represent all of human language.

- Abstract Wikipedia and Wikifunctions have to balance ease of use and expressiveness. The user interface should not get complicated to merely cover a few exceptional edge cases.

- What counts as an exceptional case will be defined by how often it appears in Wikipedia. Instead of relying on anecdotal evidence or hypothetical examples, we will analyse Wikipedia to see how frequent specific cases are.

- Let's be pragmatic. Deployed is better than perfect.

- Abstract Wikipedia and Wikifunctions will provide a lot of novel data that can support external research, development, and use cases. We want to ensure that it is easily usable.

- Wikifunctions will provide an API to call any of the functions defined in it, but there will be a limit on the computational resources it offers.

- Abstract Wikipedia and Wikifunctions will be editable by humans and bots alike. However, bot operators must be aware of their heightened responsibility not to overwhelm the community.

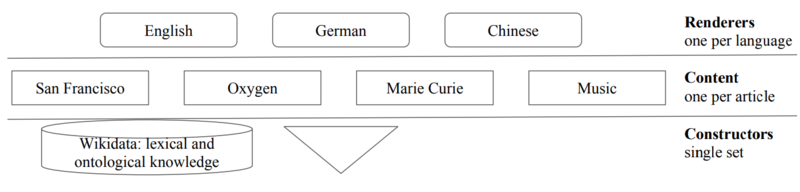

The main components of the project are the following three:

- Constructors – definitions of Constructors and their slots, including what they mean and restrictions on the types for the slots and the return type of the Constructor (e.g. define a Constructor rank that takes in an item, an item type, the ranking as a number, what it is ranked by, and a local constraint).

- Content – abstract calls to Constructors, including fillers for the slots (e.g. rank(SanFrancisco, city, 4, population, California)).

- Renderers – functions that take Content and a language and return a text, resulting in the natural language representing the meaning of the Content (e.g. in the given example it results in "San Francisco is the fourth largest city by population in California.").
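To make the division of labor between the three components concrete, here is a minimal sketch. The Constructor and Content classes, the render_en function, and the hard-coded label and ordinal tables are hypothetical illustrations, not the Wikifunctions data model; a real Renderer would draw on Lexemes in Wikidata instead of literal strings.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Constructor:
    """Hypothetical: a Constructor names its slots and the type each slot expects."""
    name: str
    slots: tuple  # tuple of (slot_name, type) pairs

@dataclass(frozen=True)
class Content:
    """Hypothetical: an abstract call to a Constructor with fillers for its slots."""
    constructor: Constructor
    fillers: dict

    def __post_init__(self):
        # Enforce the Constructor's type restrictions when Content is created.
        for slot, slot_type in self.constructor.slots:
            if not isinstance(self.fillers.get(slot), slot_type):
                raise TypeError(f"slot {slot!r} expects {slot_type.__name__}")

RANK = Constructor("rank", (("item", str), ("item_type", str), ("rank", int),
                            ("ranked_by", str), ("local_constraint", str)))

# Hard-coded tables stand in for lexicographic knowledge from Wikidata.
ORDINALS_EN = {1: "", 2: "second ", 3: "third ", 4: "fourth "}
LABELS_EN = {"SanFrancisco": "San Francisco", "California": "California"}

def render_en(content: Content) -> str:
    """A toy English Renderer that only understands the rank Constructor."""
    f = content.fillers
    return (f"{LABELS_EN[f['item']]} is the {ORDINALS_EN[f['rank']]}largest "
            f"{f['item_type']} by {f['ranked_by']} in {LABELS_EN[f['local_constraint']]}.")

sf = Content(RANK, {"item": "SanFrancisco", "item_type": "city", "rank": 4,
                    "ranked_by": "population", "local_constraint": "California"})
print(render_en(sf))  # San Francisco is the fourth largest city by population in California.
```

The point of the split is that RANK and sf are language-independent, so supporting another language means writing only another render function.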

There are four main possibilities for where to implement the three main components:

- Solution 1: Constructors, Content, and Renderers are all implemented in Wikidata.

- Solution 2: Constructors and Renderers are implemented in Wikifunctions, and the Content in Wikidata, next to the corresponding Item.

- Solution 3: Constructors, Content, and Renderers are all implemented in Wikifunctions.

- Solution 4: Constructors and Content are implemented in Wikidata, and the Renderers in the local editions of Wikipedia.

Many functions can be shared between the different languages, and Solution 4 has the disadvantage that moving the Renderers and functions to the local Wikipedias forfeits that possibility. Also, by relegating the Renderers to the local Wikipedias, we would miss out on the potential that an independent catalog of functions could achieve.

We think it is advantageous for communication and community building to introduce a new project, Wikifunctions, for a new form of knowledge asset, functions, which include Renderers. This argues for Solutions 2 and 3.

Solution 3 requires us to create a new place for every possible Wikipedia article in the wiki of functions. Given that a natural place for this already exists with the Items in Wikidata, it would be more convenient to use that and store the Content together with the Items in Wikidata.

For these reasons, we favor Solution 2 and assume it for the rest of the proposal. If we switch to another solution, the project plan can easily be adapted (except for Solution 4, which would require considerable rewriting). Note that Solution 2 requires the agreement of the Wikidata community to proceed. If they disagree, Solution 3 is likely the next closest option.

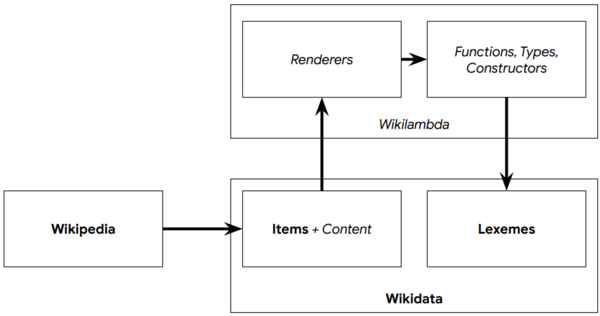

The proposed architecture for the multilingual Wikipedia looks as follows. Wikipedia calls the Content which is stored in Wikidata next to the Items. We call this extension of Wikidata Abstract Wikipedia. Note that this is merely a name for the development project, and that this is not expected to be a name that sticks around - there won’t be a new Wikiproject of that name. With a call to the Renderers in Wikifunctions, the Content gets translated into natural language text. The Renderers rely on the other Functions, Types, and Constructors in Wikifunctions. Wikifunctions also can call out to the lexicographic knowledge in the Lexemes in Wikidata, to be used in translating the Content to text. Wikifunctions will be a new Wikimedia project on par with Commons, Wikidata, or Wikisource.

(The components named in italics are to be added by this proposal, the components in bold already exist. Top level boxes are Wikimedia projects, inner boxes are parts of the given Wikimedia projects.) ("Wikilambda" was the working name for what is now known as "Wikifunctions".)

We need to extend the Wikimedia projects in three places:

- in the local editions of Wikipedia and other client projects using the new capabilities offered,

- in Wikidata, for creating Content (Abstract Wikipedia), and

- in a new project, Wikifunctions, aimed to create a library of functions.

Extensions to local Wikipedias

Each local Wikipedia can choose, as decided by its local community, one of the following three options:

- implicit integration with Abstract Wikipedia;

- explicit integration with Abstract Wikipedia;

- no integration with Abstract Wikipedia.

The extension for local Wikipedias has the following functionalities: one new special page, two new features, and three new magic words.

F1: New special page: Abstract

A new special page will be available on each local Wikipedia, that is used with a Q-ID or the local article name and an optional language (which defaults to the language of the local Wikipedia). Example special page URLs look like the following:

https://en.wikipedia.org/wiki/Special:Abstract/Q62

https://en.wikipedia.org/wiki/Special:Abstract/Q62/de

https://en.wikipedia.org/wiki/Special:Abstract/San_Francisco

https://en.wikipedia.org/wiki/Special:Abstract/San_Francisco/de

If the special page is called without parameters, then a form is displayed that allows for selecting a Q-ID and a language (pre-filled to the local language).

The special page displays the Content from the selected Q-ID or the Q-ID sitelinked to the respective article rendered in the selected language.
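A sketch of how the special page could interpret the part of the URL after Special:Abstract/. It assumes that an optional trailing segment shaped like a language code selects the language and that article titles are mapped to Q-IDs through sitelinks. The SITELINKS table, the language-code pattern, and the function name are hypothetical; the real page would query Wikidata.

```python
import re
from typing import Optional, Tuple

# Hypothetical stand-in for the sitelink lookup against Wikidata.
SITELINKS = {"San_Francisco": "Q62"}

QID = re.compile(r"Q[1-9][0-9]*")
LANG = re.compile(r"[a-z]{2,3}(-[a-z]+)*")  # assumption: rough shape of a language code

def parse_abstract_path(path: str, wiki_lang: str) -> Optional[Tuple[str, str]]:
    """Return (Q-ID, language) for a Special:Abstract subpage,
    or None when the selection form should be shown instead."""
    if not path:
        return None  # called without parameters: display the form
    target, lang = path, wiki_lang  # language defaults to the local wiki's
    head, sep, tail = path.rpartition("/")
    if sep and LANG.fullmatch(tail):
        target, lang = head, tail
    if QID.fullmatch(target):
        return target, lang
    qid = SITELINKS.get(target.replace(" ", "_"))
    if qid is None:
        raise LookupError(f"no Wikidata item is sitelinked to {target!r}")
    return qid, lang
```

With these assumptions, all four example URLs resolve to Q62, with the language either "de" or the wiki default.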

F2: Explicit article creation

If the local Wikipedia chooses to go for the option of integrating with Abstract Wikipedia through explicit article creation, this is how they do it.

The contributor goes to an Item on Wikidata that does not yet have a sitelink to the target local Wikipedia and adds a sitelink to a page that does not exist yet. This specifies the name of the article. For example, if Q62 did not yet have an article in English, and thus no sitelink either, they might add the sitelink San_Francisco for en.wikipedia.

On the local Wikipedia, this creates a virtual article in the main namespace. That article has the same content as the special page described above, but it is found under the usual URL, i.e. https://en.wikipedia.org/wiki/San_Francisco.

Links to that article, using the newly specified name, look just like any other links, i.e. a link to [[San Francisco]] will point to the virtual article, be blue, etc. Such articles are indexed for search in the given Wikipedia and for external search too.

If a user clicks on editing the article, they can choose to either go to Wikidata and edit the abstract Content (preferred), or start a new article in the local language from scratch, or materialize the current translation as text and start editing that locally.

If an existing local article with a sitelink is deleted, a virtual article is automatically created (since we know the name and can retain the links).

In order to delete a virtual article, the sitelink in Wikidata needs to be deleted.

All changes to the local Wikipedia have to be done explicitly, which is why we call this the explicit article creation option. We expect to make this the default option for the local Wikipedias, unless they choose either implicit article creation or no integration.
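The explicit option boils down to a lookup order for a requested title. A real local article takes precedence. Otherwise, a sitelink to a not-yet-existing page produces a virtual article rendered from the abstract Content. The sketch below uses a hypothetical in-memory Wiki class in place of the local page table and the Wikidata sitelinks.

```python
from dataclasses import dataclass
from typing import Dict, Optional

@dataclass
class Wiki:
    """Hypothetical model of one local Wikipedia using explicit article creation."""
    local_pages: Dict[str, str]  # title -> wikitext of real local articles
    sitelinks: Dict[str, str]    # title -> Q-ID, as recorded on Wikidata

    def resolve(self, title: str) -> Optional[str]:
        if title in self.local_pages:
            return "local"
        if title in self.sitelinks:
            # No local page, but a sitelink exists: serve the virtual article,
            # i.e. the same output as Special:Abstract/<Q-ID>.
            return f"virtual:{self.sitelinks[title]}"
        return None  # an ordinary red link

    def delete_local(self, title: str) -> None:
        # The sitelink survives, so the title falls back to a virtual article.
        del self.local_pages[title]

    def remove_sitelink(self, title: str) -> None:
        # Removing the sitelink is the only way to delete a virtual article.
        self.sitelinks.pop(title, None)
```

Deleting a local article therefore never leaves dangling links behind, which matches the behavior described above.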

See also the discussion on the integration here.

F3: Implicit article creation

If a local Wikipedia opts in to implicit article creation from Wikidata, then for every Wikidata Item that has no sitelink to that Wikipedia but would render content in its language, the result of calling the Abstract special page is indexed and made available in search as if it were in the Main namespace.

A new magic word is introduced to link to virtual articles from normal articles, see F6 LINK_TO_Q. This can be integrated invisibly into the visual editor.

This is by far the least work for the community to gain a lot of articles, and might be a good option for small communities.

F4: Links or tabs

Every article on a local Wikipedia that is connected to a Wikidata item receives a new link, either as a tab on the top or a link in the sidebar. That link displays the Content for the connected Wikidata item rendered in the local language. Virtual articles don’t have this tab, but their Edit button links directly to editing the Content in Abstract Wikipedia.

F5: New magic word: ABSTRACT_WIKIPEDIA

The magic word is replaced with the wikitext resulting from Rendering the Content on the Wikidata item that is connected to this page through sitelinks.

The magic word can be used with two optional parameters, one being a Q-ID, the other a language. If no Q-ID is given, the Q-ID defaults to the Item this page is linked to per Sitelink. If no language is given, the language defaults to the language of the given wiki.

Example calls:

{{ABSTRACT_WIKIPEDIA}}

{{ABSTRACT_WIKIPEDIA:Q62}}

{{ABSTRACT_WIKIPEDIA:Q62/de}}

If no Q-ID is given and none can be determined by default, an error message appears.

Later, this will also allow selecting named sections from the Content.

Wikipedias that choose to have no integration to Abstract Wikipedia can still use this new magic word.

Note that the introduction of a new magic word is a preliminary plan. Task 2.3 will investigate whether we can achieve its functionality without doing so.
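The defaulting rules for the two optional parameters can be sketched as follows. The function name is hypothetical. arg is whatever follows ABSTRACT_WIKIPEDIA: (for example Q62/de, or the empty string), and page_qid is the Item the current page is sitelinked to, if there is one.

```python
from typing import Optional, Tuple

def abstract_wikipedia_args(arg: str, page_qid: Optional[str],
                            wiki_lang: str) -> Tuple[str, str]:
    """Resolve {{ABSTRACT_WIKIPEDIA}}, {{ABSTRACT_WIKIPEDIA:Q62}} and
    {{ABSTRACT_WIKIPEDIA:Q62/de}} into an explicit (Q-ID, language) pair."""
    qid_part, _, lang_part = arg.partition("/")
    qid = qid_part or page_qid      # default: the Item sitelinked to this page
    lang = lang_part or wiki_lang   # default: the language of this wiki
    if not qid:
        # Neither given nor derivable: the magic word renders an error message.
        raise ValueError("ABSTRACT_WIKIPEDIA: no Q-ID given and page has no sitelink")
    return qid, lang
```

A page on a wiki with no integration can still pass an explicit Q-ID, so page_qid may be None there.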

F6: New magic word: LINK_TO_Q

This magic word turns into a link either to the local article that is sitelinked to the given Q-ID or, if none exists, to the Abstract special page with the given Q-ID. This allows writing articles with links to virtual articles, which are replaced automatically once local content is created.

Example call:

{{LINK_TO_Q:Q62}}

will result in

[[San Francisco]]

if the article exists, otherwise in

[[Special:Abstract/Q62|San Francisco]]

Note that the introduction of a new magic word is a preliminary plan. Task 2.3 will investigate whether we can achieve its functionality without doing so.
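The expansion rule can be written as a small function. This sketch assumes that the display text of the fallback link is the Item's label in the wiki's language, which matches the example output. Both lookup dictionaries are hypothetical stand-ins for Wikidata queries.

```python
from typing import Dict

def link_to_q(qid: str, local_titles: Dict[str, str], labels: Dict[str, str]) -> str:
    """Expand {{LINK_TO_Q:<qid>}} into wikitext.

    local_titles: Q-ID -> title of the sitelinked local article, if one exists.
    labels: Q-ID -> label in the wiki's language (falls back to the Q-ID)."""
    title = local_titles.get(qid)
    if title is not None:
        return f"[[{title}]]"
    return f"[[Special:Abstract/{qid}|{labels.get(qid, qid)}]]"
```

Because the decision is made at render time, the link switches to the local article automatically once it is created, without editing the referring pages.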

F7: New magic word: LAMBDA

This calls a function specified in Wikifunctions, together with its parameters, and renders the output on the page.

For example, the following call:

{{LAMBDA:capitalize("san francisco")}}

will output "San Francisco" on the page (assuming that there is a function with the local key capitalize and with the expected definition and implementation). The call is parsed using the language of the local wiki.

We should also consider allowing calls to a specific version of a function, in order to reduce breakage downstream.

Note that the introduction of a new magic word is a preliminary plan. Task 2.3 will investigate whether we can achieve its functionality without doing so.
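A minimal sketch of how a LAMBDA call might be dispatched. It assumes a registry that maps local-language keys to implementations and handles only the single string argument form used in the example. The registry, the regular expression, and the capitalize implementation (which capitalizes every word, as the example output implies) are all hypothetical. Parsing nested calls would be the job of the tooling planned in P1.11.

```python
import re
from typing import Callable, Dict

# Hypothetical registry: local-language key -> implementation.
REGISTRY: Dict[str, Callable[[str], str]] = {
    "capitalize": lambda s: " ".join(w[:1].upper() + w[1:] for w in s.split(" ")),
}

# Matches {{LAMBDA:key("argument")}} with exactly one double-quoted argument.
CALL = re.compile(r'\{\{LAMBDA:(\w+)\("([^"]*)"\)\}\}')

def expand_lambda(wikitext: str) -> str:
    """Replace each LAMBDA call in the wikitext with the function's output."""
    def run(match: re.Match) -> str:
        key, arg = match.group(1), match.group(2)
        if key not in REGISTRY:
            return f'<span class="error">Unknown function: {key}</span>'
        return REGISTRY[key](arg)
    return CALL.sub(run, wikitext)
```

Unknown keys produce an inline error rather than failing the whole page render, which is one plausible design choice among several.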

Extensions to Wikidata

We add a new auxiliary namespace alongside the main namespace of Wikidata. That is, every Item page of the form www.wikidata.org/wiki/Q62 will also receive an accompanying Content page www.wikidata.org/wiki/Content:Q62. That page contains the abstract, language-independent Content and allows it to be edited and maintained.

Additional Special pages might be needed. This will be extended in the second part of the project. Using Wikidata to store the abstract Content requires the agreement of the Wikidata community; if they disagree, another location will be chosen.

F8: New namespace: Content

A new namespace with many complex interactive editing features. It provides the UX to create and maintain Content, as well as features to evaluate the Content (e.g. showing how much of it can be displayed in each language). This is mostly a subset of the functionality of the F11 Function namespace.
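One of the evaluation features, showing how much of an Item's Content each language can display, could be computed as in the following sketch. It makes two hypothetical simplifications. Content is modeled as a list of Constructor names, one per abstract sentence. A language can render a sentence exactly when it has a Renderer for that sentence's Constructor.

```python
from typing import Dict, List, Set

def coverage(content: List[str], renderers: Dict[str, Set[str]]) -> Dict[str, float]:
    """Fraction of the Content's Constructor calls that each language can render.

    content: Constructor names, one per abstract sentence (hypothetical model).
    renderers: language code -> names of Constructors that have a Renderer."""
    if not content:
        return {lang: 1.0 for lang in renderers}
    return {lang: sum(c in names for c in content) / len(content)
            for lang, names in renderers.items()}
```

For example, with four sentences of which German can render two, German coverage is 0.5.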

F9: New data type: Content

A new datatype that contains a (short) Content. The main use case is for the Descriptions in Items and for Glosses in the Senses of Lexemes.

F10: Index and use Descriptions in Items and Glosses in Senses

Index and surface the linearizations of Description fields for Items and Glosses in Senses, and also ensure that the Description fields of Items produce no duplicate Label/Description pairs. Allow both to be overridden by manual edits.
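The uniqueness requirement can be checked once Descriptions have been rendered. In this sketch, items maps Q-IDs to a Label and a rendered Description in one language, and a manual override replaces the rendered text. All names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

def duplicate_pairs(items: Dict[str, Tuple[str, str]],
                    overrides: Dict[str, str]) -> Dict[Tuple[str, str], List[str]]:
    """Return every (Label, Description) pair that is shared by more than one Item.

    items: Q-ID -> (Label, Description rendered from Content).
    overrides: Q-ID -> manually edited Description, which wins over rendering."""
    seen: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for qid, (label, rendered) in items.items():
        seen[(label, overrides.get(qid, rendered))].append(qid)
    return {pair: qids for pair, qids in seen.items() if len(qids) > 1}
```

A manual override is one way to resolve a clash, although, as the second case in the test shows, it can also create a new one.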

Extensions to other Wikimedia projects

Other Wikimedia projects will also receive F7 LAMBDA and F5 ABSTRACT_WIKIPEDIA magic words, but none of the other functionalities, as these seem not particularly useful for them. This may change based on requests from the given communities.

Extensions for the new wiki of functions

Wikifunctions will be a new Wikimedia project on a new domain. The main namespace of Wikifunctions will be the novel Function namespace. The rest of Wikifunctions will be a traditional Wikimedia wiki.

F11: New namespace: Function

Allowing for the storage of functions, types, interfaces, values, tests, etc. There is a single namespace that contains constants (such as types or single values), function interfaces, function implementations, and thus also Constructors and Renderers. The entities in this namespace are named by Z-IDs, similar to Q-IDs of Wikidata items, but starting with a Z and followed by a number.

There are many different types of entities in the Z namespace. These include types and other constants (which are basically functions of zero arity), as well as classical functions with a positive arity.

Contributors can create new types of functions within the Function namespace and then use these.

Functions can have arguments. Functions with their arguments given can be executed and result in a value whose type is given by the function definition.
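A minimal model of these ideas might look like the following sketch. Constants are functions of zero arity, every entity has a Z-ID, and the function definition fixes the type of the result. The Z-ID pattern follows the description above. The ZFunction class and the Z1001/Z1002 entries are hypothetical, and the real storage format is a constrained JSON object, not a Python class.

```python
import re
from dataclasses import dataclass
from typing import Callable, Tuple

ZID = re.compile(r"Z[1-9][0-9]*")  # a Z followed by a number

@dataclass(frozen=True)
class ZFunction:
    zid: str
    arg_types: Tuple[type, ...]  # an empty tuple means a constant (zero arity)
    return_type: type
    impl: Callable

    def __post_init__(self):
        if not ZID.fullmatch(self.zid):
            raise ValueError(f"not a Z-ID: {self.zid!r}")

    @property
    def arity(self) -> int:
        return len(self.arg_types)

    def __call__(self, *args):
        if len(args) != self.arity:
            raise TypeError(f"{self.zid} expects {self.arity} argument(s)")
        for value, expected in zip(args, self.arg_types):
            if not isinstance(value, expected):
                raise TypeError(f"{self.zid}: expected {expected.__name__}")
        result = self.impl(*args)
        # The function definition determines the type of the resulting value.
        if not isinstance(result, self.return_type):
            raise TypeError(f"{self.zid}: bad return type")
        return result

TRUE = ZFunction("Z1001", (), bool, lambda: True)              # a constant
SUCCESSOR = ZFunction("Z1002", (int,), int, lambda n: n + 1)   # positive arity
```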

The Function namespace is complex, and will have very different views depending on the type of the function, i.e. for interfaces, implementations, tests, types, values, etc. there will be different UX on top of them, although they are internally all stored as Z-Objects. Eventually, the different views are all generated by functions in Wikifunctions.

It will be possible to freeze and thaw entities in the Function namespace. This is similar to a protected page, but only restricts the editing of the value part of the entity, not the label, description, etc.
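The difference from ordinary page protection is that only the value is locked. A hypothetical sketch follows. Who may freeze or thaw an entity is not modeled here and would presumably be governed by community permissions, as with protection.

```python
from dataclasses import dataclass, field
from typing import Any, Dict

@dataclass
class Entity:
    """Hypothetical Function-namespace entity: editable metadata plus a value part."""
    zid: str
    value: Any
    labels: Dict[str, str] = field(default_factory=dict)
    frozen: bool = False

    def set_label(self, lang: str, text: str) -> None:
        # Labels, descriptions, etc. remain editable even while frozen.
        self.labels[lang] = text

    def set_value(self, value: Any) -> None:
        if self.frozen:
            raise PermissionError(f"{self.zid} is frozen; thaw it to edit its value")
        self.value = value

    def freeze(self) -> None:
        self.frozen = True

    def thaw(self) -> None:
        self.frozen = False
```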

F12: New special pages and API modules

New Special pages and API modules will be created to support the new Function namespace. In particular, this will include a special page and an API module for evaluating functions with given parameters. It will also include numerous special pages and APIs supporting the maintenance of the content (such as searching by number and types of parameters, pages with statistics on how often certain implementations are called, test pages, etc.). The goal is to implement as many of these as possible inside Wikifunctions.

Development happens in two main parts. Part P1 is about getting Wikifunctions up and running, and sufficiently developed to support the Content creation required for Part P2. Part P2 is then about setting up the creation of Content within Wikidata, and allowing the Wikipedias to access that Content. After that, there will be ongoing development to improve both Wikifunctions and Abstract Wikipedia. This ongoing development is not covered by this plan. Note that all timing depends on how much headcount we actually work with.

In the first year, we will work exclusively on Part P1. Part P2 starts with the second year and adds additional headcount to the project. Parts P1 and P2 will then be developed in parallel for the next 18 months. Depending on the outcome and success of the project, the staffing of the further development has to be decided around month 24, and reassessed regularly from then on.

This plan covers the initial 30 months of development. The main deliverables after that time will be:

- Wikifunctions, a new WMF project and wiki, with its own community, and its own mission, aiming to provide a catalog of functions and allow everyone to share in that catalog, thus empowering people by democratizing access to computational knowledge.

- A cross-wiki repository to share templates and modules between the WMF projects, which is a long-standing wish by the communities. This will be part of Wikifunctions.

- Abstract Wikipedia, a cross-wiki project that allows defining Content in Wikidata independently of any natural language, and that is integrated into several local Wikipedias, considerably increasing the amount of knowledge that speakers of currently underserved languages can share in.

Part P1: Wikifunctions

Task P1.1: Project initialization

We will set up wiki pages on Meta, bug components, mailing lists, chat rooms, an advisory board, and other relevant means of discussion with the wider community. We will start the discussion on, and decide on, the names for Wikifunctions and Abstract Wikipedia, hold contests for the logos, organize the creation of a code of conduct, and take the other steps necessary for a healthy community.

We also need to kick off the community process of defining the license choice for the different parts of the project: abstract Content in Wikidata, the functions and other entities in Wikifunctions, as well as the legal status of the generated text to be displayed in Wikipedia. This needs to be finished before Task P1.9. This decision will need input from legal counsel.

Task P1.2: Initial development

The first development step is to create the Wikifunctions wiki with a Function namespace that allows the storage and editing of Z-Objects (more constrained JSON objects - in fact we may start with the existing JSON extensions and build on that). The initial milestone aims to have:

- The initial types: unicode string, positive integer up to N, boolean, list, pair, language, monolingual text, multilingual text, type, function, builtin implementation, and error.

- An initial set of constants for the types.

- An initial set of functions: if, head, tail, is_zero, successor, predecessor, abstract, reify, equal_string, nand, constructors, probably a few more functions, each with a built-in implementation.
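The initial built-in functions are small enough to sketch directly. In this sketch, each is a Python stand-in collected in a hypothetical BUILTINS table. Lists are modeled as tuples, the "up to N" bound on integers is not modeled, and abstract, reify, and the constructors are omitted. The if function takes its branches as zero-argument thunks so that only the chosen branch is evaluated. nand is functionally complete, so other boolean functions can be composed from it, as the last two definitions show. These representations are assumptions, not the Wikifunctions encoding.

```python
from typing import Callable, Tuple

def if_(cond: bool, then: Callable[[], object], else_: Callable[[], object]):
    # Branches are thunks: only the selected one is evaluated.
    return then() if cond else else_()

def head(xs: Tuple) -> object:
    if not xs:
        raise ValueError("head of empty list")
    return xs[0]

def tail(xs: Tuple) -> Tuple:
    if not xs:
        raise ValueError("tail of empty list")
    return xs[1:]

def is_zero(n: int) -> bool:
    return n == 0

def successor(n: int) -> int:
    return n + 1

def predecessor(n: int) -> int:
    if n == 0:
        raise ValueError("0 has no predecessor in this sketch")
    return n - 1

def equal_string(a: str, b: str) -> bool:
    return a == b

def nand(a: bool, b: bool) -> bool:
    return not (a and b)

BUILTINS = {"if": if_, "head": head, "tail": tail, "is_zero": is_zero,
            "successor": successor, "predecessor": predecessor,
            "equal_string": equal_string, "nand": nand}

# Derived from nand alone, illustrating its functional completeness.
def not_(a: bool) -> bool:
    return nand(a, a)

def and_(a: bool, b: bool) -> bool:
    return not_(nand(a, b))
```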

This allows us to start developing the frontend UX for function calls and to create a first set of tools and interfaces to display the different types of Z-Objects, as well as generic Z-Objects. It also includes a first evaluator running on Wikimedia servers.

Note that the initial implementations of the views, editing interfaces, and validators are likely to be gradually thrown away after P1.12, once all of them have been internalized. Internalizing code means moving it out of the core and into userland, i.e. reimplementing it in Wikifunctions and calling it from there.

Task P1.3: Set up testing infrastructure

Wikifunctions will require several test systems: one to test the core, one to test the Web UI, and one to test the Wikifunctions content itself. This is an ongoing task and needs to be integrated with version control.

Task P1.4: Launch public test system

We will set up a publicly visible and editable test system that runs the bleeding edge of the code (at least one deploy per working day). We will invite the community to come in and break stuff. We may also use this system for continuous integration tests.

Task P1.5: Server-based evaluator

Whereas the initial development has created a simple evaluator working only with the built-ins, and thus having very predictable behavior, the upcoming P1.6 function composition task will require us to rethink the evaluator. The first evaluator will run on Wikimedia infrastructure, and needs monitoring and throttling abilities, and potentially also the possibility to allocate users different amounts of compute resources depending on whether they are logged-in or not.
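Throttling can be sketched as a step budget that the evaluator charges on every function application and that aborts evaluation once it is exhausted. The budget sizes and the anonymous/logged-in split below are hypothetical values for illustration; the plan only says that users might receive different amounts of compute.

```python
class BudgetExceeded(Exception):
    pass

# Hypothetical per-request budgets, measured in function applications.
BUDGETS = {"anonymous": 1_000, "logged_in": 10_000}

class Evaluator:
    def __init__(self, logged_in: bool):
        self.remaining = BUDGETS["logged_in" if logged_in else "anonymous"]
        self.steps = 0  # exposed for monitoring

    def apply(self, fn, *args):
        """Apply fn to args, charging one step; abort once the budget is used up."""
        if self.remaining <= 0:
            raise BudgetExceeded(f"aborted after {self.steps} steps")
        self.remaining -= 1
        self.steps += 1
        return fn(*args)

ev = Evaluator(logged_in=False)
x = 0
try:
    while True:  # a runaway computation
        x = ev.apply(lambda n: n + 1, x)
except BudgetExceeded:
    pass
print(x, ev.steps)  # 1000 1000
```

Counting steps rather than wall-clock time keeps the limit deterministic, which is convenient for tests. A production evaluator would likely also need time and memory limits.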

Task P1.6: Function composition

We allow the creation of new function interfaces and of a novel type of implementation: composed function calls. For example, this allows implementing

add(x, y)

as

if(is_zero(y), x, add(successor(x), predecessor(y)))

and the system can execute this. It also allows for multiple implementations.
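A small evaluator over call trees is enough to run the composed add. This sketch works under its own assumptions. A composition is a nested tuple (function_name, arg, ...), strings are argument references, ints are literals, and the built-ins are Python stand-ins. It also highlights a design constraint. The evaluator must treat if lazily, evaluating only the selected branch. Otherwise, evaluating the recursive add branch would never terminate.

```python
from typing import Dict, Tuple, Union

Expr = Union[str, int, Tuple]

BUILTINS = {
    "is_zero": lambda n: n == 0,
    "successor": lambda n: n + 1,
    "predecessor": lambda n: n - 1,
}

# add(x, y) := if(is_zero(y), x, add(successor(x), predecessor(y)))
COMPOSITIONS: Dict[str, Tuple[Tuple[str, ...], Expr]] = {
    "add": (("x", "y"),
            ("if", ("is_zero", "y"), "x",
             ("add", ("successor", "x"), ("predecessor", "y")))),
}

def evaluate(expr: Expr, env: Dict[str, int]):
    if isinstance(expr, str):
        return env[expr]  # argument reference
    if isinstance(expr, int):
        return expr       # literal
    name, *args = expr
    if name == "if":
        # Lazy: evaluate the condition, then only the chosen branch.
        cond, then, else_ = args
        return evaluate(then if evaluate(cond, env) else else_, env)
    values = [evaluate(a, env) for a in args]
    if name in BUILTINS:
        return BUILTINS[name](*values)
    params, body = COMPOSITIONS[name]
    return evaluate(body, dict(zip(params, values)))
```

With multiple implementations, the composed add can be checked against another implementation, here Python's own addition, for small inputs.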

Task P1.7: Freeze and thaw entities

A level of protection that allows editing the metadata of an entity (name, description, etc.), but not its actual value. This functionality might also be useful for Wikidata. A more elaborate versioning proposal is suggested here.

Task P1.8: Launch beta Wikifunctions

The beta system runs the next iteration of the code that will go on Wikifunctions with the next deployment cycle, for testing purposes.

Task P1.9: Launch Wikifunctions

Pass security review. Set up a new Wikimedia project. Move some of the wikipages from Meta to Wikifunctions.

Task P1.10: Test type

Introduce a new type for writing tests for functions. This is done by specifying input values and a function that checks the output. Besides introducing the Test type, Functions and Implementations also have to use the Tests, and integrate them into Implementation development and Interface views.
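A Test, as described, pairs input values with a function that checks the output. The sketch below runs every Test against every implementation of a function, producing the kind of pass/fail grid that implementation and interface views could display. ZTest and run_tests are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

@dataclass(frozen=True)
class ZTest:
    inputs: Tuple[Any, ...]
    check: Callable[[Any], bool]  # validates the output instead of naming one value

def run_tests(implementations: Dict[str, Callable],
              tests: List[ZTest]) -> Dict[str, List[bool]]:
    """Run each test against each implementation; an exception counts as failure."""
    results: Dict[str, List[bool]] = {}
    for name, impl in implementations.items():
        row = []
        for test in tests:
            try:
                row.append(bool(test.check(impl(*test.inputs))))
            except Exception:
                row.append(False)
        results[name] = row
    return results
```

Using a checker function instead of a single expected value lets one Test accept any of several correct outputs.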

Task P1.11: Special page to write function calls

We need a new Special page that supports writing Function calls, with basic syntax checking, autocompletion, documentation, etc. A subset of this functionality will also be integrated into the pages of individual Functions and Implementations, to run them with more complex values. This Special page relies on the initial simple API for evaluating Function calls.
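Basic syntax checking means parsing the call into a tree and reporting where parsing fails. The recursive-descent parser below is a hypothetical sketch for the notation this plan uses: name(arg, ...), double-quoted strings, integers, and bare argument names. It reports, for example, a missing closing parenthesis along with its position.

```python
import re
from dataclasses import dataclass
from typing import NoReturn, Tuple, Union

@dataclass(frozen=True)
class Str:
    """A string literal, kept distinct from bare identifiers such as argument names."""
    text: str

Node = Union[Str, str, int, Tuple]
NAME_OR_INT = re.compile(r"[0-9]+|[A-Za-z_][A-Za-z0-9_]*")

class ParseError(ValueError):
    pass

class Parser:
    """Hypothetical recursive-descent parser for calls like f(x, g("a"), 3)."""

    def __init__(self, src: str):
        self.src, self.pos = src, 0

    def error(self, msg: str) -> NoReturn:
        raise ParseError(f"{msg} at position {self.pos}")

    def peek(self) -> str:
        # Skip whitespace and return the next character ("" at end of input).
        while self.pos < len(self.src) and self.src[self.pos].isspace():
            self.pos += 1
        return self.src[self.pos] if self.pos < len(self.src) else ""

    def expect(self, ch: str) -> None:
        if self.peek() != ch:
            self.error(f"expected {ch!r}")
        self.pos += 1

    def parse(self) -> Node:
        node = self.expr()
        if self.peek():
            self.error("unexpected trailing input")
        return node

    def expr(self) -> Node:
        if self.peek() == '"':
            end = self.src.find('"', self.pos + 1)
            if end < 0:
                self.error("unterminated string")
            text, self.pos = self.src[self.pos + 1:end], end + 1
            return Str(text)
        m = NAME_OR_INT.match(self.src, self.pos)
        if not m:
            self.error("expected a value or a function call")
        self.pos = m.end()
        if m.group()[0].isdigit():
            return int(m.group())
        if self.peek() != "(":
            return m.group()  # bare identifier, e.g. an argument name
        self.expect("(")
        args = []
        if self.peek() != ")":
            args.append(self.expr())
            while self.peek() == ",":
                self.pos += 1
                args.append(self.expr())
        self.expect(")")
        return (m.group(), *args)
```

The error position could drive the inline highlighting and autocompletion that the Special page is meant to offer.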

Task P1.12: JavaScript-based implementations

We allow a new type of Implementation: implementations written in JavaScript. This requires translating values to JavaScript and back to Z-Objects. It also requires attention to security, through code analysis and sandboxing, initially supplemented by manual reviews and P1.7 freezing. If the O1 lambda calculus has not yet succeeded or was skipped, we can also achieve self-hosting for Wikifunctions through this task, which would allow us to internalize most of the code.

Task P1.13: Access functions

Add F7, the Lambda magic word, to the Wikimedia projects. It can encode function calls to Wikifunctions and integrate the result into the output of the given Wikimedia project. This will, in fact, create a centralized templating system, as people realize that they can reimplement templates in Wikifunctions and call them from their local wikis. This will be preceded by an analysis of existing solutions such as TemplateData and the Lua calls.

This might lead to requests from the communities to enable the MediaWiki template language and Lua (see P1.15) as programming languages within Wikifunctions. It will likely also lead to requests for an improved Watchlist solution (similar to what Wikidata did), for MediaWiki template-based implementations, and for other features. To handle these requests, an additional person dedicated to answering the community might be helpful. Otherwise, P2 might start up to a quarter later. This person is already listed in the development team above.

Task P1.14: Create new types

We allow the creation of new types. This means we should be able to edit and create new type definitions, and to internalize within Wikifunctions all code that handles values of a type. That is, we will need code for validating values, constructing them, visualizing them in several environments, etc. We should also internalize this code for all the existing types.

Task P1.15: Lua-based implementations

We add Lua as a programming language that is supported for implementations. The importance of Lua is due to its wide use in the MediaWiki projects. Also, if it has not already happened, the value transformation from Wikifunctions to a programming language should be internalized at this point (and also done for the JavaScript implementations, which likely will be using built-ins at this point).

Task P1.16: Non-functional interfaces

Whereas Wikifunctions is built on purely functional implementations, some interfaces are inherently not functional, for example random numbers, the current time, autoincrements, or many REST calls. We will figure out how to integrate these with Wikifunctions.

Task P1.17: REST calls

We will provide built-ins to call REST interfaces on the Web and ingest the results. This would preferably rely on P1.16. Note that calling arbitrary REST interfaces poses security challenges, which need to be taken into account in a proper design.

Task P1.18: Accessing Wikidata and other WMF projects

We will provide Functions to access Wikidata Items and Lexemes, and also other content from Wikimedia projects. This will preferably rely on P1.17. If that has not yet succeeded at this point, a built-in will unblock this capability.

Task P1.19: Monolingual generation

The development of these functions happens entirely on-wiki. This includes tables and records to represent grammatical entities such as nouns, verbs, noun phrases, etc., as well as Functions to work with them. This includes implementing context so that we can generate anaphors as needed. This allows for a concrete generation of natural language, i.e. not an abstract one yet.

Part P2: Abstract Wikipedia

The tasks in this part will start after one year of development. Not all tasks from Part P1 are expected to be finished before Part P2 can begin, as, in fact, Parts P1 and P2 will continue to be developed in parallel. Only some of the tasks in Part P1 are required for P2 to start.

Task P2.1: Constructors and Renderers

Here we introduce the abstract interfaces to the concrete generators developed in P1.19. This leads to the initial development of Constructors and the Render function. After this task, the community should be able to create new Constructors and extend Renderers to support them.

- Constructors are used for the notation of the abstract content. Constructors are language independent, and shouldn't hold conditional logic.

- Renderers should hold the actual conditional logic (which gets applied to the information in the Constructors). Renderers can be per language (but can also be shared across languages).

- The separation between the two is analogous to the separation in other NLG systems such as Grammatical Framework.
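The division of labor can be sketched in Python as follows. The Constructor only records language-independent data. All the conditional logic (article choice in English, grammatical gender in German) lives in per-language Renderers. The tiny hard-coded lexicon stands in for data that would come from Wikidata Lexemes. The constructor name `instance_of` and the data layout are illustrative assumptions, not the planned format.

```python
from typing import Callable, Dict


# Constructor: language-independent, no conditional logic.
def instance_of(subject: str, cls: str) -> dict:
    return {"constructor": "instance_of", "subject": subject, "class": cls}


# Illustrative lexical data keyed by Wikidata item ID (Q64 = Berlin, Q515 = city).
EN = {"Q64": "Berlin", "Q515": "city"}
DE = {"Q64": ("Berlin", None), "Q515": ("Stadt", "f")}  # (lemma, grammatical gender)


# Renderers: one per language, holding the conditional logic.
def render_instance_of_en(c: dict) -> str:
    noun = EN[c["class"]]
    article = "an" if noun[0] in "aeiou" else "a"  # simplified spelling-based rule
    return f"{EN[c['subject']]} is {article} {noun}."


def render_instance_of_de(c: dict) -> str:
    noun, gender = DE[c["class"]]
    article = {"m": "ein", "f": "eine", "n": "ein"}[gender]  # nominative indefinite
    return f"{DE[c['subject']][0]} ist {article} {noun}."


RENDERERS: Dict[str, Dict[str, Callable[[dict], str]]] = {
    "en": {"instance_of": render_instance_of_en},
    "de": {"instance_of": render_instance_of_de},
}


def render(content: dict, lang: str) -> str:
    return RENDERERS[lang][content["constructor"]](content)


content = instance_of("Q64", "Q515")
print(render(content, "en"))  # Berlin is a city.
print(render(content, "de"))  # Berlin ist eine Stadt.
```

Adding a language means adding Renderers and lexical data. The Content itself stays unchanged.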

Task P2.2: Conditional rendering

A Renderer will rarely be able to render the Content fully. We will need to support graceful degradation: if some Content fails to render but other Content still renders, we should show the part that rendered. But sometimes it is narratively necessary to render certain Content only if other Content will definitely be rendered. In this task we will implement support for such conditional rendering, which will allow smaller communities to grow their Wikipedias safely.
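The Python sketch below shows one way to combine graceful degradation with conditional rendering. Each Content item may use a hypothetical `requires` field to name another item that must have rendered first. A renderer that returns `None` stands for a missing or failing Renderer. The data layout, and the assumption that prerequisites appear before the items that depend on them, are illustrative only.

```python
from typing import Callable, Dict, List, Optional


def render_article(items: List[dict],
                   renderer: Callable[[dict], Optional[str]]) -> str:
    rendered: Dict[str, str] = {}  # insertion order keeps the article order
    for item in items:  # assumes each prerequisite appears before the items that need it
        required = item.get("requires")
        if required is not None and required not in rendered:
            continue  # conditional rendering: skip if the prerequisite is missing
        text = renderer(item)
        if text is not None:  # graceful degradation: drop only what failed
            rendered[item["id"]] = text
    return " ".join(rendered.values())


items = [
    {"id": "intro", "text": "Marie Curie was a physicist and chemist."},
    {"id": "prize", "text": None},  # e.g. no Renderer for this Constructor yet
    {"id": "prize-year", "requires": "prize", "text": "She received it in 1903."},
    {"id": "birth", "text": "She was born in Warsaw."},
]
print(render_article(items, lambda item: item["text"]))
# Marie Curie was a physicist and chemist. She was born in Warsaw.
```

The sentence starting with "She received it" is suppressed because it would be incoherent without the sentence it refers to.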

Task P2.3: Abstract Wikipedia

Create a new namespace in Wikidata and allow Content to be created and maintained there. Reuse UI elements and adapt them for Content creation. The UI work will be preceded by Design research work which can start prior to the launch of Part P2. Some important thoughts on this design are here. This task will also decide whether we need new magic words (F5, F6, and F7) or can avoid their introduction.

Task P2.4: Mobile UI

The creation and editing of the Content will be the most frequent task in the creation of a multilingual Wikipedia. Therefore we want to ensure that this task has a pleasant and accessible user experience. We want to dedicate an explicit task to support the creation and maintenance of Content in a mobile interface. The assumption is that we can create an interface that allows for a better experience than editing wikitext.

Task P2.5: Integrate content into the Wikipedias

Enable the Abstract Wikipedia magic word. Then allow for the explicit article creation, and finally the implicit article creation (F1, F2, F3, F4, F5, F6).

Task P2.6: Regular inflection

Wikidata’s Lexemes contain the inflected Forms of a Lexeme. These Forms are often regular. We will create a solution that generates regular inflections through Wikifunctions and will discuss with the community how to integrate that with the existing Lexemes.
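Here is a Python sketch of what such a function could look like. It generates regular English plurals, then flags Lexemes whose stored plural Form deviates from the regular pattern. The rules are deliberately simplified spelling rules. The `forms` dictionary is a hypothetical stand-in for Forms retrieved from Wikidata Lexemes.

```python
import re
from typing import Dict, List


def regular_plural(singular: str) -> str:
    """Simplified regular English plural (spelling rules only)."""
    if re.search(r"(s|x|z|ch|sh)$", singular):
        return singular + "es"        # bus -> buses, church -> churches
    if re.search(r"[^aeiou]y$", singular):
        return singular[:-1] + "ies"  # city -> cities (but day -> days)
    return singular + "s"             # cat -> cats


def irregular_lemmas(forms: Dict[str, str]) -> List[str]:
    """Lemmas whose stored plural differs from the generated regular one."""
    return [lemma for lemma, plural in forms.items() if regular_plural(lemma) != plural]


print(irregular_lemmas({"city": "cities", "box": "boxes", "child": "children"}))  # ['child']
```

A comparison like `irregular_lemmas` could be one input into the discussion with the community about which stored Forms could be generated instead.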

Task P2.7: Basic Renderer for English

We assume that the initial creation of Renderers will be difficult. Given the status of English as a widely used language in the community, we will use English as the first language to demonstrate the creation of a Renderer, and document it well. We will incorporate help from the community. This also includes functionality to show references.

Task P2.8: Basic Renderer for a second language

Based on the community feedback, interests, and expertise of the linguists working on the team, we will select a second large language for which we will create the basic Renderer together with the community. It would be interesting to choose a language where the community on the local Wikipedia has already confirmed their interest to integrate Abstract Wikipedia.

Task P2.9: Renderer for a language from another family

Since it is likely that the language in P2.8 will be an Indo-European language, we will also create a basic Renderer together with the community for a language from a different language family. The choice of this language will be done based on the expertise available to the team and the interests of the community. It would be interesting to choose a language where the community on the local Wikipedia has already confirmed their interest to integrate Abstract Wikipedia.

Task P2.10: Renderer for an underserved language

Since it is likely that the languages in P2.8 and P2.9 will be languages which are already well-served with active and large Wikipedia communities, we will also select an underserved language, a language that currently has a large number of potential readers but only a small community and little content. The choice of this language will be done based on the expertise available to the team and the interests of the community. Here it is crucial to select a language where the community on the local Wikipedia has already committed their support to integrate Abstract Wikipedia.

Task P2.11: Abstract Wikidata Descriptions

Wikidata Descriptions seem particularly amenable to being created through Wikifunctions, as they are often just short noun phrases. In this task we support the storage and maintenance of Abstract Descriptions in Wikidata, and their generation for Wikidata. We also need to ensure that the result leads to unique combinations of labels and descriptions.

See Abstract Wikipedia/Updates/2021-07-29 for further details and discussion.
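The uniqueness requirement could be checked by grouping items by their (label, generated description) pair in one language. In this Python sketch, the item identifiers and descriptions are made-up examples, and the input format is an assumption.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def find_collisions(items: Dict[str, Tuple[str, str]]) -> List[List[str]]:
    """items maps an item ID to its (label, generated description) in one language;
    returns the groups of IDs that share an identical pair."""
    groups: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for item_id, pair in items.items():
        groups[pair].append(item_id)
    return [ids for ids in groups.values() if len(ids) > 1]


items = {
    "item-a": ("Springfield", "city in the United States"),
    "item-b": ("Springfield", "city in the United States"),
    "item-c": ("Springfield", "city in Illinois, United States"),
}
print(find_collisions(items))  # [['item-a', 'item-b']]
```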

Task P2.12: Abstract Glosses

Wikidata Lexemes have Senses, which are captured by Glosses. Glosses are written per language, which means that they are usually only available in a few languages. To support a truly multilingual dictionary, we suggest creating Abstract Glosses. This sounds like it should be much easier than creating full-fledged Wikipedia articles. However, we think it might be a much harder task due to the nature of Glosses.

Task P2.13: Support more natural languages

Support other language communities in the creation of Renderers, with a focus on underserved languages.

Task P2.14: Template-generated content

Some Wikipedias currently contain a lot of template-generated content. Identify this content and discuss with the local Wikipedias whether they want to replace it with a solution based on Wikifunctions. In such a solution, the template lives in Wikifunctions and the content is given in the local Wikipedia or in Abstract Wikipedia. This will lead to more sustainable and maintainable solutions that do not depend on a single contributor. Note that this does not have to be multilingual, and might be much simpler than going through full abstraction.

Task P2.15: Casual comments

Allow casual contributors to comment on the rendered text. Create mechanisms to capture those comments and funnel them into a triage process that directs each one to either the Content or the Renderers. It is important not to lose comments by casual contributors. Ideally, casual contributors could explicitly overwrite a part of the rendered output, and this would be treated as a change request. More involved contributors would then translate the casual contributor's intent into the corresponding changes in the system.

Task P2.16: Quick article name generation

The general public mostly reaches Wikipedia by typing the names of the things they are looking for, in their language, into common search engines. This means that Wikidata Items will need labels in a language before they can be used for implicit article creation. This will probably require translating millions of Wikidata labels. Sometimes this can be done by bots or AI, but that is neither fully reliable nor scalable, so it has to involve humans.

The current tools for massive crowd-sourced translation of Wikidata labels are not up to the task. There are two main ways to do it. The first is editing labels in Wikidata itself, which is fine for adding perhaps a dozen labels but quickly becomes tiring. The second is using Tabernacle, which appears to be more oriented toward massive batch translation but is too complicated for most people to actually use.

This task is to develop a massive and integrated label-translation tool with an easy, modern frontend, that can be used by lots of people.

Non-core tasks

There is a whole set of further optional tasks. Ideally these would be picked up by external communities and developed as Open Source outside the initial development team, but some of these might need to be kickstarted and even fully developed by the core team.

Task O1: Lambda calculus

It is possible to fully self-host Wikifunctions by implementing a lambda calculus in Wikifunctions, without relying on built-ins or on implementations in other programming languages (this is where the name proposal comes from). This could allow evaluation without any programming-language support, and thus make it easier to bootstrap the development of new evaluators.

Task O2: CLI in a terminal

Many developers enjoy using a command line interface to access a system such as Wikifunctions. We should provide one, with the usual features such as autocompletion, history, shell integration, etc.

Task O3: UIs for creating, debugging and tracing functions

The goal of Wikifunctions is to allow quick understanding and development of the functions it contains. Given the functional approach, it should be possible to create a user experience that allows for partial evaluation, unfolding, debugging, and tracing of a function call.

Task O4: Improve evaluator efficiency

There are many ways to improve the efficiency of evaluators, and thus reduce resource usage, in particular caching and the proper selection of an evaluation strategy. We should spend some time on this for evaluators in general and write down the results, so that different evaluators can use this knowledge. We should also make sure that the evaluators maintained by the core team follow most of the best practices.
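Caching is particularly attractive because functions in Wikifunctions are meant to be pure (P1.16 covers the exceptions). A result can therefore be reused whenever the same function is called with the same arguments. Below is a minimal Python sketch with a hypothetical `CachingEvaluator` class and function registry. It requires hashable arguments and ignores cache invalidation after an implementation is edited.

```python
from typing import Any, Callable, Dict, Tuple


class CachingEvaluator:
    """Memoizes results by (function ID, arguments). Safe only for pure
    functions; arguments must be hashable; edits to implementations are ignored."""

    def __init__(self, functions: Dict[str, Callable[..., Any]]):
        self.functions = functions
        self.cache: Dict[Tuple[str, Tuple[Any, ...]], Any] = {}
        self.hits = 0

    def call(self, function_id: str, *args: Any) -> Any:
        key = (function_id, args)
        if key in self.cache:
            self.hits += 1
            return self.cache[key]
        # Implementations receive the evaluator so their nested calls are cached too.
        result = self.functions[function_id](self, *args)
        self.cache[key] = result
        return result


def fib(ev: CachingEvaluator, n: int) -> int:
    return n if n < 2 else ev.call("fib", n - 1) + ev.call("fib", n - 2)


evaluator = CachingEvaluator({"fib": fib})
print(evaluator.call("fib", 30))  # 832040
```

Without the cache, this naive recursive definition would make more than a million calls for `n = 30`. With it, each value is computed once.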

Task O5: Web of Trust for implementations

In order to relax the conditions for implementations in programming languages, we could introduce a Web of Trust based solution, that allows contributors to review existing implementations and mark their approval explicitly, and also to mark other contributors as trustworthy. Then these approvals could be taken into account when choosing or preparing an evaluation strategy.
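Here is one possible reading of this idea, sketched in Python. Trust starts from a seed set of trusted contributors and propagates along explicit endorsements for a bounded number of hops. An implementation counts as approved once enough trusted contributors have reviewed it. The seed set, the hop limit, and the approval threshold are all assumptions of this sketch, not part of the plan.

```python
from collections import deque
from typing import Dict, Set


def trusted_contributors(seeds: Set[str], vouches: Dict[str, Set[str]],
                         max_hops: int) -> Set[str]:
    """Breadth-first trust propagation: seeds are trusted, and so is anyone
    endorsed by a trusted contributor within max_hops endorsements."""
    trusted = set(seeds)
    queue = deque((person, 0) for person in seeds)
    while queue:
        person, hops = queue.popleft()
        if hops == max_hops:
            continue  # do not propagate trust further from here
        for endorsed in vouches.get(person, set()):
            if endorsed not in trusted:
                trusted.add(endorsed)
                queue.append((endorsed, hops + 1))
    return trusted


def is_approved(reviewers: Set[str], trusted: Set[str], needed: int = 1) -> bool:
    """An implementation is approved once enough trusted contributors reviewed it."""
    return len(reviewers & trusted) >= needed


vouches = {"alice": {"bob"}, "bob": {"carol"}, "carol": {"dave"}}
trusted = trusted_contributors({"alice"}, vouches, max_hops=2)
print(sorted(trusted))                             # ['alice', 'bob', 'carol']
print(is_approved({"carol", "mallory"}, trusted))  # True
```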

Task O6: Python-based implementations

Python is a widely used programming language, particularly for learners and in some domains such as machine learning. Supporting Python can open a rich ecosystem to Wikifunctions.

Task O7: Implementations in other languages

We will reach out to other programming-language communities to integrate their languages into Wikifunctions, and support them in doing so. Candidates for implementations are WebAssembly, PHP, Rust, C/C++, R, Swift, Go, and others. Which ones are added depends on the interest of the core team and the external communities in creating and supporting these interfaces.

Task O8: Web-based REPL

A web-based REPL can bring the advantages of the O2 command line interface to the Web, without requiring users to install a CLI in their local environment, which is sometimes not possible.

Task O9: Extend API with Parser and Linearizer

There might be different parsers and linearizers using Wikifunctions. The Wikifunctions API would be easier to use if callers could select these explicitly instead of wrapping them manually. This would allow Wikifunctions to be used with different surface dialects.

Task O10: Support talk pages

In order to support discussions on talk pages of Wikifunctions, develop and integrate a mechanism that initially allows simple discussions and gradually grows in complexity based on the needs of the communities.

Task O11: Legal text creation

An interesting application of Wikifunctions is for the creation of legal text in a modular manner and with different levels (legalese vs human-readable), similar to the different levels of description for the different Creative Commons licenses.

Task O12: Health related text creation

An interesting application of Wikifunctions is for the creation of health-related texts for different reading levels. This should be driven by WikiProject Medicine and their successful work, which could reach many more people through cooperation with Wikifunctions.

Task O13: NPM library

We will create a Wikifunctions library for NPM that allows simple use of Functions from Wikifunctions in a JavaScript program. The same syntax should be used to allow JavaScript implementations in Wikifunctions to access other Wikifunctions functions. Note that this can be done either by calling a Wikifunctions evaluator or by compiling the required functions into the given code base.

Task O14: Python library

We will create a Python library that allows simple use of Functions from Wikifunctions in a Python script. The same syntax should be used to allow Python implementations in Wikifunctions to access other Wikifunctions functions. Note that this can be done either by calling a Wikifunctions evaluator or by compiling the required functions into the given code base.

Task O15: Libraries for other programming languages

We will reach out to the communities of several programming languages to help us create libraries that allow simple calls of Wikifunctions functions from programs in their language. Note that this can be done either by calling a Wikifunctions evaluator or by compiling the required functions into the given code base.

Task O16: Browser-based evaluator

One of the advantages of Wikifunctions is that the actual evaluation of a function call can happen in different evaluators. The main evaluator for Abstract Wikipedia will be server-based and run by the Wikimedia Foundation. However, to reduce the compute load, we should also provide evaluators running in the user's client (probably in a Worker thread).

Task O17: Jupyter- and/or PAWS-based evaluator

One interesting option is an evaluator running in a Jupyter or PAWS notebook. It would offer the usual advantages of such notebooks while also integrating the benefits of Wikifunctions.

Task O18: App-based evaluator

One evaluator should be running natively on Android or iOS devices, and thus allow the user to use the considerable computing power in their hand.

Task O19: P2P-based evaluator

Many of the evaluators could link together and allow each other to use dormant compute resources in their network. This may or may not require shields between the participating nodes to ensure the privacy of individual computations.

Task O20: Cloud-based evaluator

One obvious source of compute resources is a cloud provider. It would be possible to simply run the server-based evaluator on cloud-based infrastructure. Even so, cloud providers would likely benefit from offering a thinner interface to a more custom-tailored evaluator.

Task O21: Stream type

Add support for a type for streaming data, both as input and as output. An example of a stream is the recent changes stream on a Wikimedia wiki.

Task O22: Binary type

Add support for binary files, both for input and output.

Task O23: Integration with Commons media files

Allow direct access to files on Commons. Enable workflows with Commons that require less deployment machinery than currently needed. Requires O22.

Task O24: Integrate with Machine Learning

Develop a few example integrations with Machine Learning solutions, e.g. for NLP tasks or for work on images or video, for instance using classifiers. This requires deciding how and where to store models, possibly also how to train them, and how to access them.

Task O25: Integrate into IDEs

Reach out to communities developing IDEs and support them in integrating with Wikifunctions, using type hints, documentation, completion, and many of the other convenient and crucial features of modern IDEs.

Task O26: Create simple apps or Websites

Develop a system to allow the easy creation and deployment of apps or Websites relying on Wikifunctions.

Task O27: Display open tasks for contributors

Abstract Wikipedia will require one Renderer for every Constructor in every language. It would help if contributors could get some guidance on which Renderer to implement next, as this is often not obvious. Just counting how often a Constructor appears could be a first approximation. That approximation might be off, however, if some Constructors are used more often in the lead, in parts of the text that block other text from appearing, or in articles that are read more than others. In this task we create a dashboard that lets users choose a language and then provides them with a list of unrendered Constructors, ranked by the impact an implementation would have. Users might also choose a domain, such as sports, geography, or history, and a filter for the expected complexity of the Renderer.

We could also allow contributors to sign up for a regular message telling them how much impact (in terms of views and created content) they had, based on the same framework needed for the dashboard.

This is comparable to seeing the status of translations of different projects on TranslateWiki.Net (for a selectable language), or the views on topics, organizations or authors in Scholia. For each project, it shows what % of the strings in it were translated and what % need update, and a volunteer translator can choose: get something from 98% to 100%, get something from 40% to 60%, get something from 0% to 10%, etc.
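A first cut at the ranking could weight each occurrence of an unrendered Constructor by the readership of its article. It could also boost occurrences in the lead and Constructors that block other text. The Python sketch below uses made-up Constructor names, view counts, and weights. The scoring formula is an assumption meant only to show how the factors listed above could be combined.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class Occurrence:
    constructor: str
    article_views: int      # readership of the article containing it
    in_lead: bool           # whether it appears in the lead section
    blocked_sentences: int  # other text that cannot appear until it renders


def rank_missing(occurrences: List[Occurrence], rendered: Set[str],
                 lead_boost: float = 2.0) -> List[Tuple[str, float]]:
    scores: Dict[str, float] = {}
    for occ in occurrences:
        if occ.constructor in rendered:
            continue  # a Renderer already exists in this language
        weight = (lead_boost if occ.in_lead else 1.0) * (1 + occ.blocked_sentences)
        scores[occ.constructor] = scores.get(occ.constructor, 0.0) + occ.article_views * weight
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


occurrences = [
    Occurrence("Birth", 1000, True, 0),
    Occurrence("Birth", 500, False, 0),
    Occurrence("Population", 3000, False, 0),
    Occurrence("Spouse", 100, False, 0),
    Occurrence("Occupation", 50, True, 1),
]
print(rank_missing(occurrences, rendered={"Spouse"}))
# [('Population', 3000.0), ('Birth', 2500.0), ('Occupation', 200.0)]
```

The same scores could feed the impact messages mentioned above, for instance by summing the views of articles whose text a contributor's Renderer unblocked.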

Task O28: Active view

Whereas the default view of rendered content would look much like static text, there should also be a more active view that invites contribution, based on existing Content that failed to render due to missing Renderers. In the simplest case, this could mean creating a Lexeme in Wikidata or connecting the Content to the right Lexeme. In more complex cases, it could mean writing a Renderer, or offering example sentences as text, perhaps using the casual contribution path described in P2.15. This would provide an interesting funnel for turning more readers into contributors.

There are product and design decisions to make about how and where the active view should be used, and whether it should be the default view or only be switched on after an invitation, etc. There could also be a mode where contributors go from article to article and fill in missing pieces, similar to the more abstract approach in O27.

It would be very useful to ensure that the active view, and the contribution path it leads to, also work on mobile devices.

Task O29: Implementation compilation

The function composition used for implementations should make it possible to compile rather high-level functions into highly performant code in many different target programming languages, particularly WebAssembly and JavaScript. This should speed up evaluation by several orders of magnitude.

Task O30: Integrate with Code examples on Wikipedia, Wikibooks, etc.

Allow Wikipedia, Wikibooks, and the other projects to integrate their code examples directly with the wiki of functions, so that these can be run live.

Development of Abstract Wikipedia will proceed in two major parts, each consisting of a large number of tasks. Part P1 is about developing the wiki of functions, and Part P2 is about abstract content and natural language generation. On this page, we further break down the tasks of Part P1 into phases that each cover some of the work under a given task. The below contains links to Phabricator, where tasks and phases are broken down even further.

This wiki page might be stale. The canonical place for information about the tasks is Phabricator. Find our current state on Phabricator.

We expect to take about ten phases before launching the wiki of functions.

All the below phases cover work under Task P1.2: Initial development, unless marked otherwise.

Part P1: Wiki of functions

Phase α (alpha): store, display and edit header (done 2020-08-25)

- Set up replicable development environment (Phabricator T258893).

  - Done: Start extension (Phabricator T258893).

  - Done: Config works, upload bootstrap content.

  - Done: Reuse existing JSON ContentHandler (Phabricator T258893).

  - Done: Allow for entering JSON objects through the raw editing interface (Phabricator T258893).

- Done: Extend and hardcode the checker for JSON objects to check for ZObject well-formedness. Nothing that is not well-formed will be further handled or stored. Well-formedness should probably be checked in both the PHP and the JS code (it should be easy to write anyway).

  - Done: in PHP (Phabricator T258894).

  - Well-formedness: key syntax, allowed keys, values are strings or proto-objects or lists of values (Phabricator T258894).

- Done: Every stored top-level ZObject must be a Z2/Persistent object (Phabricator T258897).

- Done: Create Z1/Object, offering one key, Z1K1/type.

- Done: Extend hardcoded validator to check Z1K1/type.

- Done: Create Z2/Persistent object (Phabricator T258897).

- Done: Z2/Persistent object has the keys Z2K1/ID, Z2K2/value, and Z2K3/Proto-Label. The proto-label is, counterfactually, just a single string with no language information (Phabricator T258897).

- Done: Extend hardcoded validator for Z2/Persistent object so far (Phabricator T258897).

- Done: Provide hardcoded display for Z2/Persistent object, that is, the header; this is a pretty big task (Phabricator T258898).

- Done: Provide generic view for the Z2K2/value object (Phabricator T258898).

- Done: Turn Z2K3/proto-label into the proper Z2K3/label for multilingual text.

- Done: Extend viewing for Z2K3/label with multilingual text.

Phase completion condition: As a user [of a site with the MediaWiki extension installed], I can create and store a string as a new ZObject, e.g. "Hello world!".
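The checks listed for this phase can be sketched in Python as follows. Two rules are our reading of the list above: the key pattern (an optional Z-number prefix followed by a K-number, as in Z2K1), and the requirement that every object carries a Z1K1. The ID Z400 in the example is hypothetical. The single-string label reflects the proto-label stage described above.

```python
import re
from typing import Any, List

# Assumed key syntax: optional "Z<number>" prefix, then "K<number>" (e.g. Z2K1, K1).
KEY_PATTERN = re.compile(r"^(Z[1-9][0-9]*)?K[1-9][0-9]*$")


def well_formedness_errors(value: Any, path: str = "") -> List[str]:
    """Values must be strings, lists of values, or objects with valid keys and a Z1K1."""
    where = path or "<root>"
    if isinstance(value, str):
        return []
    if isinstance(value, list):
        errors: List[str] = []
        for i, item in enumerate(value):
            errors += well_formedness_errors(item, f"{path}[{i}]")
        return errors
    if isinstance(value, dict):
        errors = [] if "Z1K1" in value else [f"{where}: missing Z1K1/type"]
        for key, item in value.items():
            if not KEY_PATTERN.match(key):
                errors.append(f"{path}/{key}: invalid key syntax")
            errors += well_formedness_errors(item, f"{path}/{key}")
        return errors
    return [f"{where}: value must be a string, list or object"]


def persistent_object_errors(obj: Any) -> List[str]:
    """A stored top-level ZObject must be a well-formed Z2/Persistent object."""
    errors = well_formedness_errors(obj)
    if not isinstance(obj, dict) or obj.get("Z1K1") != "Z2":
        return errors + ["top level must be a Z2/Persistent object"]
    return errors + [f"missing {k}" for k in ("Z2K1", "Z2K2", "Z2K3") if k not in obj]


hello = {"Z1K1": "Z2", "Z2K1": "Z400", "Z2K2": "Hello world!", "Z2K3": "Greeting"}
print(persistent_object_errors(hello))  # []
```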

Phase β (beta): create types and instances (done 2021-02-04)

- Done: Hardcoded validators for Z4/proto-types and Z3/proto-keys (Phabricator T258900).

  - A Z4 has a Z4K2/keys with a JSON List of Z3s.

  - A proto-key has a Z3K1/ID, a Z3K2/type, and a Z3K3/label (mirror the development of label for Z2K3?).

- Done: Create Z4/Type and Z3/Key (Task P1.14).

- Done: Search for ZObjects by label (Phabricator T260750).

- Done for this phase: Use Z4 type data and key declarations for validating objects (Phabricator T260861).

- Done: Use Z4 type data and key declarations for generic view of objects (Phabricator T258901).

- Done: Use Z4 type data and key declarations for editing and creation of objects (Phabricator T258903 and T258904).

- Done: Provide hardcoded display and edit interface for Z12 type (Phabricator T258900).

Phase completion condition: As a user, I can create and store an object implementing any on-wiki defined type, e.g. the positive integer type.
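Using Z4 type data for validation can be sketched in Python as follows. The sketch looks up the type named in Z1K1, then checks that the object has exactly the declared keys and that each value has the declared key type. The type ID Z10000 and its single key are hypothetical, and only the String type (Z6) is checked. The key layout follows the proto-key description above (Z3K1/ID, Z3K2/type, Z3K3/label).

```python
from typing import Any, Callable, Dict, List

# Hypothetical on-wiki type in the proto-format above: a Z4 whose Z4K2/keys
# is a list of Z3 proto-keys with Z3K1/ID, Z3K2/type and Z3K3/label.
TYPES: Dict[str, dict] = {
    "Z10000": {
        "Z1K1": "Z4",
        "Z4K2": [{"Z1K1": "Z3", "Z3K1": "Z10000K1", "Z3K2": "Z6", "Z3K3": "decimal digits"}],
    },
}

# Checks for built-in value types; only Z6/String is covered in this sketch.
VALUE_CHECKS: Dict[str, Callable[[Any], bool]] = {"Z6": lambda v: isinstance(v, str)}


def type_errors(obj: Dict[str, Any]) -> List[str]:
    type_def = TYPES.get(obj.get("Z1K1", ""))
    if type_def is None:
        return [f"unknown type {obj.get('Z1K1')!r}"]
    declared = {key["Z3K1"]: key["Z3K2"] for key in type_def["Z4K2"]}
    errors = [f"undeclared key {k}" for k in obj if k != "Z1K1" and k not in declared]
    for key_id, key_type in declared.items():
        if key_id not in obj:
            errors.append(f"missing key {key_id}")
        elif key_type in VALUE_CHECKS and not VALUE_CHECKS[key_type](obj[key_id]):
            errors.append(f"{key_id} should be of type {key_type}")
    return errors


print(type_errors({"Z1K1": "Z10000", "Z10000K1": "42"}))  # []
```

A real positive-integer type would additionally need its own validator for the digit string. Per-type validators of that kind are what later phases make user-definable.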

Phase γ (gamma): functions, implementations, errors (done 2021-04-02)

- Done: Introduce a simple error object (Phabricator T261464).

- Done: Introduce a simple function (Phabricator T258957).

- Done: Introduce a simple implementation, for now only built-ins (Phabricator T258958).

- Done: Create a few functions and built-ins (Phabricator T261474).

- Done: Introduce a simple function call type (Phabricator T261467).

- Done: Tester type, Task P1.10 (Phabricator T261465).

Phase completion condition: As a user, I can store a function call, a function, and a tester (only the objects, no actual evaluation yet), e.g. if(true, false, true) (read "if true then false else true", i.e. negation)

Phase δ (delta): built-ins (done 2021-05-11)

- Done: Evaluation system for built-ins (Phabricator T260321).

- Done: Enable web users to call evaluation through an API module, Task P1.5 (Phabricator T261475).

- Done: Special page for calling evaluation, Task P1.11 (Phabricator T261471).

Phase completion condition: As a user, I can use a special page to evaluate a built-in function with supplied inputs, e.g. to check whether the empty list is empty.
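Below is a Python sketch of evaluating built-in function calls. It covers the negation example if(true, false, true) from phase γ and the empty-list check from this phase. Calls are shaped as Z7/Function call objects whose Z7K1 names the function, and failures return a Z5/Error. The `args` key, the `message` key, and the function names `if` and `is_empty` are hypothetical simplifications rather than the actual ZObject keys.

```python
from typing import Any, Callable, Dict

# Hypothetical registry of built-in implementations, keyed by a readable name.
BUILTINS: Dict[str, Callable[..., Any]] = {
    "if": lambda condition, then, otherwise: then if condition else otherwise,
    "is_empty": lambda items: len(items) == 0,
}


def evaluate(obj: Any) -> Any:
    """Evaluate objects shaped like {"Z1K1": "Z7", "Z7K1": name, "args": [...]};
    every other value is treated as a literal and returned unchanged."""
    if not (isinstance(obj, dict) and obj.get("Z1K1") == "Z7"):
        return obj
    implementation = BUILTINS.get(obj["Z7K1"])
    if implementation is None:
        return {"Z1K1": "Z5", "message": f"no built-in named {obj['Z7K1']!r}"}
    arguments = [evaluate(arg) for arg in obj["args"]]  # arguments evaluated eagerly
    return implementation(*arguments)


negation = {"Z1K1": "Z7", "Z7K1": "if", "args": [True, False, True]}
print(evaluate(negation))                                          # False
print(evaluate({"Z1K1": "Z7", "Z7K1": "is_empty", "args": [[]]}))  # True
```

Eager argument evaluation is fine for built-ins applied to literals. Compositions would need a lazy `if`, as discussed under Task P1.6.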

Phase ε (epsilon): native function calls (done 2021-06-30)

- Done: JavaScript implementations, Task P1.12 (Phabricator T275944).

- Done: Python implementations, Task O6 (Phabricator T273517).

- Done: Allow forms to be included for evaluation (Phabricator T261472).

Phase completion condition: As a user, I can use a special page to evaluate a user-written function in one of the supported languages, e.g. call a user-written function in Python to add up two numbers.

Phase ζ (zeta): composition (done 2021-08-27)

- Done: Allow for composition implementations, Task P1.6 (Phabricator T261468).

Phase completion condition:

- As a user, I can implement a function using composition of other functions, rather than writing it myself, e.g. negate(Boolean → Boolean). Done.

- (Stretch condition) As a user, I can see the results of testers on the relevant function implementation's page. [This might need to be moved to a later phase, as not all requirements may be met at this point. Must be done by phase ι.] Done.

Phase η (eta): generic types (done 2022-04-08)

- Done: Allow for generic types, particularly for Z10/List and Z8/Function, and replace Z10/List and Z8/Function (Phabricator T275941).

- Done: Errors can be processed like ZObjects.

- Done: User-defined types work with validators.

Phase completion condition:

- Being able to implement curry as a composition on the wiki, but without requiring strict static analysis. Done.

- Making it possible to create the following three 'user-defined' types on the wiki: positive integer, sign, and integer. Done.

- Being able to make a generic wrapper type through composition on the wiki. Done.
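The following minimal sketch illustrates the first two conditions in plain Python.

Here, `curry` takes a two-argument function and a first argument, and returns a one-argument function. Strictly speaking, that is partial application; reading the plan's curry this way is an assumption.

The three types are modeled as follows, which is also an assumption:

- Positive integer is a validated wrapper around a whole number.
- Sign has negative, neutral, and positive values.
- Integer is a sign plus an optional positive integer, with zero as the neutral sign and no absolute value.

The `add` function shortcuts through Python's built-in integers purely for brevity.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Callable, Optional


def curry(function: Callable[[Any, Any], Any], first: Any) -> Callable[[Any], Any]:
    """Return a one-argument function g with g(second) == function(first, second)."""
    return lambda second: function(first, second)


class Sign(Enum):
    NEGATIVE = -1
    NEUTRAL = 0
    POSITIVE = 1


@dataclass(frozen=True)
class PositiveInteger:
    value: int

    def __post_init__(self) -> None:
        # Validator: only whole numbers greater than zero are allowed.
        if isinstance(self.value, bool) or not isinstance(self.value, int) or self.value < 1:
            raise ValueError(f"not a positive integer: {self.value!r}")


@dataclass(frozen=True)
class Integer:
    sign: Sign
    absolute_value: Optional[PositiveInteger]  # None only for zero

    def __post_init__(self) -> None:
        # Validator: zero is exactly the value with a neutral sign and no absolute value.
        if (self.sign is Sign.NEUTRAL) != (self.absolute_value is None):
            raise ValueError("a neutral sign must go with a missing absolute value")

    def to_int(self) -> int:
        if self.absolute_value is None:
            return 0
        return self.sign.value * self.absolute_value.value


def from_int(n: int) -> Integer:
    if n == 0:
        return Integer(Sign.NEUTRAL, None)
    return Integer(Sign.POSITIVE if n > 0 else Sign.NEGATIVE, PositiveInteger(abs(n)))


def add(a: Integer, b: Integer) -> Integer:
    return from_int(a.to_int() + b.to_int())


add_five = curry(add, from_int(5))
print(add_five(from_int(-7)).to_int())  # -2
```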

See also the newsletter posted about this phase.

Phase θ (theta): thawing and freezing (done 2023-06-19)

- Done: Freezing and thawing content (Task P1.7; Phabricator T275942).

- Done: Pass security review (Task P1.9; Phabricator T274682, …).

- Done: Launch public test system (Task P1.4; Phabricator T261469).
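Freezing can be modeled as a per-object flag that is checked whenever an edit is saved. This phase's completion conditions describe the rules:

- Sysops freeze and unfreeze user-written objects.
- System-supplied objects stay frozen permanently.
- A frozen object still accepts label changes, but not implementation changes.

The following minimal sketch encodes those rules. The `ZObjectPage` shape, the field names, and checking membership of a "sysop" group are hypothetical, and are not MediaWiki's actual protection API.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass
class ZObjectPage:
    labels: Dict[str, str]
    implementation: str
    frozen: bool = False
    system_supplied: bool = False

    @property
    def is_frozen(self) -> bool:
        # System-supplied objects count as permanently frozen.
        return self.frozen or self.system_supplied


def set_frozen(page: ZObjectPage, frozen: bool, user_groups: Set[str]) -> None:
    """Freeze or thaw a page; only members of the "sysop" group may do so."""
    if "sysop" not in user_groups:
        raise PermissionError("only sysops may freeze or thaw objects")
    if page.system_supplied and not frozen:
        raise PermissionError("system-supplied objects cannot be thawed")
    page.frozen = frozen


def can_edit(page: ZObjectPage, changed_fields: Set[str]) -> bool:
    """Labels stay editable; the implementation is editable only while unfrozen."""
    allowed = {"labels"} if page.is_frozen else {"labels", "implementation"}
    return changed_fields <= allowed


page = ZObjectPage(labels={"en": "negate"}, implementation="hypothetical code")
set_frozen(page, True, {"sysop"})
print(can_edit(page, {"labels"}))                    # True
print(can_edit(page, {"labels", "implementation"}))  # False
```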

Phase completion condition:

- As a sysop, I can freeze and unfreeze any user-written object (akin to, or maybe the same as, MediaWiki's protection system); all system-supplied objects are permanently frozen.

- As a user editing a frozen page, I can change the label, but not the implementation, whereas on an unfrozen page both are possible.

- ZObjects are stored using the new canonical form for typed lists, and all parts still work.

- Viewing and editing of functions is implemented and tested successfully.

- When several implementations are available, the "best" is chosen. (The fitness criterion may change later.)

- We measure the clock time and memory use of each function run, and display them with the execution result and in the implementation/test table.

- Edits to system-defined ZObjects are restricted to users with the correct rights. Understandable diffs are emitted. Results are cached.

- Text with fallback, references, strings, and lists are implemented and tested successfully.

- A shared understanding with the community of how and why the team will contribute to Wikifunctions is documented.

- Designs for viewing and editing multilingual documentation on mobile and desktop are approved. UX is instrumented and data is collected.
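Choosing the "best" implementation and recording its clock time and memory use can be combined. Each implementation runs against the function's testers while wall-clock time (`time.perf_counter`) and peak traced allocation (`tracemalloc`) are recorded.

This is a minimal sketch under two stated assumptions:

- The fitness rule here is hypothetical: the fastest among implementations that pass every tester.
- Testers are modeled as (arguments, expected result) pairs.

```python
import time
import tracemalloc
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Sequence, Tuple

Tester = Tuple[Tuple[Any, ...], Any]  # (arguments, expected result)


@dataclass
class RunMetrics:
    implementation: str
    passed: int
    failed: int
    seconds: float
    peak_bytes: int


def measure(name: str, function: Callable[..., Any], testers: Sequence[Tester]) -> RunMetrics:
    """Run all testers against one implementation, recording wall-clock time and peak memory."""
    passed = failed = 0
    tracemalloc.start()
    start = time.perf_counter()
    for arguments, expected in testers:
        try:
            ok = function(*arguments) == expected
        except Exception:
            ok = False  # a crash counts as a failed tester
        if ok:
            passed += 1
        else:
            failed += 1
    seconds = time.perf_counter() - start
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return RunMetrics(name, passed, failed, seconds, peak_bytes)


def choose_best(
    implementations: Dict[str, Callable[..., Any]], testers: Sequence[Tester]
) -> Tuple[Optional[str], List[RunMetrics]]:
    """Hypothetical fitness rule: among implementations passing every tester, pick the fastest."""
    metrics = [measure(name, fn, testers) for name, fn in implementations.items()]
    passing = [m for m in metrics if m.failed == 0]
    best = min(passing, key=lambda m: m.seconds).implementation if passing else None
    return best, metrics


testers: List[Tester] = [((2, 3), 5), ((0, 0), 0), ((-1, 1), 0)]
implementations = {
    "builtin": lambda a, b: a + b,
    "buggy": lambda a, b: a - b,  # passes only some testers
}
best, metrics = choose_best(implementations, testers)
print(best)  # builtin
for m in metrics:
    print(m.implementation, m.passed, m.failed, f"{m.seconds:.6f}s", f"{m.peak_bytes} B")
```

The printed rows correspond to one line of an implementation/test table.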

Phase ι (iota): documentation of objects

- This is a preliminary assignment; the documentation tasks have been moved here.

- Provide editing for the header in addition to full raw editing (a fairly big task); in practice this covers only the labels.

- Extend editing for Z2K3/label with multilingual text.

- Extend the header with Z2K4/documentation (Phabricator T260954 and T260956).

- Extend editing to deal with Z2K4/documentation (Phabricator T260955).

Phase completion condition: As a user, I can document a ZObject in any and all supported languages, using wikitext.
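Once labels such as Z2K3/label and documentation are multilingual, each reader needs the text shown in the best available language. The following is a minimal sketch of text with fallback. It tries the reader's language first, then that language's fallback chain, and finally English.

The chains below are hypothetical examples. MediaWiki maintains its own per-language fallback data.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical fallback chains; MediaWiki maintains its own per-language data.
FALLBACK_CHAINS: Dict[str, List[str]] = {
    "de-at": ["de"],
    "arz": ["ar"],
}


def resolve(texts: Dict[str, str], language: str, final: str = "en") -> Optional[Tuple[str, str]]:
    """Return (language code, text) for the first available language on the fallback chain."""
    for candidate in [language, *FALLBACK_CHAINS.get(language, []), final]:
        if candidate in texts:
            return candidate, texts[candidate]
    return None  # no text in any language on the chain


label = {"en": "negate", "de": "Negation"}  # e.g. a multilingual Z2K3/label
print(resolve(label, "de-at"))  # ('de', 'Negation')
print(resolve(label, "fr"))     # ('en', 'negate')
print(resolve({}, "fr"))        # None
```

Returning the language code along with the text lets the interface mark text that is shown in a fallback language.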

Phase κ (kappa): cleanup

- Tightening-up and clean-up tasks, to close all remaining pre-launch tasks.

Phase completion condition: As the Abstract Wikipedia Team, we feel ready for launch, including sign-off from all relevant colleagues.

Phase λ (lambda): launch

- Phase λ (lambda) is meant for launch. If pre-launch tasks still block the launch, it waits until they are done.

- Set up a new Wikimedia project.

- Move some of the wiki pages about the project from Meta to Wikifunctions.

Phase completion condition: As a person on the Web, I can visit and use Wikifunctions.org to write and run functions directly on the site.

Unphased tasks

Pre-launch tasks that need to happen but are not phased in yet: