Research:Identification of Unsourced Statements/Citation Reason Pilot

| This page in a nutshell: The goal of this labeling campaign is to collect reliable reasons for adding a [citation needed] tag to 2K statements in 3 languages. (See also this page in French and Italian.) |


About


If you are an editor of the French, Italian or English Wikipedia and are interested in contributing to technologies that improve the detection of missing citations in Wikipedia articles, please read on.

As part of our current work on verifiability, the Wikimedia Foundation’s Research team is studying ways to use machine learning to flag unsourced statements that need a citation. If successful, this project will allow us to identify areas where finding high-quality citations is particularly urgent or important.

To help with this project, we need to collect high-quality labeled data regarding individual sentences: whether they need citations, and why.

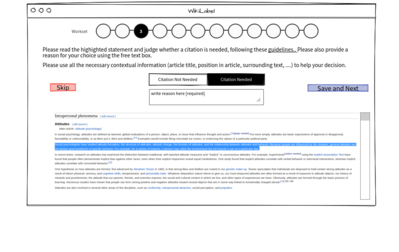

We created a tool for this purpose and we would like to invite you to participate in a pilot. The annotation task should be fun, short, and straightforward for experienced Wikipedia editors.

How to participate

If you are interested in participating, please proceed as follows:

- Sign up by adding your name to the sign-up page (this step is optional).

- Go to your language campaign (English Wikipedia, French Wikipedia, Italian Wikipedia), log in, and from 'Labeling Unsourced Statements', request one or more worksets. Each workset contains 5 tasks and takes at most 5 minutes to complete. There is no minimum number of worksets, but of course the more labels you provide, the better.

- For each task in a workset, the tool will show you an unsourced sentence in an article and ask you to annotate it. You can then label whether the sentence needs an inline citation and specify the reason for your choice.

- If you can't respond, please select 'skip'. If you can respond but you are not 100% sure about your choice, please select 'Unsure'.

If you have any questions or comments, please let us know by sending an email to miriam@wikimedia.org or by leaving a message on the project's talk page. We can easily adapt the tool if something needs to change.

Initial Results

We have analyzed an initial subset of 500 annotated statements. Results and comments can be found on our in-depth analysis page.