Research talk:Revision scoring as a service/Work log/2016-02-11

Thursday, February 11, 2016

OK. I now have the 500k set split into a 400k training subset and a 100k testing subset. Here are the lines from the Makefile:

datasets/wikidata.rev_reverted.nonbot.400k_2015.training.tsv: \
        datasets/wikidata.rev_reverted.nonbot.500k_2015.tsv
	head -n 400000 datasets/wikidata.rev_reverted.nonbot.500k_2015.tsv > \
	datasets/wikidata.rev_reverted.nonbot.400k_2015.training.tsv

datasets/wikidata.rev_reverted.nonbot.100k_2015.testing.tsv: \
        datasets/wikidata.rev_reverted.nonbot.500k_2015.tsv
	tail -n+400001 datasets/wikidata.rev_reverted.nonbot.500k_2015.tsv > \
	datasets/wikidata.rev_reverted.nonbot.100k_2015.testing.tsv

$ wc datasets/wikidata.rev_reverted.nonbot.400k_2015.training.tsv
400000 800000 6399444 datasets/wikidata.rev_reverted.nonbot.400k_2015.training.tsv
$ wc datasets/wikidata.rev_reverted.nonbot.100k_2015.testing.tsv
99966 199932 1599314 datasets/wikidata.rev_reverted.nonbot.100k_2015.testing.tsv

So we don't have quite 100k rows in the testing set (99,966), because some revisions had been deleted before I could label them.
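
The head/tail rules above are a purely positional split. A minimal Python sketch of the same idea (the name `split_head_tail` is made up for illustration) also shows why the whole shortfall lands in the testing file: `head` always takes exactly N rows and `tail` gets whatever is left. Because nothing is shuffled, this only gives a fair train/test split if the 500k file isn't ordered in some meaningful way; the log doesn't say how that file was ordered.

```python
from itertools import islice
from typing import Iterable, List, Tuple


def split_head_tail(lines: Iterable[str], n_head: int) -> Tuple[List[str], List[str]]:
    """Mimic `head -n N` and `tail -n+(N+1)` applied to the same input.

    The first n_head lines become the training split; everything after them
    becomes the testing split. Order is preserved and nothing is shuffled.
    """
    it = iter(lines)
    head = list(islice(it, n_head))  # like `head -n n_head`
    tail = list(it)                  # like `tail -n+(n_head + 1)`
    return head, tail


# Toy example: a 10-row "dataset" split 8/2 (the real split is 400,000 / the rest).
rows = [f"{rev_id}\tFalse" for rev_id in range(1, 11)]
training, testing = split_head_tail(rows, 8)
print(len(training), len(testing))  # 8 2
```

If the input has fewer than `n_head` rows, the training split simply comes up short and the testing split is empty, which matches what `head`/`tail` would do.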

Now for feature extraction.

datasets/wikidata.features_reverted.all.nonbot.400k_2015.training.tsv: \
        datasets/wikidata.rev_reverted.nonbot.400k_2015.training.tsv
	cat datasets/wikidata.rev_reverted.nonbot.400k_2015.training.tsv | \
	revscoring extract_features \
		wb_vandalism.feature_lists.experimental.all \
		--host https://wikidata.org \
		--include-revid \
		--verbose > \
	datasets/wikidata.features_reverted.all.nonbot.400k_2015.training.tsv

datasets/wikidata.features_reverted.all.nonbot.100k_2015.testing.tsv: \
        datasets/wikidata.rev_reverted.nonbot.100k_2015.testing.tsv
	cat datasets/wikidata.rev_reverted.nonbot.100k_2015.testing.tsv | \
	revscoring extract_features \
		wb_vandalism.feature_lists.experimental.all \
		--host https://wikidata.org \
		--include-revid \
		--verbose > \
	datasets/wikidata.features_reverted.all.nonbot.100k_2015.testing.tsv

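Here, we lost observations to edits outside the main namespace: 2,950 rows from the training set (400,000 to 397,050) and 744 from the testing set (99,966 to 99,222).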

$ wc datasets/wikidata.features_reverted.all.nonbot.400k_2015.training.tsv
397050 24617100 97650478 datasets/wikidata.features_reverted.all.nonbot.400k_2015.training.tsv
$ wc datasets/wikidata.features_reverted.all.nonbot.100k_2015.testing.tsv
99222 6151764 24403909 datasets/wikidata.features_reverted.all.nonbot.100k_2015.testing.tsv
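
The `wc` word counts double as a quick sanity check: `wc` counts whitespace-separated words, so words divided by lines gives the number of fields per row, assuming no feature value contains a space. A stricter check can be sketched in Python; `check_feature_rows` is a hypothetical helper, and the 62-column, True/False-label layout is taken from the description of the "all" feature file in this log.

```python
from typing import Iterable


def check_feature_rows(lines: Iterable[str], n_cols: int = 62) -> int:
    """Check that every TSV row has n_cols fields and ends in a True/False label.

    Returns the number of rows checked and raises ValueError on the first bad row.
    """
    count = 0
    for lineno, line in enumerate(lines, start=1):
        fields = line.rstrip("\n").split("\t")
        if len(fields) != n_cols:
            raise ValueError(f"line {lineno}: expected {n_cols} fields, got {len(fields)}")
        if fields[-1] not in ("True", "False"):
            raise ValueError(f"line {lineno}: label {fields[-1]!r} is not True/False")
        count += 1
    return count


# Quick arithmetic from the wc output above: words / lines = fields per row.
print(24617100 / 397050, 6151764 / 99222)  # 62.0 62.0
# Rows dropped during feature extraction (training, testing):
print(400000 - 397050, 99966 - 99222)  # 2950 744
```

On a real file this would be called as `with open(path) as f: check_feature_rows(f)`, which streams the rows rather than loading the whole TSV into memory.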

Now I can split this "all" feature set into subsets with cut. For example, to get just the general feature set:

datasets/wikidata.features_reverted.general.nonbot.400k_2015.training.tsv: \
        datasets/wikidata.features_reverted.all.nonbot.400k_2015.training.tsv
	cat datasets/wikidata.features_reverted.all.nonbot.400k_2015.training.tsv | \
	cut -f1-34,62 > \
	datasets/wikidata.features_reverted.general.nonbot.400k_2015.training.tsv

datasets/wikidata.features_reverted.general.nonbot.100k_2015.testing.tsv: \
        datasets/wikidata.features_reverted.all.nonbot.100k_2015.testing.tsv
	cat datasets/wikidata.features_reverted.all.nonbot.100k_2015.testing.tsv | \
	cut -f1-34,62 > \
	datasets/wikidata.features_reverted.general.nonbot.100k_2015.testing.tsv

The first column is the rev_id, the next 33 columns (2-34) are the general feature set, and the 62nd (last) column is the True/False (reverted or not) label. This is a bit ticklish, since it depends on getting the column numbers exactly right, but it makes for fast sub-samples of the dataset and seems to be working as expected.
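
For anyone who would rather do this column slicing outside of make, here is a rough Python analogue of `cut -f`. The name `cut_fields` is hypothetical, and the `GENERAL_COLUMNS` list just encodes the layout described above (rev_id, 33 general features, label in column 62).

```python
from typing import Iterable, Iterator, Sequence


def cut_fields(lines: Iterable[str], keep: Sequence[int]) -> Iterator[str]:
    """Keep the 1-indexed, tab-separated fields listed in `keep`, like `cut -f`.

    As with cut, fields come out in ascending column order and each at most once,
    whatever order `keep` is given in. Unlike cut, this assumes every row has at
    least max(keep) fields (cut would silently skip the missing ones).
    """
    cols = sorted(set(keep))
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        yield "\t".join(fields[c - 1] for c in cols)


# Equivalent of `cut -f1-34,62`: rev_id, the 33 general features, and the label.
GENERAL_COLUMNS = list(range(1, 35)) + [62]

# Usage on a real file (not run here):
# with open("datasets/wikidata.features_reverted.all.nonbot.400k_2015.training.tsv") as f:
#     for row in cut_fields(f, GENERAL_COLUMNS):
#         print(row)
```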

Test scores

I wanted a dataset of (rev_id, score) pairs, so I adapted one of the test utilities to output just the probability of the True (reverted) prediction. See https://github.com/wiki-ai/wb-vandalism/blob/sample_subsets/wb_vandalism/test_scores.py. Here are the docs:

Tests a model by generating a set of scores and outputting probabilities.

Usage:
    test_scores <model-file>
                [--verbose]
                [--debug]

Options:
    -h --help     Prints this documentation
    <model-file>  The path to a model file to load.

And here is the Makefile rule:

datasets/wikidata.reverted.all.rf.test_scores.tsv: \
        datasets/wikidata.features_reverted.all.nonbot.100k_2015.testing.tsv \
        models/wikidata.reverted.all.rf.model
	cat datasets/wikidata.features_reverted.all.nonbot.100k_2015.testing.tsv | \
	python wb_vandalism/test_scores.py \
		models/wikidata.reverted.all.rf.model > \
	datasets/wikidata.reverted.all.rf.test_scores.tsv
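
The script itself lives at the link above and its internals aren't reproduced in this log. As a rough sketch of the data flow only: each feature row (rev_id, features..., label) becomes a rev_id and P(True) line. `score_rows`, the `prob_true` callable, and `dummy_prob_true` are hypothetical stand-ins for whatever the loaded model exposes; this is not the revscoring API.

```python
from typing import Callable, Iterable, Iterator, List


def score_rows(
    lines: Iterable[str],
    prob_true: Callable[[List[str]], float],
) -> Iterator[str]:
    """Turn feature rows (rev_id, features..., label) into rev_id<TAB>P(True) lines.

    `prob_true` is a placeholder for the model: it receives the raw feature
    strings and must return the probability of the True (reverted) class.
    """
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        rev_id, features = fields[0], fields[1:-1]  # drop the trailing label
        yield f"{rev_id}\t{prob_true(features)}"


def dummy_prob_true(features: List[str]) -> float:
    """Hypothetical toy 'model': the fraction of feature values equal to "True"."""
    return sum(f == "True" for f in features) / max(len(features), 1)


for out in score_rows(["123\tTrue\tFalse\tTrue\n"], dummy_prob_true):
    print(out)  # 123	0.5
```

Keeping the label out of the output matches the (rev_id, score) pairs described above; the labels can be joined back on rev_id from the testing file when computing metrics.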

I ran into a couple of bugs (mostly Makefile typos) along the way, so the last few test_scores files are still being generated. Tomorrow, I hope to dazzle you (dear reader) with graphs of precision, recall, and filter rate! --EpochFail (talk) 05:28, 11 February 2016 (UTC)
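
For readers unfamiliar with "filter rate": given (score, label) pairs and a threshold, the three metrics can be sketched as below. This assumes edits are flagged for review when their score is at or above the threshold and that filter rate means the share of edits *not* flagged (the share a patroller can skip); `threshold_stats` is a hypothetical helper, not the code behind the graphs.

```python
from typing import Sequence, Tuple


def threshold_stats(
    scores: Sequence[float], labels: Sequence[bool], threshold: float
) -> Tuple[float, float, float]:
    """Return (precision, recall, filter_rate) when flagging edits with score >= threshold.

    filter_rate is the share of edits that are NOT flagged, i.e. the share of
    edits a reviewer could skip. Precision/recall are NaN when undefined.
    """
    flagged = [s >= threshold for s in scores]
    tp = sum(f and y for f, y in zip(flagged, labels))  # flagged and actually reverted
    n_flagged = sum(flagged)
    n_pos = sum(labels)
    precision = tp / n_flagged if n_flagged else float("nan")
    recall = tp / n_pos if n_pos else float("nan")
    filter_rate = 1 - n_flagged / len(scores)
    return precision, recall, filter_rate


# Toy data (made up): two of four edits reverted, flag anything scoring >= 0.5.
print(threshold_stats([0.9, 0.8, 0.3, 0.1], [True, False, True, False], 0.5))  # (0.5, 0.5, 0.5)
```

Sweeping the threshold from 1 down to 0 and plotting these three numbers gives the kind of fitness curves discussed below.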

Response time tester

Today I built a response time tester. To use it, you can run something like this:

sql wikidatawiki "select rc_this_oldid from recentchanges where rc_Type = 0 order by rand() limit 5000;" | python3 time_scorer.py wikidatawiki --model=reverted > res2.tsv

It records each revision ID with its response time, plus an error log if anything fails.

- https://tools.wmflabs.org/dexbot/res2.txt -> 1000 edits from Wikidata, not batched

- https://tools.wmflabs.org/dexbot/res_wikidata.txt -> 1000 edits from Wikidata, batched

- https://tools.wmflabs.org/dexbot/res_enwp.txt -> 1000 edits from English Wikipedia, not batched

- https://tools.wmflabs.org/dexbot/res_enwp_batched.txt -> 1000 edits from English Wikipedia, batched
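
time_scorer.py isn't reproduced in this log, so here is only a minimal sketch of the kind of timing loop described above (rev id, response time, error). `time_scores`, `to_tsv`, and the `score` callable are hypothetical names; a real version would make the HTTP request to ORES inside `score`.

```python
import time
from typing import Callable, Iterable, List, Optional, Tuple

Result = Tuple[int, float, Optional[str]]


def time_scores(rev_ids: Iterable[int], score: Callable[[int], object]) -> List[Result]:
    """Call score(rev_id) for each revision and record (rev_id, seconds, error).

    Errors are caught and recorded instead of aborting the run, so one deleted or
    failing revision doesn't lose the timings for the rest.
    """
    results: List[Result] = []
    for rev_id in rev_ids:
        start = time.perf_counter()
        try:
            score(rev_id)
            error = None
        except Exception as e:  # keep going; log the failure alongside its timing
            error = repr(e)
        results.append((rev_id, time.perf_counter() - start, error))
    return results


def to_tsv(results: Iterable[Result]) -> str:
    """Format results as rev_id<TAB>seconds<TAB>error lines (empty error if none)."""
    return "\n".join(f"{r}\t{t:.4f}\t{e or ''}" for r, t, e in results)
```

`time.perf_counter` is used rather than `time.time` because it is monotonic and meant for measuring short intervals.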

I also drew some plots and will upload them soon. There are two peaks: one close to zero, which is (probably) cached edits, and another around 1.6 seconds. Amir (talk) 19:07, 11 February 2016 (UTC)

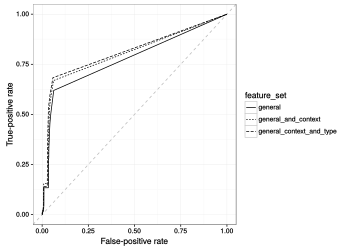

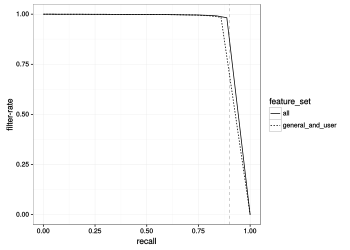
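
One way to put numbers on the two peaks is to split the response times at a cutoff and summarize each group separately. This is a sketch only: `summarize_peaks` is a hypothetical helper, and the 0.1-second cutoff is an assumption picked to sit between the near-zero (probably cached) peak and the slower peak, not something the tester defines.

```python
from statistics import median
from typing import Dict, Sequence


def summarize_peaks(seconds: Sequence[float], cache_cutoff: float = 0.1) -> Dict[str, float]:
    """Split response times at `cache_cutoff` and report count and median of each group.

    The default 0.1 s cutoff is an assumed boundary between the fast (probably
    cached) peak and the slow peak. Medians are NaN for an empty group.
    """
    fast = [s for s in seconds if s < cache_cutoff]
    slow = [s for s in seconds if s >= cache_cutoff]
    return {
        "n_fast": len(fast),
        "median_fast": median(fast) if fast else float("nan"),
        "n_slow": len(slow),
        "median_slow": median(slow) if slow else float("nan"),
    }
```

Medians are used instead of means because a handful of very slow edits would otherwise pull the "slow" summary well away from where the peak actually sits.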

Fitness curves for our models

I just got done building some fitness curves for our models. For this, I used the 100k test set that we held aside during training. All models were tested on the exact same set of 99,222 revisions.

Generally, I think we can take away the same conclusions that we drew from the basic statistics. It looks like `all` and `general+user` have a clear benefit over the other feature subsets. We get only a very minor benefit from adding contextual and edit-type features to general and user. That doesn't look like much in the graphs, but note that the filter rate @ .90 recall is substantially higher when using all of the features. This amounts to the difference between reviewing 5% of edits and reviewing 35% of edits. That's 7X more reviewing workload! --EpochFail (talk) 19:50, 11 February 2016 (UTC)

- Just added the ROC plots. They are a bit misleading, since the false-positive rate jumps from tiny values straight to 1.00. --EpochFail (talk) 22:01, 11 February 2016 (UTC)
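
Both "filter rate @ .90 recall" and the ROC points can be computed from the same sweep over score thresholds. The sketch below assumes edits are flagged when their score is at or above a threshold and that tied scores must be flagged together (a threshold can't split them). The function names are hypothetical and this isn't necessarily how the plots above were produced. It also illustrates one *possible* cause of a big FPR jump: many non-reverted edits sharing the same low score enter the flagged set all at once. The log doesn't say whether that is what happened here.

```python
from typing import List, Sequence, Tuple


def _cumulative_counts(scores: Sequence[float], labels: Sequence[bool]) -> List[Tuple[int, int, int]]:
    """(flagged, true positives, false positives) after lowering the threshold past
    each distinct score, highest first. Tied scores are flagged together."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    counts: List[Tuple[int, int, int]] = []
    tp = fp = 0
    i = 0
    while i < len(ranked):
        current = ranked[i][0]
        while i < len(ranked) and ranked[i][0] == current:  # consume the whole tie group
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        counts.append((tp + fp, tp, fp))
    return counts


def filter_rate_at_recall(scores: Sequence[float], labels: Sequence[bool], target: float = 0.9) -> float:
    """Highest filter rate (share of edits NOT flagged) that still keeps recall >= target."""
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("need at least one reverted example")
    for flagged, tp, _ in _cumulative_counts(scores, labels):
        if tp / n_pos >= target:
            return 1 - flagged / len(scores)
    return 0.0  # only reached if target > 1


def roc_points(scores: Sequence[float], labels: Sequence[bool]) -> List[Tuple[float, float]]:
    """(false-positive rate, true-positive rate) at every distinct score threshold."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("ROC needs both reverted and non-reverted examples")
    return [(0.0, 0.0)] + [(fp / n_neg, tp / n_pos) for _, tp, fp in _cumulative_counts(scores, labels)]


# Toy data (made up): three non-reverted edits tied at 0.0 produce one jump from FPR 1/3 to 1.0.
print(roc_points([0.9, 0.8, 0.0, 0.0, 0.0], [True, False, True, False, False]))
```

The share of edits a reviewer still has to look at is 1 minus the filter rate, which is how a filter-rate difference turns into the 5% vs 35% review workload comparison above.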

Speed of scoring

Ladsgroup and I have been working on a good visualization for the speed at which you can get "reverted" prediction scores from ORES for Wikidata. See his notes above. Here's a first hack at a black-and-white plot of the timing.

So it looks like single requests will generally be scored in about 0.5 seconds, with rare cases taking 2-10 seconds. If the score has already been generated, the system will generally respond in 0.01 seconds! This is a common case, since we run a pre-caching service that scores edits as soon as they are saved. If you want a bit more performance when scoring a large set, it looks like you can expect to average 0.12 seconds per revision when requesting scores in batch. As the plot suggests, this timing varies quite widely, likely due to the rare individual edits that take a loooong time to score. --EpochFail (talk) 21:45, 11 February 2016 (UTC)
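
The batched figure is a per-revision average, so it helps to be explicit about how it's derived. A minimal sketch, assuming the batched runs record each request's batch size and wall-clock time (a hypothetical input format; the log doesn't describe what the batched tester records). `per_revision_seconds` is likewise a made-up name.

```python
from statistics import mean, median
from typing import Dict, Sequence, Tuple


def per_revision_seconds(batches: Sequence[Tuple[int, float]]) -> Dict[str, float]:
    """Summarize batched timings given (n_revisions, wall_seconds) per request.

    'overall' is total time divided by total revisions. 'mean_batch' and
    'median_batch' summarize each batch's own per-revision average; the median is
    less sensitive to an occasional batch slowed down by one very slow edit.
    """
    if not batches:
        raise ValueError("no batches")
    per_batch = [secs / n for n, secs in batches]
    total_n = sum(n for n, _ in batches)
    total_s = sum(s for _, s in batches)
    return {
        "overall": total_s / total_n,
        "mean_batch": mean(per_batch),
        "median_batch": median(per_batch),
    }


# Toy numbers (made up): the third batch is dragged out by a slow edit.
print(per_revision_seconds([(50, 5.0), (50, 6.0), (50, 30.0)]))
```

Reporting the median alongside the overall average makes the "varies quite widely" effect visible: one slow batch inflates the overall figure much more than the median.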