Grants:IEG/Revision scoring as a service/Final

This project is funded by an Individual Engagement Grant

This Individual Engagement Grant is renewed


Welcome to this project's final report! This report shares the outcomes, impact and learnings from the Individual Engagement Grantee's 6-month project.

Part 1: The Project

Summary

Our project aims to create an Artificial Intelligence (AI) infrastructure for Wikimedia sites as well as any other MediaWiki installation. We have successfully deployed a working revision scoring service that currently supports 5 languages (enwiki, fawiki, frwiki, ptwiki and trwiki), and we have made the onboarding of new languages relatively trivial (specify language-specific features or simply choose to use only language-independent features). In order to gather labeled data for new languages and machine learning problems, we also developed and deployed a generalized crowd-sourced labeling system (see Wiki labels) with translations for all of our supported languages.

We currently support two types of models:

- "reverted" -- Predicts whether an edit will need to be reverted. This is useful for counter-vandalism tools and newcomer support tools like en:WP:Snuggle

- "wp10" -- Predicts the Wikipedia 1.0 assessment rating of an article. This is useful to triage WikiProject assessment backlogs. (e.g. Research:Screening WikiProject Medicine articles for quality)

An "edit_type" model is currently in development.

To accomplish this, we have developed and released a set of libraries and applications that are openly licensed (MIT):

- revscoring -- a Python library for building machine learning models to score MediaWiki revisions

- ores -- a Python application for hosting revscoring models behind a web API

- wikilabels -- a complex web application built in Python/HTML/CSS/JavaScript that uses OAuth to integrate an on-wiki gadget with a WMFLabs-hosted back-end to provide a convenient interface for hosting crowd-sourced labeling tasks on Wikimedia sites

This project is hardly done and will likely (hopefully) never be done. We leave the IEG period developing new models, increasing the systems' scalability and coordinating with tool developers (e.g. en:WP:Huggle) to switch to using the revscoring system.

Methods and activities

This project was very technically focused, so primarily, we built stuff.


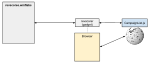

The technical work was split across three components:

- Revscoring (Revision Scoring): houses the AI back-end with a number of machine learning classifiers.

- ORES (Objective Revision Evaluation Service): our API for tool developers and academia. Users, tools and bots alike can query it.

- Wiki labels: unlike existing AI tools, we made an extra effort to crowdsource hand-coding to contributors. This gives us a training set and also brings in feedback from the communities we serve, which helps us better train our machine learning algorithms.
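As a rough illustration of how a tool might consume a scoring service like ORES, the sketch below builds a request URL and reads a prediction out of a JSON response. The host name, the URL layout and the response fields (`prediction`, `probability`) are assumptions made for this example and are not taken from this report; consult the ORES documentation for the actual interface.

```python
import json
from urllib.parse import quote

# Hypothetical base URL; the real service address is not given in this report.
BASE_URL = "https://ores.example.org/scores"


def score_url(wiki, model, rev_id):
    """Build a URL for scoring one revision (assumed URL layout)."""
    return "{0}/{1}/{2}/{3}".format(BASE_URL, quote(wiki), quote(model), int(rev_id))


def parse_score(body, rev_id):
    """Extract (prediction, probability of True) from an assumed JSON response."""
    doc = json.loads(body)
    score = doc[str(rev_id)]
    return score["prediction"], score["probability"]["true"]


# A made-up response body, shaped the way this sketch assumes.
sample_body = '{"12345": {"prediction": true, "probability": {"true": 0.91, "false": 0.09}}}'

url = score_url("enwiki", "reverted", 12345)
prediction, p_reverted = parse_score(sample_body, 12345)
print(url)
print(prediction, p_reverted)
```

A counter-vandalism tool could, for example, sort recent changes by `p_reverted` so that reviewers see the most suspicious edits first.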

With technical work comes documentation. We wrote a lot of documentation.

- We wrote docs on how to make use of the revscoring system (since "ores" is just one use-case): see pythonhosted.org/revscoring

- We wrote docs on how Wiki labels works and how you can enable it on your wiki, and we documented the project on individual wikis (e.g. en:WP:labels & fr:WP:Label)

- We published weekly status updates so that our followers could track our progress more easily: see Research talk:Revision scoring as a service

Finally, we also engaged in substantial work with "the community". We discussed in our midpoint report how we were featured in the Signpost on several wikis. Since the midpoint, most of our community engagement has revolved around Wiki labels campaigns -- reporting on progress, answering questions, fixing reported bugs and encouraging continued participation. E.g., see the talk page for our English Wikipedia labeling campaign.

Outcomes and impact

Outcomes

As we have covered previously, we have deployed the systems we set out to build and deploy. The only area in which we did not reach our goal was the labeling campaigns, which we were not able to complete quickly enough. As a result, our models are currently trained on reverted edits rather than human-labeled data. This is temporary and will be rectified as soon as the campaigns are completed.

For measurable outcomes, we stated goals in two categories: model fitness and adoption rate.

Progress towards stated goals

| Planned measure of success (include numeric target, if applicable) | Actual result | Explanation |
|---|---|---|
| Comparable to state-of-the-art model fitness | We are matching or beating the reported state-of-the-art (84% AUC) in 5 wikis for our revert predictor models | Woo! |
| Adoption by tool developers. | We didn't actually instrument our API to measure usage. | It seems that our time was better spent engineering the system to be able to deal with demand than counting individual tools. However, we have some key indications of adoption & planned adoption. WEF and WikiProject X are making use of our article quality predictor. We are working with en:WP:Huggle devs to help them convert to using ORES. We have developed our own tool based on revision scores (see RCScoreFilter, which currently has 11 users despite its demo-level quality). |
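For readers unfamiliar with the AUC figure quoted above, here is a minimal sketch of how the area under the ROC curve can be computed for a revert predictor. It uses the pairwise definition (the chance that a randomly chosen reverted edit scores higher than a randomly chosen non-reverted one). The data is made up for illustration, and this is not necessarily how the project computed its own statistics.

```python
def roc_auc(labels, scores):
    """ROC AUC: probability that a random positive outscores a random negative.

    Ties count as half a win. Brute force over pairs, fine for small samples.
    """
    positives = [s for l, s in zip(labels, scores) if l]
    negatives = [s for l, s in zip(labels, scores) if not l]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Made-up data: True means the edit was later reverted.
was_reverted = [True, True, False, True, False, False]
predicted = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
auc = roc_auc(was_reverted, predicted)
print(round(auc, 3))  # 8 of 9 positive/negative pairs are ordered correctly: 0.889
```

An AUC of 0.5 corresponds to random guessing and 1.0 to a perfect ranking, so a reported 84% means that the model ranks a reverted edit above a non-reverted one in roughly 84 of 100 such pairs.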

Global Metrics

These metrics have limited relevance for our project. We are primarily building back-end infrastructure, so we do not have a direct impact on content creation. Furthermore, the ultimate goal of our project is to process on-wiki tasks such as counter-vandalism or WP 1.0 assessments and to predict future cases as accurately as possible. For that we rely on the feedback of more experienced users, which at this stage of our project significantly limits our interaction with newer users. We therefore do not achieve these global metrics directly so much as provide support that helps others achieve them.

In reflection, it seems like this list is more relevant to edit-a-thons and other in-person events where it is easier to count individuals and to track the work that they do. However, we try to address the prompts as well as we can below.

For more information and a sample, see Global Metrics.

| Metric | Achieved outcome | Explanation |
|---|---|---|
| 1. Number of active editors involved | 51 active editors helping us label revisions, several others involved in discussion of machine learning in Wikipedia in general. How many users do WikiProject Medicine and the WEF account for? | |
| 2. Number of new editors | 0 | We aren't yet at the stage where our project will have impacted this. |
| 3. Number of individuals involved | Measurement of this is not tractable. | |
| 4. Number of new images/media added to Wikimedia articles/pages | N/A | We do not add new content. Media related to our project: Commons:Revision scoring as a service (category). |
| 5. Number of articles added or improved on Wikimedia projects | 0 | Our system supports triage, so you might count every article where a user used our tool to perform a revert, but that's probably not what is desired here. |
| 6. Absolute value of bytes added to or deleted from Wikimedia projects | N/A | We don't really edit directly -- we just evaluate in support of editors doing the work themselves. We did, however, create a landing page on each of the wikis we operate on. |

- Learning question

- Did your work increase the motivation of contributors, and how do you know?

- This is a larger goal of User:Halfak (WMF)'s research agenda. In his expert opinion, this project provides a critical means to stopping the mass demotivation of newcomers[1]. So, to answer the exact question, we did nothing to increase contributor motivation. To answer the spirit of the question, we have done some very critical things to preserve contributor motivation. See Halfak's recent presentation for more discussion.

Indicators of impact

- How did you improve quality on one or more Wikimedia projects?

- We built a system that provides the essential functionality to triage quality work -- AI-based prediction of quality and quality changes.

- Our system provides this quality dimension in a way that is extremely easy for tool developers to work with.

- Our system provides this quality dimension to wikis that have historically suffered from a lack of triage support.

Project resources

Mockups

Screenshots

Presentations

ORES performance plans & analysis

Project management

- trello.com/b/kks8UsRv/revscoring -- Managing weekly tasks

- etherpad.wikimedia.org/p/revscoring -- Meeting notes (outside of trello)

Project documentation

- Research:Revision scoring as a service (General documentation)

Repositories

- github.com/halfak/Revision-Scoring

- github.com/halfak/Objective-Revision-Evaluation-Service

- github.com/halfak/Wiki-labels

Wiki labels WikiProjects

- en:Wikipedia:Labels

- az:Vikipediya:Etiketləmə

- de:Wikipedia:Kennzeichnung

- fa:ویکیپدیا:برچسبها (demonstrates rtl and unicode support!)

- fr:Wikipédia:Label

- pt:Wikipédia:Projetos/Rotulagem

- tr:Vikipedi:Etiketleme

Wikimedia Labs project

Nova_Resource:Revscoring

Outreach

- phab:T90034 -- Wikimedia Hackathon session

- English Wikipedia signpost en:Wikipedia:Wikipedia Signpost/2015-02-18/Special report

- Portuguese Wikipedia technical Village Pump:

- Persian Wikipedia

- Turkish village pump

- Azerbaijani village pump

Learning

What worked well

- SCRUM methodology

- We held weekly meetings to adjust and/or reprioritize our workload to best meet our longer term goals. Do this! This methodology was particularly helpful when something unexpected happened where we needed to shift our attention to a specific component of our project.

- Project management with trello

- This is probably true of any card/swimlane-based system. When it came to prioritizing work and coordinating who would work on what, it was very helpful to be able to assign cards and then talk about them the next week if they were not finished. Do this. It's good.

- Lots of mockups

- We didn't have a single disagreement about how something should look or work because we mocked things up in advance. For interfaces, we started mocking them up before the IEG began and went through a few iterations before implementing things. We also "mocked up" APIs by talking about what URLs would be served and what the responses would look like. This let us work quickly when it was time to write the code.

- Hackathons

- We gathered a lot of attention and many potential long-term collaborators to the project at the Wikimedia Hackathon in Lyon. We're planning to do a similar push at the Wikimania Hackathon since it was such an obvious win.

- Presentations

- We presented on this project in several different venues. This has helped us get some of the resources that we need. It's common that IEG-like projects are a bit ahead of their time. When that's the case, helping an audience understand *why* it is you are doing what you are doing is critical.

- Code review

- We followed a simple rule for each code project once it hit a basic level of maturity: You may not merge your own changes into "master". That means we filed pull requests and had to have at least one other person sign off on a change before it would be incorporated into the "master" branch of the code. While this had the potential to slow us down, we minimized the problem by discussing open pull requests whenever we met. The upside is that our code quality is high and the combined concerns of our team were expressed effectively in the code-bases we shared.

- Learning patterns

- Keeping documentation of discussions with team: we kept some notes on Etherpad during our meetings

- Project roles: we had some roles assigned for each participant (e.g. community engagement, project management, reporting and documentation)

- Short reports go a long way is a learning pattern related to our usage of Trello and git history as basis for the weekly/monthly reports

- Let the community know is an important pattern when the success of our project also depends on the participation of volunteer members of the communities (e.g. to label revisions which are used to train Machine Learning models)

What didn’t work

- We had difficulty attracting volunteers. Even users with a technical background were hesitant to help hand-code data through Wiki labels.

- We believe there are two reasons for this:

- Artificial Intelligence is complicated and hard to understand, which caused users to shy away from participating even though our work reduces the need to understand how it works. We weren't able to convey the social aspects of our technical work quickly enough to gather enough volunteers for Wiki labels.

- Users are afraid of making mistakes, so they avoid making "close calls". We worked around this problem by adding an "unsure" checkbox.
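To illustrate why an "unsure" flag is useful downstream, the sketch below shows one possible way to represent labels and to keep only the confident ones when building a training set. The field names (`rev_id`, `damaging`, `unsure`) and the filtering policy are hypothetical; the report does not say how Wiki labels stores or post-processes these labels.

```python
from dataclasses import dataclass


@dataclass
class Label:
    rev_id: int      # revision that was reviewed (hypothetical field)
    damaging: bool   # the labeler's judgement (hypothetical field)
    unsure: bool     # set when the labeler ticked "unsure"


def training_examples(labels):
    """Keep confident labels only; return (rev_id, damaging) pairs."""
    return [(l.rev_id, l.damaging) for l in labels if not l.unsure]


def unsure_rate(labels):
    """Share of labels flagged as unsure (0.0 for an empty list)."""
    return sum(l.unsure for l in labels) / len(labels) if labels else 0.0


# Made-up labels for illustration.
labels = [
    Label(101, damaging=False, unsure=False),
    Label(102, damaging=True, unsure=True),
    Label(103, damaging=True, unsure=False),
    Label(104, damaging=False, unsure=True),
]
examples = training_examples(labels)
rate = unsure_rate(labels)
print(examples, rate)
```

Recording uncertainty separately lets labelers submit a best guess on close calls without polluting the training data, and the unsure items can be routed to a second reviewer instead of being discarded.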

Other recommendations

- We believe separate global metrics are needed to better quantify the kind of technical infrastructure work we are performing. Even though our project is intended to have a high impact on Wikimedia's strategic goals, our impact at this stage is mostly indirect.

- For instance, we enable tool developers to build tools that use AI, which should make those tools more effective. However, this depends entirely on how quickly our system is adopted.

- Also consider that we are trying to stop the mass demotivation of newcomers and to preserve contributor motivation. We believe this will have a profound impact on the Wikimedia Strategic Goals, but it is not measured by the global metrics at the moment. For example, at this stage of our project we are seeking to prevent the alienation of newcomers brought in by other means, such as other projects or campaigns, rather than to bring in newcomers ourselves. In that sense, we indirectly support all other attempts to attract new users.

Next steps and opportunities

- The limitless nature of the opportunities offered by Artificial Intelligence makes this particularly difficult to summarize. We have so far only uncovered the tip of the iceberg.

- In these six months, as discussed above, we were able to set up the Artificial Intelligence framework that future work will build on.

- We have also addressed the less visible but critical hardware and performance aspects of our project.

- Hence we see great potential for our project if we can continue it. In the next six months...

- We discuss this in greater detail in the renewal proposal.

Part 2: The Grant

Finances

Actual spending

| Expense | Approved amount | Actual funds spent | Difference |
|---|---|---|---|
| Development & community organizing stipends (とある白い猫) | $7500 | $7500 | $0 |
| Development & community organizing stipends (He7d3r) | $4875 | $4875 | $0 |
| Development & community organizing stipends (He7d3r) | $4500 | $4500 | $0 |
| Total | $16875 | $16875 | $0 |

Remaining funds

- No unspent funds remain.

Documentation

Did you send documentation of all expenses paid with grant funds to grantsadmin@wikimedia.org, according to the guidelines here?

Please answer yes or no. If no, include an explanation.

- Yes

Confirmation of project status

Did you comply with the requirements specified by WMF in the grant agreement?

Please answer yes or no.

- Yes.

Is your project completed?

Please answer yes or no.

- Yes, we achieved our goals for this 6 month cycle and we are seeking a renewal to build on top of our progress.

Grantee reflection

- This project allowed me to utilize my expertise in Artificial Intelligence for the benefit of the Wikimedia family as a whole. I enjoyed this aspect of the IEG the most. -- とある白い猫 chi? 09:50, 24 June 2015 (UTC)

- Seeing for the first time the recent changes feed coloured with predictions of reversions obtained from our project was really amazing. I enjoyed the process to get there a lot :-) It allowed me to practice my understanding of AI, Python while creating something which will benefit a lot of users. Helder 14:47, 24 June 2015 (UTC)