Research:Article feedback/Stage 3/Conversion and newcomer quality


Methods

Experimental conditions

- 0X - control case

- 1E - AFTv5 Option 1 appears at the bottom of the article and also via prominent link E. After feedback is submitted a call to action appears that requests the user make an edit.

- 4E - AFTv5 is replaced with a direct call to action at the bottom of the page or via prominent link E.

Datasets

aft_new_users

- All edits made to the sample of articles between 4/27 and 5/07 were gathered from the clicktracking logs (n=4195)

- Limited the set to the first tracked edit per user (n=2184)

- Limited the set to only those editors who had not made a previous edit (using checkuser to eliminate registered editors editing anonymously) (n=578)

aft_new_user_revisions

- For each new user, the revisions saved (both added and deleted) to all pages (including those outside the sample) within a week of their first edit were gathered from the revision & archive MySQL tables.

- To observe contributions made by editors who registered an account after making their transition, the recentchanges table was used to look for their account registration. This ended up applying to very few users (0X: 4, 1E: 4, 4E: 9).

- Using the API, reverts were identified by looking for identical revision content within 15 revisions before and after the edit, as described in [1]. Revisions that were reverted within 48 hours by another editor were flagged.
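The revert-flagging step described in the last item can be sketched as follows. This is a minimal illustration rather than the script used in the study: the record fields (`rev_id`, `user`, `sha1`, `timestamp`) and the function name `find_reverted` are hypothetical, and identical revision content is assumed to be detected by comparing a checksum of each revision's text.

```python
from datetime import datetime, timedelta


def find_reverted(history, radius=15, window=timedelta(hours=48)):
    """Return the rev_ids of revisions reverted by another editor within `window`.

    `history` is one page's revisions ordered oldest to newest. Each revision is
    a dict with the (hypothetical) keys rev_id, user, sha1 and timestamp.
    """
    flagged = set()
    for i, rev in enumerate(history):
        # Content states seen in the `radius` revisions before this one.
        prior = {r["sha1"] for r in history[max(0, i - radius):i]}
        for later in history[i + 1:i + 1 + radius]:
            # A later revision that restores an earlier state undoes `rev`.
            if later["sha1"] in prior and later["sha1"] != rev["sha1"]:
                by_other = later["user"] != rev["user"]
                in_time = later["timestamp"] - rev["timestamp"] <= window
                if by_other and in_time:
                    flagged.add(rev["rev_id"])
                break  # only the first reverting revision is considered
    return flagged
```

Self-reverts and reverts that arrive after the 48-hour window are deliberately left unflagged, matching the description above.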

Metrics

Transition rate. The driving research question of this study is "How does AFTv5 affect the rate at which readers transition to become new editors?" This question is particularly relevant due to the recently observed English Wikipedia decline and the well-researched conclusion that the decline is a product of decreased newcomer retention[2][3]. Concerns have also been raised that AFTv5 has the potential to "cannibalize" potential new editors by redirecting the energy they may have put into editing into feedback[citation needed].

To explore the transition rate, we used the click tracking logs produced from readers and editors using the tool to identify which experimental condition was delivered at the time that an edit is made[4]. We limit our assessment of a "transition" to editors who appear to have made their first edit as described above for aft_new_users.

This approach is limited in that it disregards editors who began editing on pages outside of our sample. Also, it is difficult to know when anonymous editors have never edited before due to the prevalence of dynamic IP addresses. We assume that these limitations apply equally to each of our experimental conditions and do not reduce our ability to reason about comparisons; however, these discrepancies may have unknown effects on individual statistics interpreted outside of comparison.

Productive edits and editors. Comparing the value of good contributions (productivity) with the cost of bad contributions (anti-productivity) is essential for understanding the cost and benefit of introducing new editors into Wikipedia. To measure productivity, we borrow the judgement of other editors (expressed in the form of a revert) to evaluate the quality of contributions. We assume that a revision reverted by another editor within 48 hours was likely to have been unproductive. Conversely, we assume that revisions that were not reverted within 48 hours were likely to have contributed some value to the articles they changed. We limit the revert analysis to 48 hours to control for revisions that were saved towards the beginning of the observation period, under the assumption that revert tools like Huggle make the quick identification of unproductive edits across the wiki both efficient and predictable.

I limit my analysis to edits within the Main (encyclopedia article) namespace since a revert/non-revert of a revision in another namespace is not so closely coupled to the utility of the contribution. For example, many talk page contributions can appear to have been reverted by the common process of archiving conversations.

As an extension of our approach for determining the productivity of edits, I apply a similar concept to identifying "productive editors". I classify an editor as productive if, within their first week of editing, they make at least one contribution to an encyclopedia article that is not reverted within 48 hours.
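The "productive editor" rule above can be expressed as a short predicate. The sketch below is illustrative only: the field names (`namespace`, `reverted_48h`, `timestamp`) and the function name are hypothetical, and it assumes the 48-hour revert flag has already been computed for each revision. Namespace 0 is MediaWiki's Main (article) namespace.

```python
from datetime import datetime, timedelta

MAIN_NAMESPACE = 0  # MediaWiki's article namespace


def is_productive_editor(revisions, first_edit_time):
    """True if the editor saved at least one article revision in their first
    week that was not reverted within 48 hours.

    Each revision is a dict with the (hypothetical) keys namespace,
    reverted_48h and timestamp.
    """
    week_end = first_edit_time + timedelta(days=7)
    return any(
        r["namespace"] == MAIN_NAMESPACE
        and not r["reverted_48h"]
        and first_edit_time <= r["timestamp"] <= week_end
        for r in revisions
    )
```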

Origin of transition. To explore the differences between new editors based on how their first edit originated, we used data from the clicktracking logs that logged how each editing user arrived at the edit pane. This metric allows for differentiation between editors who made edits directly through the call to action and those who entered via the usual vectors.

Possible values:

- Tab: Clicked the edit tab at the top of the page

- Section: Clicked the section [edit] link

- CTA: Clicked the "Edit this page" button in the call to action (not relevant for 0X)

Research Questions

How are new editor conversions affected?

To look for differences between the conversion rates of the three experimental conditions, the total number of new editors who appeared to be making their first edit to the sampled articles was observed during the period between April 27th and May 7th. New editors by origin plots the total number of new contributors, stacked by how the click tracking logs suggest they accessed the edit pane. Both in total and by origin, option 1E converted more new contributors than the control condition (0X). This result represents strong evidence against the cannibalization hypothesis.

| exp. condition | origin | new users |
|---|---|---|
| 0X | tab | 39 |
| 0X | section | 77 |
| 1E | tab | 49 |
| 1E | section | 91 |
| 1E | cta | 31 |
| 4E | tab | 36 |
| 4E | section | 77 |
| 4E | cta | 178 |

To determine whether the differences in the number of conversions are real and not simply random anomalies, a χ2 significance test was performed with page views as the denominator. According to data retrieved by User:DarTar, the random sample of pages upon which the experiment was loaded was viewed 9,424,041 times. One might naively assume that dividing that number by three gives the number of views that fell into each bucket, but since bucket assignment is random, the actual values are probably slightly skewed. Using a binomial approximation for the bucketing strategy, we can find the expected standard error for the underlying proportion (sqrt(p*(1-p)/n) = sqrt(.33*(1-.33)/9,424,041) = 0.00004807). To determine the number of views likely to be affected by this under the bucketing strategy, we can multiply the proportion's standard error by the expected number of views per condition (0.00004807 * 3141347 = 151.00). In order to apply variance in observations conservatively, we can draw 95% confidence intervals around each condition's views (9,424,041/3 ± SE*1.96[5] = 3141347 ± 151.00*1.96, a half-width of 295.96) and use the closest limits of the confidence intervals as the n for a χ2 significance test. Even with this conservative approximation of views taken into account, the proportions of converted new editors are significantly different across all three conditions (0X < 1E: p=0.002, 1E < 4E: p<0.001). Estimated proportion of new editor conversion plots the estimated conversion/views proportions with conservative confidence intervals based on the view rate approximation.
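As a rough illustration of the quantities being compared, the sketch below totals the new users per condition from the origin table and divides by the naive even split of page views. It is not the original analysis code; the variable names are hypothetical, and the conservative confidence-interval adjustment to the view counts is left out.

```python
# New users by (condition, origin), copied from the table above.
new_users = {
    ("0X", "tab"): 39, ("0X", "section"): 77,
    ("1E", "tab"): 49, ("1E", "section"): 91, ("1E", "cta"): 31,
    ("4E", "tab"): 36, ("4E", "section"): 77, ("4E", "cta"): 178,
}

TOTAL_VIEWS = 9_424_041
# Assumption: views split evenly across the three buckets (the naive estimate).
views_per_condition = TOTAL_VIEWS / 3

# Sum conversions over origins for each condition.
totals = {}
for (condition, _origin), count in new_users.items():
    totals[condition] = totals.get(condition, 0) + count

# Conversions per million page views, for readability.
rate_per_million = {
    condition: count / views_per_condition * 1_000_000
    for condition, count in totals.items()
}

for condition in sorted(totals):
    print(condition, totals[condition], round(rate_per_million[condition], 1))
```

The per-condition totals sum to 578, which matches the size of the aft_new_users dataset described under Datasets.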

How is the rate of new productive editors affected?

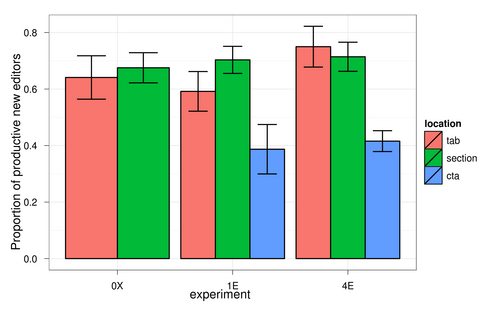

To determine the rate of productive editors elicited via each of the experimental conditions, we use the metric for productive editors (described above) that categorizes an editor as productive if they make at least one contribution to an article that is not reverted in the first 48 hours. Productive editors by experiment plots the proportion of productive editors. Compared to the control condition (0X), a χ2 test shows the proportion of productive editors to be lower, but not significantly so, in the 1E condition (diff=0.05, p=0.463) and significantly lower in the 4E condition (diff=0.127, p=0.025).

Productive editors by origin of first edit breaks down the proportion of productive editors by how they initially reached the edit pane. A χ2 test shows that the proportion of productive editors originating via the CTA is significantly lower than the proportion originating via the tab or section link in both experimental conditions (1E: p=0.009, 4E: p<0.001). This result is evidence that editors entering via the CTA are significantly less likely to be productive in their first week than editors originating from the standard edit links. However, it is important to note that, despite the significantly lower proportion, ~40% of these editors still manage to make at least one productive contribution in their first week.

How productive are these new editors?

To explore the productivity of new edits elicited via the experiment, we measured the number of edits the editors made in their first week compared with the raw number of productive vs. unproductive contributions. Revisions per new editor week plots the average number of revisions that each editor performed based on their experimental condition. A Student's t-test fails to find a significant difference between the control and 1E (p=0.778) or 4E (p=0.214).

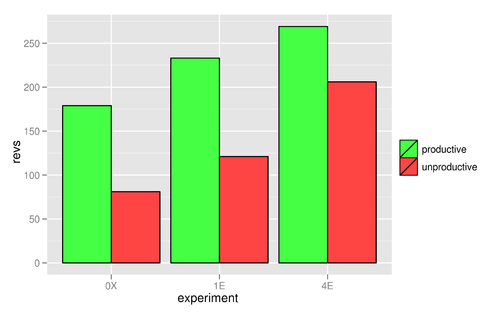

To explore the raw benefit (productive edits) and additional work (unproductive edits) incurred by the incoming new editors, the raw totals of each were compared across conditions. A χ2 test shows that the proportion of productive edits in condition 1E was lower than in the control, but not significantly so (p=0.48), while in condition 4E it was significantly lower (p=0.002). Productivity vs anti-productivity plots the raw number of productive and unproductive edits received in the experimental cases. This result suggests that the indirect CTA in option 1E did not substantially lower the overall productivity from the control, but with high confidence the direct CTA of 4E did.

It's important when consuming these results to keep in mind that only the first week's worth of new user activity was monitored. Due to constraints in the analysis time period, the long term productivity of editors in these cases could not be observed and is therefore not represented by these statistics or plots.

| exp. condition | productive | edits | editors |
|---|---|---|---|
| 0X | yes | 179 | 77 |
| 0X | no | 81 | 39 |
| 1E | yes | 233 | 105 |
| 1E | no | 121 | 66 |
| 4E | yes | 269 | 156 |
| 4E | no | 206 | 135 |
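The χ2 comparisons of productive editors and productive edits can be approximately reproduced from the counts in this table. The sketch below is not the original analysis code. The page does not state which variant of the test was used, so a 2×2 test with Yates' continuity correction is assumed here; the function and variable names are hypothetical.

```python
import math


def chi2_yates(a, b, c, d):
    """Yates-corrected chi-squared test for the 2x2 table [[a, b], [c, d]].

    Returns (statistic, p_value) with one degree of freedom.
    """
    n = a + b + c + d
    corrected = max(0.0, abs(a * d - b * c) - n / 2)
    statistic = n * corrected ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Survival function of chi-squared with 1 df: erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# (productive, unproductive) counts per condition, copied from the table above.
editors = {"0X": (77, 39), "1E": (105, 66), "4E": (156, 135)}
edits = {"0X": (179, 81), "1E": (233, 121), "4E": (269, 206)}

for label, counts in (("editors", editors), ("edits", edits)):
    for condition in ("1E", "4E"):
        stat, p = chi2_yates(*counts["0X"], *counts[condition])
        print(f"{label}: 0X vs {condition}: chi2={stat:.2f}, p={p:.3f}")
```

Under the continuity-correction assumption, the resulting p-values should land close to the ones reported in the text; an uncorrected test gives somewhat smaller values.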

References

1. Halfaker, A., Kittur, A., Kraut, R., and Riedl, J. 2009. A Jury of Your Peers: Quality, Experience and Ownership in Wikipedia. WikiSym. ACM.
2. Editor Trends Study
3. Suh, B., Convertino, G., Chi, E. H., and Pirolli, P. 2009. The singularity is not near: slowing growth of Wikipedia. WikiSym'09. Article 8, 10 pages. DOI=10.1145/1641309.1641322
4. Note that it is impossible to track individual users until the time at which they make an edit.
5. Note that 1.96 is the 97.5% quantile for a standard normal distribution.