Grants:IdeaLab/Automated good-faith newcomer detection

Project idea

What is the problem you're trying to solve?

Recent changes patrolling, review and warning templates have become common to curation practice in large wikis. Research suggests that when good-faith newcomers interact with these quality control processes, they tend to be demotivated and frustrated by the lack of personal human interaction[1]. But efficiency is a serious concern. For example, English Wikipedia is edited 160k times per day. Reviewing edits at that scale requires that we minimize the amount of time that patrollers need to spend on each edit (and therefore on the contributing editor). This is why warning templates and high-speed automation have become so popular. Having a real human interaction with every new editor whose contribution was rejected would require an overwhelming additional investment of time and energy.

What is your solution?

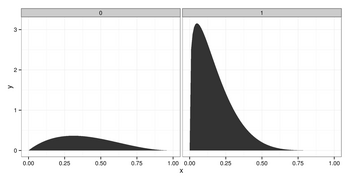

We know how to predict which new editors are likely to be editing in good faith.[2] If the predictions of such a model were available as a service, automated quality control tools like Huggle could incorporate the prediction signal: when a Huggle user reverts a newcomer who is likely to be editing in good faith, the tool would interrupt them and encourage them to take a little extra time to have a personal interaction.

Further, such a model could feed into systems like HostBot that route good-faith newcomers to newcomer support spaces like the Teahouse.
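As a rough illustration of how a tool might consume such a prediction signal, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Newcomer` record, the 0.8 cutoff, and the action names are hypothetical and are not part of Huggle, HostBot, or any existing service.

```python
from dataclasses import dataclass

# Hypothetical cutoff; a real deployment would tune this against labeled data.
GOOD_FAITH_THRESHOLD = 0.8


@dataclass
class Newcomer:
    username: str
    # Assumed output of the prediction service, a probability in [0, 1].
    good_faith_probability: float


def is_likely_good_faith(newcomer: Newcomer,
                         threshold: float = GOOD_FAITH_THRESHOLD) -> bool:
    """Return True when the predicted probability meets the cutoff."""
    return newcomer.good_faith_probability >= threshold


def on_revert(newcomer: Newcomer) -> str:
    """Pick what a Huggle-like tool might do when this newcomer is reverted."""
    if is_likely_good_faith(newcomer):
        # Interrupt the patroller and suggest a personal message.
        return "prompt_personal_message"
    return "standard_warning_template"


def should_invite_to_support_space(newcomer: Newcomer) -> bool:
    """Decide whether a HostBot-like system might route this newcomer onward."""
    return is_likely_good_faith(newcomer)
```

Both consumers share a single check so that the cutoff can be adjusted in one place. In practice each tool might want its own threshold, since an unnecessary interruption and an unnecessary invitation have different costs.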

There are many potential use cases for such a prediction model. By releasing a prediction model for good-faith newcomers, we could empower a whole class of new tools directed towards supporting good-faith new editors and targeting them for socialization & training (as opposed to rejection and warning).

Goals

Get Involved

About the idea creator

I'm a Senior Research Scientist at the Wikimedia Foundation. I've personally performed research studies around the retention of newcomers, the quality of new article creations, and the design of machine learning tools for supporting curation practices.

Participants

Endorsements

- endorse we need to move away from automatic reversion based on source, and move to assessment and rejection based on quality of edit. there are some semi-automatic welcomes, but automating this process will free up the humans to do other tasks. Slowking4 (talk) 15:30, 1 March 2016 (UTC)

- Critical to editor uptake. Any tools which allow identification of wheat from chaff a good idea Casliber (talk) 02:51, 3 March 2016 (UTC)

- Endorse; I think the idea could even be expanded to guiding detected newbies, but this is a good start. {{Nihiltres |talk |edits}} 16:54, 8 March 2016 (UTC)

- Not entirely clear on how the detection will work in practice, but if it works, it will be quite valuable Sphilbrick (talk) 14:52, 15 March 2016 (UTC)

- I really like the idea as it causes more newcomers to stay on the site. Muchotreeo (talk) 15:19, 16 March 2016 (UTC)

Expand your idea

Would a grant from the Wikimedia Foundation help make your idea happen? You can expand this idea into a grant proposal.

- [1] m:R:The Rise and Decline

- [2] Halfaker, A., Geiger, R. S., & Terveen, L. G. (2014, April). Snuggle: Designing for efficient socialization and ideological critique. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 311-320). ACM.