Community Insights/2018 Report/Learning & Evaluation

The Learning & Evaluation Team (L&E) helps track the impact of the work across the Wikimedia movement. They guide other Community Engagement teams in executing effective grants. They do this through ongoing analysis, learning, and evaluation support. They develop the capacity of internal and affiliate leaders in program evaluation, design, and learning. They provide a hub of learning and evaluation resources. They execute and apply research and evaluation work to inform WMF’s community engagement strategy.

Besides building capacity for Wikimedia affiliates, the Learning & Evaluation team also supports the Affiliations Committee. They provide administrative and consultative support that helps the committee to make decisions about Wikimedia affiliates.

In this survey, the Learning and Evaluation team would like to answer the following questions:

- What are the characteristics of Wikimedia program & affiliate organizers?

- Are there changes to evaluation capacity development among program and affiliate organizers?

- Is there evidence of improved evaluation capacity for organizers who have participated in the Wikimedia Foundation's evaluation capacity programs?

- What are the support needs related to program evaluation for those who organize Wikimedia programs?

- What are the support needs for Wikimedia affiliates?

Results

1. What are the characteristics of Wikimedia program & affiliate organizers?

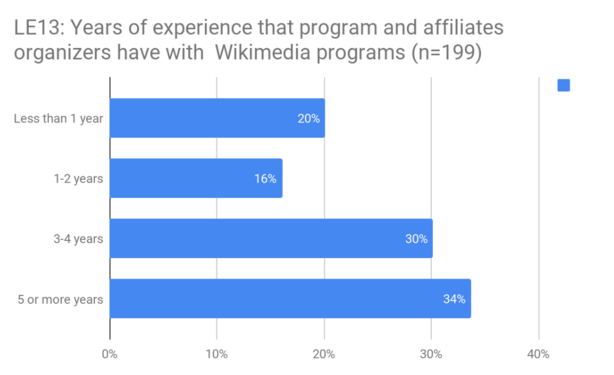

The average program organizer has been implementing programs for 2.77 years (LE13). While there was a statistically significant difference in the average response between men and women, both groups reported a median of 1 to 2 years of experience.[1]

Program organizers from Asia may have more years of experience than organizers from Africa, North America, Eastern Europe, and Western Europe.[2] We found no differences between organizers from the Global North and those from emerging communities.
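Note 2 contrasts the Games-Howell and Bonferroni post-hoc tests. As a rough illustration of why a pairwise difference can fail the Bonferroni test, the sketch below applies a Bonferroni adjustment across all pairwise region comparisons. The region list is taken from the sentence above and may not match the full set of regions compared in the survey, and the raw p-value is hypothetical; the report does not publish the raw values.

```python
from itertools import combinations

# Regions named in the paragraph above; the survey's full region list is an assumption.
REGIONS = ["Asia", "Africa", "North America", "Eastern Europe", "Western Europe"]


def bonferroni_adjust(p_values):
    """Multiply each raw p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


pairs = list(combinations(REGIONS, 2))  # every pairwise comparison
alpha = 0.05
per_test_alpha = alpha / len(pairs)     # threshold each raw p-value must beat

# Hypothetical raw p-values, one per pair (illustrative only, not survey results).
raw = [0.02] * len(pairs)
adjusted = bonferroni_adjust(raw)

print(len(pairs), per_test_alpha)
print(adjusted[0] < alpha)  # a raw p of 0.02 no longer passes after adjustment
```

With many pairwise comparisons the per-test threshold becomes strict, which is one reason a difference can appear under a less conservative procedure and disappear under Bonferroni.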

Program organizers most often reach out to Wikimedia friends for help with implementing Wikimedia programs (LE01). The second most often reported resource overall is Wikimedia Meta. Looking only at social and network resources, Wikimedia organizers reported reaching out to other Wikimedia friends and Wikimedia affiliates the most. Looking only at media resources, organizers reported using Wikimedia Meta most often, followed by the Outreach wiki.

A smaller proportion of affiliate organizers reported implementing various Wikimedia programs (LE12). Compared to 2017, affiliate organizers in 2018 less often reported organizing programs such as Edit-a-thons, Editing Workshops, Education programs, and Wiki Loves Monuments.

A large majority of Wikimedia organizers surveyed were considered primary contacts of their Wikimedia affiliate (LE35). Yet, about 3.1% reported that they were not primary contacts of their Wikimedia affiliate.

The most common type of affiliate organizer is "organization leadership/coordinator", which accounts for about 46.5% for both affiliates and organizers (LE17). "Affiliate member" and "Volunteer" were selected the next most often, followed by "Staff", which was selected the least.

2. Are there changes to evaluation capacity development among program and affiliate organizers?

The Learning and Evaluation team has conducted surveys for the last 4 years that analyze the evaluation capacity of Wikimedia program organizers.

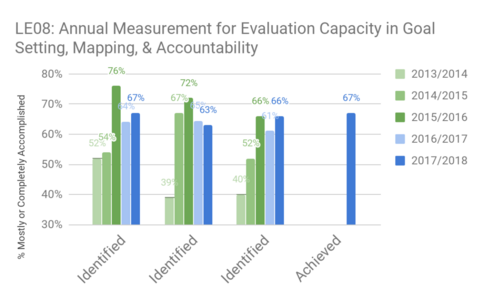

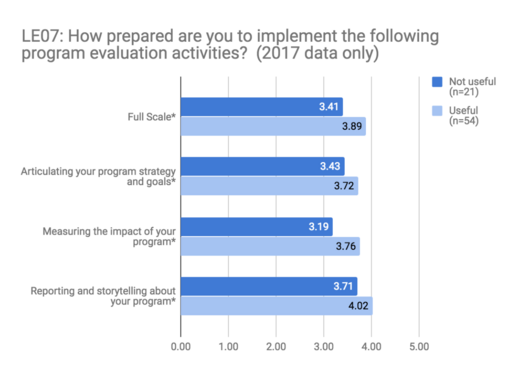

This is measured using two sets of questions. The first set measures self-perceptions of preparedness (LE07). The second measures confidence in doing various program and evaluation-related activities (LE08).

In general, preparedness for evaluation among organizers has remained steady since 2017 (LE07). Program and affiliate organizers reported, on average, articulating their program goals and strategies more in 2017 than in 2018. We detected no statistically significant changes for any other statement between 2017 and 2018.[3] Evaluation capacity has also remained steady compared to previous surveys going back to 2014. Between 2014 and 2016, the Evaluation Pulse survey only reached program organizers who had been in contact with the Foundation through various programs. With CE Insights, the population expanded to a broader community. Capacity has remained the same rather than decreasing, which is what we hope to see. In the graphs for LE07 and LE08, the green bars represent our original surveys, which reached only program organizers involved in Foundation work, while the blue bars represent the new sampling for CE Insights.

Evaluation capacity in goal and target mapping has remained generally steady since 2017 (LE08). Compared with data from previous surveys, the CE Insights results have generally remained the same. CE Insights is the blue bar on the following charts. Comparing the full LE08 scale between men and women in 2018, we found small but statistically significant differences: men reported 3.8 on average, while women reported 4.1.[4] In the 2018 data, we found no differences between the Global North and emerging communities or across regions.

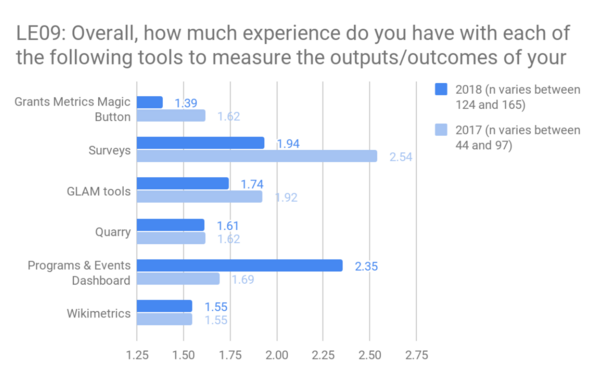

Among both affiliate and program organizers, there were mixed levels of experience with tools used for gathering metrics or evaluating programs (LE09). Among affiliate organizers, we detected no statistically significant decreases when comparing experiences with evaluation tools. The exception is the Program & Events Dashboard, where experience increased. For program organizers, experience with surveys, GLAM tools, and Quarry all decreased from 2017.[5] For affiliate organizers, experience with surveys and Wikimetrics both decreased from 2017.[6] When examining the 2018 tools for differences between demographics, we found statistically significant differences for Quarry and the Magic Button. Experience with Quarry is higher among men (mean of 1.73) than women (mean of 1.31). Organizers from emerging communities reported an average of 1.97, while organizers from the Global North reported an average of 1.41.[7] Also, organizers from Eastern Europe reported higher experience with Quarry (mean of 2.2) than those from Oceania (mean of 1.0).[8] We found no other differences between other regions. For the Magic Button, experience was higher among organizers from emerging communities, with an average of 1.96, than among organizers from the Global North, who reported an average of 1.46.[9]
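Several of the comparisons above cite Mann-Whitney tests with mean ranks and z-scores. The following is a minimal sketch of how such figures can be derived from two groups of ratings, assuming the large-sample normal approximation without a tie correction. The function names and sample ratings are hypothetical, and the report does not state which software or correction was actually used.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney(group_a, group_b):
    """Mean ranks and z-score (normal approximation, no tie correction)."""
    ranks = average_ranks(list(group_a) + list(group_b))
    n_a, n_b = len(group_a), len(group_b)
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    mean_u = n_a * n_b / 2
    sd_u = math.sqrt(n_a * n_b * (n_a + n_b + 1) / 12)
    return {
        "mean_rank_a": rank_sum_a / n_a,
        "mean_rank_b": sum(ranks[n_a:]) / n_b,
        "z": (u_a - mean_u) / sd_u,
    }


# Hypothetical experience ratings for two groups (not survey data).
result = mann_whitney([1, 2, 3], [4, 5, 6])
print(result)
```

Survey ratings on a short scale contain many ties, so a real analysis would normally apply a tie correction to the standard deviation; it is left out here to keep the sketch short.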

Wikimedia organizers most often reported that they share knowledge about Wikimedia programs using blogs (LE04). About 44% of 183 organizers reported that they use blogs about half the time or more. 39% reported writing guides or case studies. 30% reported creating data visualizations or graphics, and 24% reported writing learning patterns.

About 40% of Wikimedia organizers reported having a project-based and community-wide communications plan. 34% reported not having any communications plan (LE06). The number of organizers who reported learning about storytelling with data at a conference or online was 27% (LE05).

3. Is there evidence of improved evaluation capacity for organizers who have participated in the Wikimedia Foundation's evaluation capacity programs?

Wikimedia Foundation programs contribute to evaluation capacity for program and affiliate organizers to a certain extent. We examined whether having received support for organizing programs (PR36) is associated with preparedness (LE07) and confidence in evaluation activities (LE08).

We found a weak correlation between PR36 and LE07 and LE08, suggesting that our work with program organizers may influence their knowledge of evaluation.[10]

In our 2017 data only, organizers who found Learning Days useful more often reported feeling prepared to document program activities and events and to track and monitor their program accomplishments (LE07).[11]

We found differences between genders: women reported a statistically significantly higher mean than men. In the 2018 data, we found no differences between the Global North and emerging communities or across regions.

4. What are the support needs related to program evaluation for those who organize Wikimedia programs?

Looking at existing tools, organizers reported that the Program and Events Dashboard (56%) and surveys (52%) were the easiest tools to use (LE10), while Quarry (23%) and the Grants Metrics button (22%) were least often reported to be easy or very easy to use. About 36% and 35% of organizers, respectively, reported that GLAM Tools and Wikimetrics are "Easy" or "Very Easy" to use.

Most of the challenges were about the Program & Events Dashboard (22).

These challenges related to:

- Functionality (16)

- Reducing the number of technical steps or making the tool more intuitive

- Issues with the ability to search or local customization (LE34).

A handful of people (3) also noted access issues experienced at events: getting people to create accounts and log into the Dashboard at events is not as easy as using an event page.

The biggest challenges with Quarry (9) were quite consistent. There is a language barrier in needing to know complex SQL coding (5). There are also some challenges around the alignment of metrics and parameters from one data group to another.

Seventeen respondents commented about the Program & Events Dashboard and eleven commented about Quarry. General difficulties reported centered on lack of familiarity with or irregular use of the tools, compounded by issues with the tool interface or parameter complexity. Inflexibility for localization (18) and poor documentation of the tools (10) were also mentioned.

For the coming 12 months, organizers most often reported wanting to learn about collecting data and tracking progress, as well as analyzing and interpreting what data mean (LE02).

Less often selected were:

- Building a culture of learning and evaluation

- Setting goals and measurable targets

- Reporting and sharing outcomes of projects

5. What are the support needs for Wikimedia affiliates?

Chapter organizers reported higher preparedness in finance management & budgeting and project management when compared to user group organizers (LE25). In general, chapters were most prepared for project management (mean = 3.93), finance (mean = 3.68), and communications (mean = 3.43). User groups were most prepared for communications (mean = 3.43), project management (mean = 3.42), and volunteer engagement (mean = 3.31). User groups reported being least prepared for finance management & budgeting. Chapters reported being least prepared for fundraising and grant applications.

Wikimedia affiliate organizers most often reported that their leadership needs support with volunteer engagement (LE26). The second most often reported support was donor outreach. The next most selected were Human resources and culture, Governance, and Leadership.

About 61% of Wikimedia affiliates reported that they "Mostly" or "Completely" feel their organization is engaged with the Wikimedia movement on a global level (LE28). About 18% reported that they felt "Not at all" or "Slightly" engaged with the Wikimedia movement on a global level.

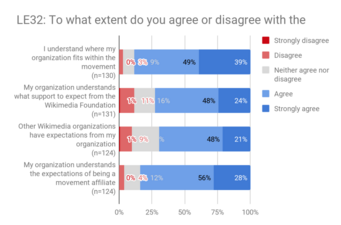

For affiliate engagement, affiliate organizers most often reported that they understand where their affiliate fits within the Wikimedia movement (LE32). About 88% "Agreed" or "Strongly agreed" that they understand where their organization fits in the movement. About 84% reported that their organization understands the expectations of being an affiliate. About 72% reported that they understand what support to expect from the Wikimedia Foundation. About 69% of affiliate organizers reported that other Wikimedia organizations have expectations of them.

The strength that affiliate organizers most often reported (LE23) was Projects/Programs (40 participants).

The programs reported included:

- Education (7 participants)

- GLAM (4 participants)

- Events (4 participants)

- Other diverse programs and innovations (8 participants)

- The group’s general skills at program design & management (6 participants)

- Inter-group/global coordination across projects and languages (4 participants).

The second most noted strength was Community (36 participants). Most groups noted the positive characteristics of their community’s capacity and relations, or described high engagement by their community: “With our work we have managed to win the trust of people (institutions, national community, international community).”

The third most noted strength was diversity (23). This was most often diversity of activities & projects or cultural diversity of their collaborators, but some noted it as a value of their organization: “We are diverse people in terms of language capability”; “Diversity matters to us”.

The challenge that affiliate organizers most often reported (LE23) was volunteer engagement (38). Many noted a lack of volunteers due to lack of time, burnout, or lack of interest. A few people noted issues with volunteer access.

The second most often noted challenge was the need for funding support (36). Most comments referred to the need for increased or more diverse sources of funding, either from WMF or from other local or regional supports, in order to resource affiliate projects and events: “Need for internet enabled devices to train and lend for volunteer work”.

There were some concerns expressed that resources were diminishing or insufficient overall. “Decreasing support from donors, government, partners”. A handful explicitly called out the need for more support from others in the movement. “Insufficient support from more experienced WMF affiliates to help learn skills to grow organization”.

The third most noted challenge was in the area of community organization and coordination (31). Comments covered organizational development/growth, strategic coordination & setting direction, partnership needs, events coordination, and territorial conflicts.

The most often reported non-monetary support need (LE27) was Direct Support & Collaboration (45 participants). This was most often support for bringing new and more diverse people into the movement, people who have the skills to fill gaps in knowledge or leadership (8 participants).

Second most noted in terms of direct support needs were:

- Support needed from the Foundation (8 participants).

- A need for clarity on the direction of the Foundation and its rules and requirements

- Direct technical support or a need for recognition of affiliate work

Following that were:

- Direct technical support (7 participants)

- Collecting metrics

- Translation

- Developing software on MediaWiki

- Organizing the meta knowledge base

The third most noted direct supports were the need for support from contributors (5 participants) or for contributors (3 participants) or for connecting to knowledge bodies external to the movement (3 participants).

As with the points raised in the Challenges section, people described support needs around recruiting and engaging more volunteers and around technical barriers that could use better support.

The second theme in non-monetary support need (LE27) was Organizational Development (31 participants).

The noted needs included:

- Affiliate management and governance support (7 participants)

- Support to develop into a chapter or legal entity (5 participants)

- Develop partnerships (4 participants)

- Engage with other affiliates (3 participants)

- To expand geographically (2 participants)

The third most often reported non-monetary support need (LE27) was Skills Training (26 participants). Affiliate organizers reported a wide variety of skills and training needs.

Examples include:

- Technical or personalized training

- Recruitment and how to engage more people

- Organizational management

- Event management

- Training certifications

- Development from larger affiliates

- Legal training

- Conflict management

Most useful results

- We continue to see upward trends in program and evaluation capacities across affiliates, grantees, and program organizers. Graphs in section 2 show that evaluation capacity continues to improve among affiliates and program organizers.

- Results support our strategy of capacity building through online resources, tools, and leveraging peer networks. Results are in line with our work to create peer-mentoring spaces online and offline, as well as to document resources on Meta (such as Learning patterns). We see this through increased use of the Programs & Events Dashboard, work on the Wikimedia Resource Center, and the finding that people mostly go to their friends or to Meta to find resources. Respondents "Mostly" or "Completely Agree" with evaluation skills through direct Foundation support, which is in line with the training support we provide.

Next steps

(Forthcoming)

Notes

1. p-value<0.05, z=-2.202. Mean rank for men = 73; mean rank for women = 58.

2. Differences between organizers from Asia and those from other regions were significant only with the Games-Howell post-hoc test and not the Bonferroni test, which suggests this difference may not be real.

3. Kruskal-Wallis test, p-value<0.05; z-score=-2.713.

4. Medians reported, Kruskal-Wallis test; p-value>0.05

5. Surveys: Mann-Whitney, p-value < .05, z = -2.515; GLAM Tools: Mann-Whitney, p-value < .001, z = -3.657; Quarry: Mann-Whitney, p-value < .05, z = -2.332

6. Surveys: Mann-Whitney, p-value < .01, z = -2.869; Wikimetrics: Mann-Whitney, p-value < .05, z = -2.514

7. Mann-Whitney, p-value < .05, z = -2.936

8. Games-Howell post-hoc, p-value < .05; no significant difference in Bonferroni test.

9. Mann-Whitney, p-value < .05, z = -2.084

10. regression analysis shows that there (need to add details)

11. For "documenting": p-value<0.05; z-score = -1.995; For "tracking": p-value<0.05; z-score = -2.165
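The z-scores in these notes can be related to two-sided p-values under a standard normal approximation. The sketch below shows that conversion using only the Python standard library. The helper name `two_sided_p` is hypothetical, and the report does not say how its p-values were actually computed, so this is an illustrative check rather than a reproduction of the analysis.

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a z-score under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# z-scores quoted in the notes above.
z_scores = {
    "note 1": -2.202,
    "note 5, GLAM Tools": -3.657,
    "note 11, documenting": -1.995,
    "note 11, tracking": -2.165,
}

for label, z in z_scores.items():
    p = two_sided_p(z)
    print(f"{label}: z = {z}, p = {p:.4f}, below 0.05: {p < 0.05}")
```

Any |z| above roughly 1.96 corresponds to a two-sided p-value below 0.05, which is a quick way to check a reported z-score against its stated significance level.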